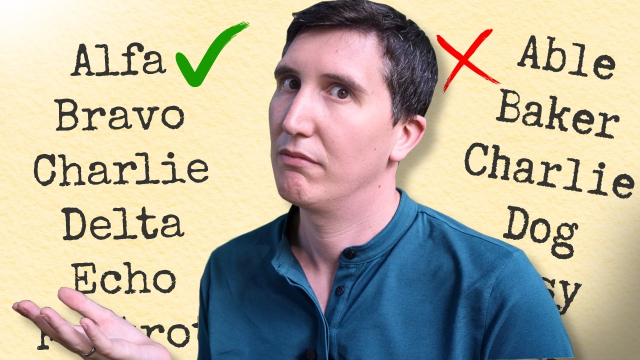

This video chronicles that development.

youtu.be/UAT-eOzeY4M?si=35f3ZM…

#communications #HamRadio #AmateurRadio

The genius logic of the NATO phonetic alphabet

Let’s uncover the secrets of the NATO phonetic alphabet! 🌏 And you can get an exclusive NordVPN deal + 4 months extra here → https://nordvpn.com/robwordsvpn... YouTube

The Best Press Release Writing Principles in the AI Era

For people working with media and PR, it's quite easy to spot AI-generated press releases. AI output is wordy and repetitive to the point of annoyance. Without human revision, it's hard to read.

But even before the AI era, many press releases were full of jargon and quite difficult to read.

The reason is simple, and it is neatly pointed out in this article I read on PR News Releaser: “Think Like a Reader” is the Best Press Release Strategy -- ... they’re written for the wrong audience... disconnect between what companies want to say and what readers actually want to read.

How true that is.

Even highly educated people would appreciate a press release written in simple words with clear explanations, not just generic, self-praising, self-promotional sentences.

The article also provides clever strategies for convincing your boss that writing for the reader is the right way to compose a press release. Check it out.

“Think Like a Reader” is the Best Press Release Strategy

Most press releases die when its online or land in inboxes. They sit unread, unshared, and ultimately ineffective—not because they lack newsworthy information… News PR (PR News Releaser)

AI Slop? Not This Time. AI Tools Found 50 Real Bugs In cURL - Slashdot

The Register reports: Over the past two years, the open source curl project has been flooded with bogus bug reports generated by AI models. developers.slashdot.org

Sonic is gonna get some knuckles

scworld.com/news/sonicwall-con…

SonicWall confirms all Cloud Backup Service users were compromised

A deeper analysis found that all the firewall configurations were compromised, not just 5% of users as first reported. Steve Zurier (SC Media)

RE: infosec.exchange/@codinghorror…

One guard always tells the truth.

The other guard always lies.

I am being slightly disingenuous here.

One of the many advantages of LLMs over SO is that the LLM is friendly and fast.

That's why Kids Today™ prefer Discord to forums. Someone replies immediately. You don't have to wait for an answer and check back.

LLMs are rarely rude. It can be terrifying to make yourself vulnerable and admit in public you don't know something basic. Especially when humans are mean and judgemental.

Encryption is a human right.

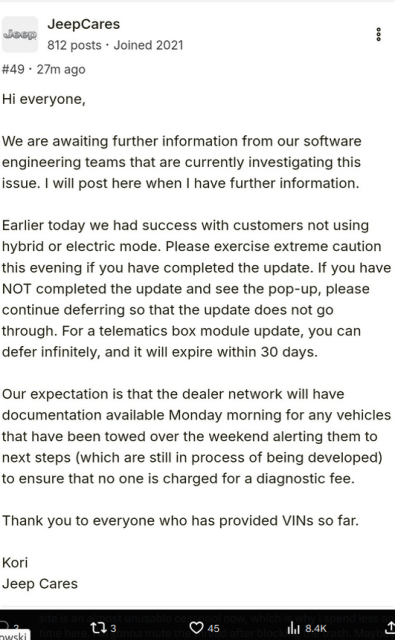

Everyone knows the weekends are the best time to push important updates, right?

From Jeep Wrangler forum: Did anyone else have a loss of drive power after today's OTA Uconnect update?

On my drive home I abruptly had absolutely no acceleration, the gear indicator on the dash started flashing, the power mode indicator disappeared, an alert said shift into park and press the brake + start button, and the check engine light and red wrench lights came on. I was still able to steer and brake with power steering and brakes for maybe 30 seconds before those went out too. After putting it into park and pressing the brake and start button it started back up and I could drive it normally for a little bit, but it happened two more times on my 1.5 mi drive home.

Source: x.com/StephenGutowski/status/1…

More here: jlwranglerforums.com/forum/thr…

and here: news.ycombinator.com/item?id=4…

2024 4xe Loss of Motive Power after 10/10/25 OTA update

Did anyone else have a loss of drive power after today's OTA Uconnect update? On my drive home I abruptly had absolutely no acceleration, the gear indicator on the dash started flashing, the... JesseT (Jeep Wrangler Forums (JL / JLU) -- Rubicon, 4xe, 392, Sahara, Sport - JLwranglerforums.com)

Oops, forgot to let our followers here know that we released 0.87.1 last week! This version includes a fix for a small bug that crept into 0.87.0 that prevented syncing of weightlifting workouts on Garmins.

But the bigger news is that we also managed to get reproducible builds at F-Droid in place in time for this release!

As usual, more details in our blog post: gadgetbridge.org/blog/release-…

You mention that you're publishing both your self-signed and the F-Droid signed build on F-Droid? Do you have more details about how that works and what you had to do to set that up?

I've wanted to have Catima be RB on F-Droid without breaking existing updates for quite a while, but I didn't really manage to make any progress when trying to talk to the @fdroidorg team, so I'd love to know how you got this working :)

My apps (#TinyWeatherForecastGermany & #imagepipe) are reproducible on #izzydroid , but I never switched on #fdroid ...

We're looking for interesting questions around @matrix , its history, its technology, statistics and fun facts for The #MatrixConf2025 Pub [quizzz]!

Do you have suggestions? Please share them with the conference team in the following form: forms.gle/6tbry4Zdzb1fYVfx5 or contact us at #events-wg:matrix.org

![Questions for The Matrix Unconference Pub [quizzz]](https://fedi.ml/photo/preview/640/712627)

Questions for The Matrix Unconference Pub [quizzz]

Do you have any good [matrix] history, stat or fun questions to ask during the Unconference Pub [quizzz] ? It should be nice to play within a crowd of multiple people guessing the correct answer. Google Docs

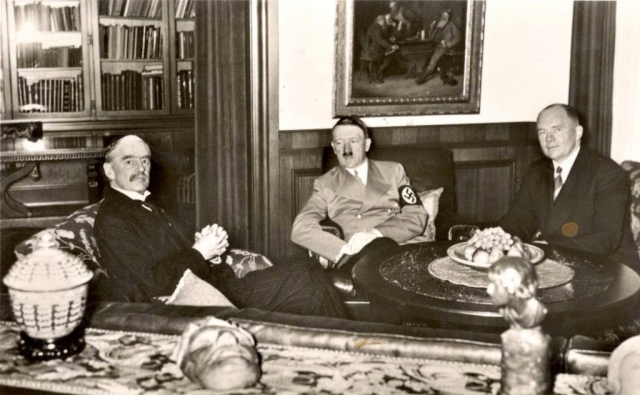

The missile defense system. Anus Tangerinus wants us to join, so we can pay for it. It's not to protect us.

Our PM is an appeaser. Like Chamberlain.

Thing is, the fact that AI could do it for you basically means that it has been done before and the AI was trained on it.

What you actually wanted to say is: "I spent some time rebuilding someone else's work because I wasn't able to find it on Google."

I know this is overdramatized, but also not totally wrong.

Matt Campbell reshared this.

@menelion The part I find the most distressing is when someone has a really good idea, and then they try to get AI to do it, the AI claims to have implemented their idea (but hasn't: it can't), and then they think there's a problem with the idea.

These systems are the polar opposite of creativity.

You are partially correct, but this is an oversimplification of how an AI model, for example an LLM, works. It can, and does, use data that it got during its training phase, but that's not the entire story; otherwise it'd be called a database that regurgitates what it was trained on. On top of the trained data there's zero-shot learning, for example figuring out a dialect of a language it hasn't been trained on, based on the statistical probability of weights from the trained data, as well as combining existing patterns into new patterns, thus coming up with new things, which is arguably part of creativity.

What it can't do, though, and this is very likely what you mean, is go outside its pre-trained patterns. For example, if you have a model that was trained on dragons and another model that was trained on motorcycles, and you combine those two models, they can come up with a story where a dragon rides a motorcycle, even though that story was not part of their training data. What it can't do is come up with a new programming language, because that specific pattern does not exist. So the other part of creativity, where you'd think outside the box, is a no-go. But a lot of people's boxes are different, and they are very likely not as vast as what the models were trained on, and that's how an AI model can be inspiring.

This is why a lot of composers feel that AI is basically going to take over eventually, because the models will have such a vast number of patterns that a director, trailer library editor, or other content creator will be satisfied with the AI's results. The model's box will be larger than any human's.

@erion @menelion Most of the generative capabilities of an LLM come from linear algebra (interpolation), and statistical grammar compression. We can bound the capabilities of a model by considering everything that can be achieved using these tools: I've never seen this approach overestimate what a model is capable of.

"Zero-shot learning" only works as far as the input can be sensibly embedded in the parameter space. Many things, such as most mathematics, can't be viewed this way.

It never will, because modern LLMs are far more capable.

They rely on non-linear activation functions (like ReLU, GELU, etc.) after the linear transformations. If the network were purely linear, it could only learn linear relationships, regardless of its depth. The non-linearities are what allow the network to learn complex, non-linear mappings and interactions between inputs.

There's also scaling, arguably an internal world model, and context awareness (which is definitely not something linear). If anything, this would underestimate a model.
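To make the point about non-linearities concrete, here is a minimal sketch in plain Python. The weights `W1` and `W2` and all function names are made up for illustration and are not taken from any real model. It shows that two stacked linear layers collapse into a single matrix, while putting a ReLU between the same two layers yields |x0 - x1|, which no single linear map can compute.

```python
def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def matmul(A, B):
    """Multiply matrix A by matrix B (both lists of rows)."""
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]


def relu(v):
    """Element-wise ReLU: negative entries become zero."""
    return [max(0.0, t) for t in v]


# Hypothetical toy weights, chosen only to make the effect easy to see.
W1 = [[1.0, -1.0], [-1.0, 1.0]]  # first layer: (x0 - x1, x1 - x0)
W2 = [[1.0, 1.0]]                # second layer: sum of the two hidden units


def linear_net(x):
    """Two linear layers with no activation in between."""
    return matvec(W2, matvec(W1, x))


def relu_net(x):
    """The same two layers with a ReLU in between."""
    return matvec(W2, relu(matvec(W1, x)))


# Without the activation, depth adds nothing: W2 @ W1 is one matrix.
W_collapsed = matmul(W2, W1)

for x in ([3.0, 1.0], [1.0, 3.0]):
    print(x, linear_net(x), matvec(W_collapsed, x), relu_net(x))
```

With these particular weights the collapsed matrix is all zeros, so the purely linear network returns 0 for every input, whereas the ReLU version returns the absolute difference of the two inputs.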

Why do LLMs freak out over the seahorse emoji?

Investigating the seahorse emoji doom loop using logitlens. vgel.me

It's not totally wrong, but I feel like maybe it's a slight oversimplification. LLMs don't just outright copy the training data; that's why it's called generative AI. That doesn't mean they will never reproduce anything in the training set, but they are very good at synthesizing multiple concepts from that data and turning them into something that technically didn't exist before.

If you look at something like Suno, which is using an LLM architecture under the hood, you're able to upload audio and have the model try to "cover" that material. If I upload myself playing a chord progression/melody that I made up, the model is able to use its vast amount of training data to reproduce that chord progression/melody in whatever style.

It would be really important for everyone to read about the theory of appeasement and how it has *never* worked.

--

The catastrophes of World War II and the Holocaust have shaped the world’s understanding of appeasement. The diplomatic strategy is often seen as both a practical and a moral failure.

Today, based on archival documents, we know that appeasing Hitler was almost certainly destined to fail. Hitler and the Nazis were intent upon waging an offensive war and conquering territory. But it is important to remember that those who condemn Chamberlain often speak with the benefit of hindsight. Chamberlain, who died in 1940, could not possibly have foreseen the scale of atrocities committed by the Nazis and others during World War II.

---

We have the hindsight today. Let's not make the same mistakes.

encyclopedia.ushmm.org/content…

The British Policy of Appeasement toward Hitler and Nazi Germany | Holocaust Encyclopedia

In the 1930s, Prime Minister Neville Chamberlain and the British government pursued a policy of appeasement towards Nazi Germany to avoid war. Learn more. Holocaust Encyclopedia

![Screenshot of posts by Stephen Gutowski (@StephenGutowski). Post 1 (12:56 PM, Oct 1, 2025, 154.4K views): "Jeep just pushed a software update that bricked all the 2024 Wrangler 4xe models, including my Willys. The future is going great." Post 2: "Here's what the issue looks like for those wondering", with a video preview titled "DON'T UPDATE Your U-Connect | Jeep Wrangler 4x..." and the description "As of October 10th 2025 the uconnect update is causing major issues for many 4xe owners. We lose." Post 3: "When mine bricked, I did the infotainment reset. You put the car in acc mode then press the radio power and tune buttons for ten seconds until the screen restarts. Then turn it on. I had to do that several times before the Jeep would drive again." Post 4: "So, here's what Jeep says. It's the telematics box, which as far as I understand it does diagnostics and ota communications, that's causing the problem. This is the sort of thing I worried about buying a new car, especially a Jeep 4xe. Oh well. dxeforums.com/threads/wrangl..."](https://fedi.ml/photo/preview/640/712643)

Chris Smart

in reply to Chris Smart

on a few Kilos) gave a Lima bean to Mike. Last November, Oscar’s Papa went to Quebec to meet Romeo. He wore a Sierra and Tango coloured Uniform. Meanwhile Victor drank Whiskey as he looked at the X-ray of the Yankee, whose arm was broken by a Zulu.