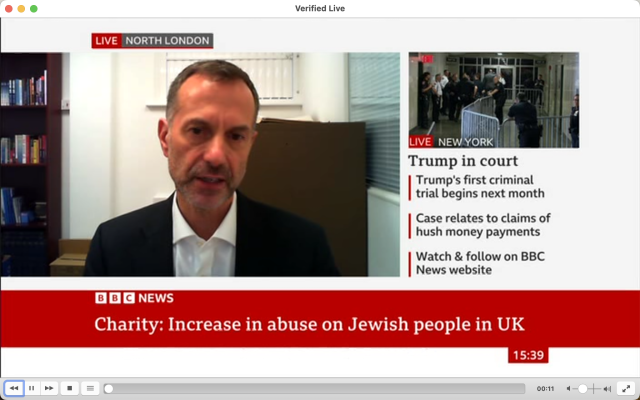

This is the Llava:34B LLLM (local large language model) running on my #MacBook Pro, describing a #screenshot from #BBC News. To me, this is as good as the information #BeMyAI would provide using #GPT4, which goes to show that we can do this on-device and get some really meaningful results. Screenshot attached; the #AltText contains the description.

Lately, I've taken to using this to describe images instead of GPT, even if it takes a little longer for results to come back. I find this quite impressive.