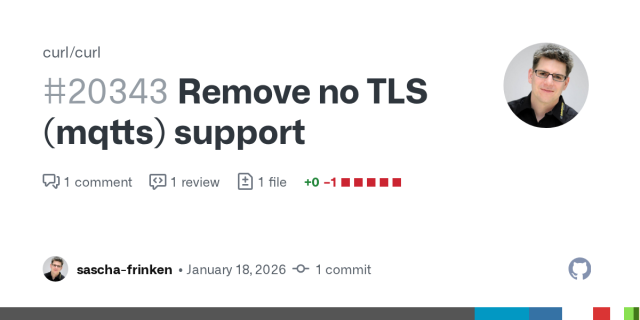

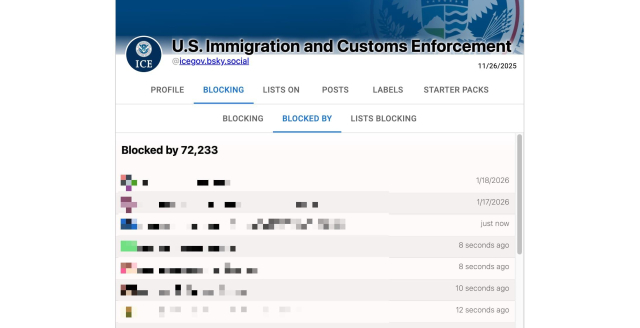

An important PSA for people who are active on #Bluesky and who, upon hearing that the ICE account was officially verified, are saying: "I will just block it."

Blocking on Bluesky is NOT PRIVATE: it's very easy to see who is blocking any account by visiting sites that list that information.

I took a screenshot from clearsky.app, listing all the accounts that are blocking ICE (I pixelated avatars and usernames for privacy purposes).

The safest bet is to mute (that info is private) 😫

This is also true about Mastodon*, but Mastodon actively tries to hide that fact from users and muddy the waters.

* It's technically hidden from users but not from the admins of the instances involved, and if you're a gov agency, you're presumably on your own instance, as seems to be the custom here for the "big players."


In a way, #Putin even got more than he could ever wish for

All for free by #Trump

Alliances shattered, internal threats, everyone really disliking the US, speaking about war within #NATO even

It's unbelievable how much damage that senile dic(tator) has done within a year

I really hope we learn from this... But history has shown otherwise, I guess

This post by Bruce Schneier contains so many thoughtful soundbites:

> The question is not simply whether copyright law applies to AI. It is why the law appears to operate so differently depending on who is doing the extracting and for what purpose.

> Like the early internet, AI is often described as a democratizing force. But also like the internet, AI’s current trajectory suggests something closer to consolidation.

schneier.com/blog/archives/202…

AI and the Corporate Capture of Knowledge - Schneier on Security

More than a decade after Aaron Swartz’s death, the United States is still living inside the contradiction that destroyed him. Swartz believed that knowledge, especially publicly funded knowledge, should be freely accessible.

Bruce Schneier (Schneier on Security)

I like looking at this through the concept of "enjoyment", which was originally developed in Japan I believe.

From that point of view, copyright only applies to a work when it is used for "enjoyment", for its intended purpose. If the work is primarily entertainment, it applies when the consumer is using it to entertain themselves. If the work is educative, it applies when the consumer is using it to learn something. It does not apply when the work is used for a purpose completely unrelated to its creation, such as testing a CD player on an unusual CD, demonstrating the performance of a speaker system, training a language model to classify customer complaints etc.

(This isn't a legal perspective, not even quite in Japan I believe, but it's a useful lens through which we can look at the world and which people can use to decide on policy.)

The “ay” style diphthong sounds clear because the first vowel and the second vowel are very far apart, so the slide into the second part is obvious. With the “eh to ee” style diphthong, those two vowels are much closer, so the second part can be hard to notice even though the text-to-IPA output looks basically the same.

This one we can mostly solve in the language packs: we can treat the second part as a glide (a “y” sound) instead of a full vowel, and we can also tweak the “eh” vowel a little so it doesn’t sit so close to “ee.” Both changes make the second part stand out more.

The “oo-ee” style diphthong having a gap is usually a different issue: our synthesizer adds a tiny boundary pause between sound segments to keep speech crisp. That helps with many consonants, but it can accidentally create a hole between two vowels. Packs can reduce that pause, but that affects everything in the language. The clean fix is in the engine: detect vowel-to-vowel transitions and don’t insert that boundary pause there, just blend smoothly. I'll build that into the next update.
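A rough sketch of the engine-side idea described above, not the actual synthesizer code: walk over adjacent sound segments and choose a smooth blend for vowel-to-vowel boundaries and the usual short pause everywhere else. The `Segment` type, the `plan_boundaries` function, and the `pause_ms` / `blend_ms` values are all hypothetical names and numbers picked for illustration.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    symbol: str      # phoneme label, e.g. "u" or "t" (illustrative only)
    is_vowel: bool   # True for vowels, False for consonants


def plan_boundaries(segments, pause_ms=8.0, blend_ms=20.0):
    """Decide what happens at each boundary between adjacent segments.

    Returns one (kind, milliseconds) tuple per boundary:
    - ("blend", blend_ms) when both neighbours are vowels, so no hole appears
    - ("pause", pause_ms) otherwise, keeping consonants crisp
    The default durations are assumptions, not values from any real engine.
    """
    plan = []
    for left, right in zip(segments, segments[1:]):
        if left.is_vowel and right.is_vowel:
            plan.append(("blend", blend_ms))
        else:
            plan.append(("pause", pause_ms))
    return plan


# An "oo-ee" style sequence followed by a consonant.
example = [Segment("u", True), Segment("i", True), Segment("t", False)]
boundaries = plan_boundaries(example)
```

Because the decision is made per boundary, a language pack's pause setting would still apply to every other transition; only the vowel-to-vowel case is treated specially.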

Remove no TLS (mqtts) support by sascha-frinken · Pull Request #20343 · curl/curl

As curl now supports TLS (mqtts), it is no longer necessary to list it as a limitation in the docs.

GitHub

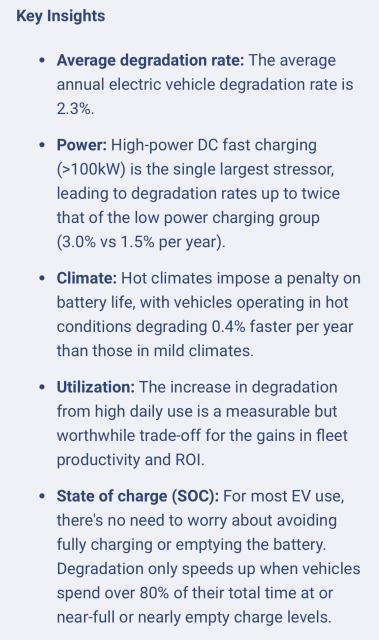

TL;DR Most EV batteries will last longer than the cars they’re in. Batteries degrade more slowly than expected. Slow charging is better. Drive an EV and don’t worry about your battery.

„Our 2025 analysis of over 22,700 electric vehicles, covering 21 different vehicle models, confirms that overall, modern EV batteries are robust and built to last beyond a typical vehicle’s service life.“

PQ leader says Legault's resignation further evidence of need for independent Quebec

cbc.ca/news/canada/montreal/qu…

tl;dr: the leader of the PQ is full MAGA. He believes in Santa Claus. He believes that in the US dictatorship Quebec and its francofascism would be safe. Remember, MAGA implies hating anyone who speaks anything other than English.

RE: mstdn.social/@TechCrunch/11591…

oh look, another AI chat tool pops on the block.

TechCrunch (@TechCrunch@mstdn.social)

Confer is designed to look and feel like ChatGPT or Claude, but your conversations can't be used for training or advertising. https://techcrunch.

TechCrunch (Mastodon 🐘)

Why Poilievre and Carney Are Silent on Grok’s Child Sexual Abuse

thetyee.ca/Opinion/2026/01/15/…

The former is just in his cesspool, running his con. The latter is just a hypocritical, cowardly elite who would have no problem with Internet legislation when they can't enforce the basics.

Why Poilievre and Carney Are Silent on Grok’s Child Sexual Abuse | The Tyee

The Liberals are afraid of Trump. The Conservatives fear their base.

Eric Van Rythoven (The Tyee)
