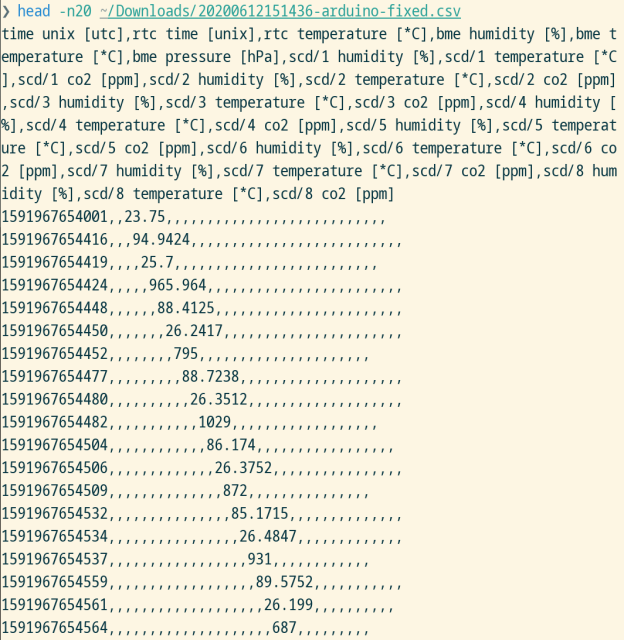

First results of my #compression algorithm benchmark, run on a 72 MB CSV file. It seems #zstd really has something for everybody, though it can't reach #xz's insane (but slow) compression ratios at maximum settings.

This chart includes multithreaded runs for #zstd.

Very interesting! 🧐

gitlab.com/nobodyinperson/comp…

#Python #matplotlib #Jupyter #JupyterLab

Yann Büchau / ⏱️ Compression Algorithm Benchmark · GitLab: A Python script to benchmark file compression algorithms 🗜️
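A minimal sketch of the kind of measurement behind such a chart, assuming Python's standard-library `gzip` and `lzma` (xz format) modules as stand-ins. zstd and lz4 need third-party packages and are left out, the CSV is synthetic, and `make_sample_csv`, `bench` and `run` are hypothetical names, not the linked script's code. Each run reports the compression ratio (original size divided by compressed size) and single-shot throughput in MB/s.

```python
import gzip
import lzma
import time
from functools import partial


def make_sample_csv(rows: int = 20_000) -> bytes:
    """Hypothetical stand-in for a measurement CSV (the real 72 MB file is not reproduced)."""
    lines = ["time,temperature,humidity"]
    for i in range(rows):
        lines.append(f"{i},{20 + (i % 50) / 10:.1f},{40 + i % 30}")
    return ("\n".join(lines) + "\n").encode()


def bench(name, compress, decompress, data: bytes) -> dict:
    """Time one compress/decompress round trip and report ratio and throughput."""
    t0 = time.perf_counter()
    packed = compress(data)
    t1 = time.perf_counter()
    unpacked = decompress(packed)
    t2 = time.perf_counter()
    if unpacked != data:  # lossless codecs must round-trip exactly
        raise ValueError(f"{name}: round trip failed")
    megabytes = len(data) / 1e6
    return {
        "name": name,
        "ratio": len(data) / len(packed),  # original size / compressed size
        "compress_MBps": megabytes / max(t1 - t0, 1e-9),  # guard against a zero interval
        "decompress_MBps": megabytes / max(t2 - t1, 1e-9),
    }


def run(data: bytes, gzip_levels=(1, 6, 9), xz_presets=(0, 6, 9)) -> list:
    """Benchmark gzip at several levels and xz (lzma) at several presets."""
    results = []
    for level in gzip_levels:
        results.append(bench(f"gzip -{level}",
                             partial(gzip.compress, compresslevel=level),
                             gzip.decompress, data))
    for preset in xz_presets:
        results.append(bench(f"xz -{preset}",
                             partial(lzma.compress, preset=preset),
                             lzma.decompress, data))
    return results
```

Calling `run(make_sample_csv())` returns one dict per codec and level. Single timings are noisy, so repeating each measurement and keeping the fastest run is a common refinement. Note that `preset=9` makes the xz encoder allocate far more memory than the other settings.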

Yann Büchau

in reply to Yann Büchau

First let's look only at the plain runs (no `--fast` or multithreading) and use log-log plots to better see what's happening in the 'clumps' of points. Points of interest for me:

- #gzip has a *really* low memory footprint across all compression levels

- #zstd clearly offers the best compromise between decompression speed and compression ratio!

- #xz at higher levels is unrivalled in compression ratio

- Higher #lz4 levels aren't worth it. #lz4 is simply fast.
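"Best compromise" can be made precise with a Pareto front: a configuration is on the front if no other configuration is at least as fast to decompress *and* at least as good in ratio, while being strictly better in one of the two. The front does not depend on the axis scaling, so it is the same on linear and log-log plots. A minimal sketch; `Point`, `dominates` and `pareto_front` are hypothetical names, and the input would be the measured (speed, ratio) points.

```python
from typing import NamedTuple


class Point(NamedTuple):
    name: str
    decompress_MBps: float  # higher is better
    ratio: float            # higher is better


def dominates(q: Point, p: Point) -> bool:
    """True if q is at least as good as p on both axes and strictly better on one."""
    return (
        q.decompress_MBps >= p.decompress_MBps
        and q.ratio >= p.ratio
        and (q.decompress_MBps > p.decompress_MBps or q.ratio > p.ratio)
    )


def pareto_front(points: list) -> list:
    """Return the non-dominated points, sorted by decompression speed."""
    front = [p for p in points if not any(dominates(q, p) for q in points)]
    return sorted(front, key=lambda p: p.decompress_MBps)
```

The quadratic scan is fine for a few dozen codec/level combinations; a codec that "clearly wins" would contribute most of the points on the front.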

Yann Büchau

in reply to Yann Büchau

Repeated the #compression #benchmark with the same file on a beefier machine (AMD Ryzen 9 5950X). The results are nearly identical, just faster overall.

This plot is also interesting:

- #gzip and #lz4 have fixed (!) and very low RAM usage across all levels, for both compression and decompression

- #xz RAM usage scales with the level, from a couple of MB (level 0) to nearly a GB (level 9)

- #zstd RAM usage scales irregularly with the level, but not as extremely as #xz

#Python #matplotlib
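The fixed, low #gzip footprint has a simple explanation if we assume a zlib-based deflate implementation: zlib documents its memory needs (in `zconf.h`) in terms of the window size and `memLevel` only, and the compression level does not enter the formula. The sketch below just evaluates that documented formula; the `gzip` tool used in the benchmark may be a different implementation (GNU gzip has its own deflate code), so this is an illustration, not a measurement. The function names are hypothetical.

```python
import zlib


def deflate_memory_bytes(window_bits: int = zlib.MAX_WBITS,
                         mem_level: int = zlib.DEF_MEM_LEVEL) -> int:
    """zlib's documented compressor estimate, excluding a few KB of small objects."""
    return (1 << (window_bits + 2)) + (1 << (mem_level + 9))


def inflate_memory_bytes(window_bits: int = zlib.MAX_WBITS) -> int:
    """zlib's documented decompressor estimate: the window only (about 7 KB extra not counted)."""
    return 1 << window_bits


# The compression level (1-9) is a separate parameter of zlib.compressobj and is
# not an input here, so every level gets the same estimate.
print(f"compress:   ~{deflate_memory_bytes(15, 8) // 1024} KiB")
print(f"decompress: ~{inflate_memory_bytes(15) // 1024} KiB")
```

With zlib's defaults (`wbits=15`, `memLevel=8`) this gives roughly 256 KiB for compression and 32 KiB for decompression, regardless of level.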

Yann Büchau

in reply to Yann Büchau

My conclusion after all this is that I'll probably use #zstd level 1 (not the default level 3!) for #compression of my #CSV measurement data from now on:

- ultra-fast compression and decompression, on par with #lz4

- nearly as good a compression ratio as #gzip level 9

- negligible RAM usage

When I need really small files, though, e.g. for transfer over a slow connection, I'll keep using #xz level 9.
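For the small-file case, Python's standard `lzma` module writes the same `.xz` container as the `xz` tool, and `preset=9` selects liblzma's level-9 preset. A minimal sketch, assuming the measurement data is already a header plus a list of rows; `write_csv_xz` and `read_csv_xz` are hypothetical helper names.

```python
import csv
import lzma


def write_csv_xz(path, header, rows, preset=9):
    """Write rows as an xz-compressed CSV file (preset=9 corresponds to `xz -9`)."""
    # "wt" opens the compressed stream in text mode; newline="" is what the csv module expects.
    with lzma.open(path, "wt", preset=preset, encoding="utf-8", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(header)
        writer.writerows(rows)


def read_csv_xz(path):
    """Read an xz-compressed CSV file back as a list of string rows."""
    with lzma.open(path, "rt", encoding="utf-8", newline="") as f:
        return list(csv.reader(f))
```

The resulting file can also be unpacked with the `xz` command-line tool. The `csv` reader returns every field as a string, so numeric columns need converting after reading.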