The best way to test the resilience of your service is to reboot the server. I mean it. Don't just restart the service, reboot the whole server. A story in two parts:

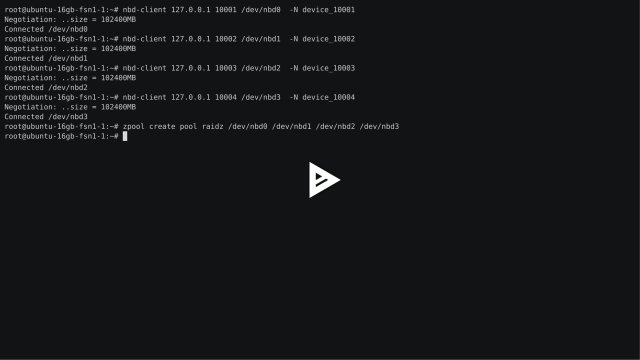

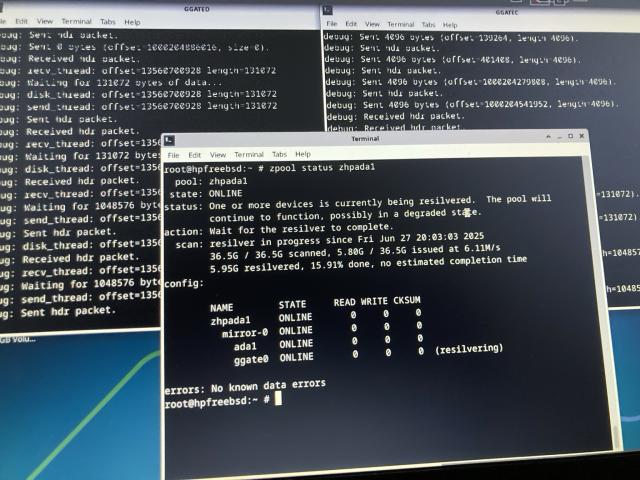

Part 1: a while ago I configured ZFS under my Talos Kubernetes cluster, and everything was fine until I decided to reboot. When it came back, nothing was working, because I had forgotten to configure a way for Talos to read the ZFS volume encryption key.

Part 2: at some point I configured Audiobookshelf to store its data on top of said ZFS volume. Everything worked fine for a few weeks, until I had to reboot the server again (for reasons). When it came back, I had lost all my downloaded podcasts: a typo in my configuration pointed to a directory outside of the PVC, so it was mounted as an emptyDir volume instead.
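A typo like the one in Part 2 is easy to catch before the reboot does it for you. Here is a minimal sketch, not what I actually ran: it only compares path strings and does not talk to Kubernetes at all. The function name and the `/data` and `/podcasts` paths are made up for illustration, so plug in whatever your own app and volume mounts use.

```python
from pathlib import PurePosixPath


def is_under_mount(data_dir: str, mount_paths: list[str]) -> bool:
    """Return True if data_dir is one of the mount paths or lives inside one.

    Pure path comparison: it does not resolve symlinks or ".." segments,
    and it does not check that the mount is actually backed by a PVC.
    """
    data = PurePosixPath(data_dir)
    for raw in mount_paths:
        mount = PurePosixPath(raw)
        # Match the mount path itself or any directory below it.
        if data == mount or mount in data.parents:
            return True
    return False


# Hypothetical example: the PVC is mounted at /data,
# but the app was configured to write podcasts to /podcasts.
pvc_mounts = ["/data"]
print(is_under_mount("/data/podcasts", pvc_mounts))  # True
print(is_under_mount("/podcasts", pvc_mounts))       # False
```

Comparing whole path components rather than string prefixes matters here: `/database` should not count as being inside `/data`.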

Honestly, I should have known better. I've had issues in the past when servers went down because of power failures (the battery didn't last) and did not come back properly.

You gotta do a reboot/power test every once in a while, just like you have to test your backups on a regular basis.

#HomeLab #TalosLinux #ZFS #SRE #DevOps @homelab