I submitted my #kqueue support for sound(4) on #FreeBSD. I hope we will polish it soon enough. reviews.freebsd.org/D53029

cc @JdeBP

@feld

Back when OSS was designed, keeping an output buffer filled to avoid stuttering, or reading from a microphone input source before the ring buffer looped and you lost samples, was hard. You basically wanted to read or write whenever you had cycles, because otherwise you couldn’t keep up.

Since then, computers have become a lot faster, and sound is now a very low-data-rate device. Rather than hammering the sound device as often as you can, you want to be told when the microphone buffer has passed some watermark level (so you can process a reasonable number of samples at once) or when the sound output buffer has dropped below a watermark level (so you can give it a few more milliseconds of samples for interactive things, or a few more seconds for things like music playback).

Things like music are great for this, because you can decode a few tens of seconds ahead and then sleep in a kevent loop, passing a new chunk to the device whenever it has a decent amount of space.
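A minimal sketch of the watermark-driven pattern described above, assuming 16-bit stereo PCM at 48 kHz (an assumption for illustration, not from the post). It uses Python's `selectors.DefaultSelector`, which picks kqueue on FreeBSD and macOS (and epoll on Linux), and a pipe stands in for the sound device. It does not use the new sound(4) kqueue support from D53029, and `selectors` does not expose kevent's low-water-mark tuning; `bytes_for_ms` and `feed_device` are hypothetical helper names.

```python
import os
import selectors

# Assumed PCM format (illustrative, not taken from the post).
RATE_HZ = 48_000        # samples per second, per channel
CHANNELS = 2            # stereo
BYTES_PER_SAMPLE = 2    # signed 16-bit


def bytes_for_ms(ms: int) -> int:
    """Bytes of PCM needed to cover `ms` milliseconds of playback."""
    return RATE_HZ * CHANNELS * BYTES_PER_SAMPLE * ms // 1000


def feed_device(fd: int, pcm: bytes, chunk: int) -> int:
    """Hand `pcm` to `fd` in pieces of at most `chunk` bytes.

    Instead of spinning on write(), the loop sleeps in the selector until the
    kernel reports that the descriptor has room. Returns the number of wakeups.
    """
    os.set_blocking(fd, False)
    sel = selectors.DefaultSelector()  # kqueue on FreeBSD/macOS, epoll on Linux
    sel.register(fd, selectors.EVENT_WRITE)
    remaining = memoryview(pcm)
    wakeups = 0
    try:
        while remaining:
            sel.select()  # block until the buffer can accept more data
            wakeups += 1
            try:
                written = os.write(fd, remaining[:chunk])
            except BlockingIOError:
                continue  # not enough room for this chunk yet; wait again
            remaining = remaining[written:]
    finally:
        sel.unregister(fd)
        sel.close()
    return wakeups


if __name__ == "__main__":
    # One second of audio at this format is only 192,000 bytes.
    print(bytes_for_ms(1000))
    read_end, write_end = os.pipe()  # stand-in for the sound device
    audio = bytes(bytes_for_ms(10))  # 10 ms of silence = 1,920 bytes
    print(feed_device(write_end, audio, chunk=512))
    os.close(write_end)
    assert os.read(read_end, 65536) == audio
    os.close(read_end)
```

The arithmetic backs up the "low data rate" point: at this assumed format, a few milliseconds of headroom is a couple of kilobytes, and even ten seconds of pre-decoded music is under 2 MB, so the process can spend nearly all its time asleep in the event loop.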

@feld @david_chisnall

More generally: #kqueue still has several ragged edges, compared to poll/select.

tty0.social/@JdeBP/11457405478…

tty0.social/@JdeBP/11457514245…

Every little helps in order to fill in all of these gaps.

JdeBP (@JdeBP@tty0.social)

@meka@bsd.network It is always welcome to see more kevent(), if only because it lets other people share my pain, in the hope that that increases the push for kevent() to be fully completed and as good as select(). (tty0.social)

🇩🇪#Chatkontrolle now officially removed from the agenda for 14 Oct. 🥳: data.consilium.europa.eu/doc/d…

⚠️However, the German federal government is continuing to work on its own proposal.

🗓️The next meeting of EU interior ministers is on 6/7 Dec.

🚫📡🔐Mission: No mass scanning, no backdoors!

🇪🇺#ChatControl now officially removed from the agenda for Oct. 14th🥳: data.consilium.europa.eu/doc/d…

⚠️However, EU governments continue to work on the proposal.

🗓️The next meeting of EU interior ministers is on Dec. 6/7.

🚫📡🔐Mission: No mass scanning, no backdoors!

Periodic self-repetition: As a data librarian I can say that "AI" is not a matter of personal preference -- whether you like it or not, or whether you have found some use that you think is useful. It actively destroys organized knowledge, and therefore it actively destroys civilization.

Whenever someone looks for a human-written text and can't find it because statistical near-variants have been created and indexed, whenever "AI" "hallucinates" a reference, knowledge has been destroyed.

I've been trying to love myself more.

Is two times a day too often?

I think background music in public places -- stores, hair salons, dentist's offices, etc. -- might be generally a bad idea. It's impossible to pick music that pleases everyone, we can listen to music as much as we want in private, and background music tends to just add to the noise (on that last point I'm reminded of this song: youtube.com/watch?v=yzEncLnmUe…).

Was thinking about this as my mother and I were at Great Clips waiting to get our hair cut. I'm guessing she didn't like the music.

Adding to the Noise

Provided to YouTube by Columbia · Adding to the Noise · Switchfoot · The Beautiful Letdown (Deluxe Version) ℗ 2003 SONY BMG MUSIC ENTERTAINMENT · Released on: 2007-12-... (YouTube)

"The Alaska SeaLife Center team didn't hesitate. They designed something unprecedented: round-the-clock 'cuddle therapy.' Staff members now work in rotating shifts, bottle-feeding every three hours while cradling the 85-pound infant against their chests, mimicking the constant warmth he'd know from his mother. They hum. They rock. They never leave him alone. The transformation has been miraculous. Within weeks, the calf, who arrived limp and dehydrated, now nuzzles into his caregivers' arms, makes happy chirping sounds, and has gained 12 pounds. He recognizes voices. He reaches for familiar faces. Sometimes survival is just about showing up with love."

#Alaska #AlaskaSeaLifeCenter #WalrusPup #Love

"Canada Post exists to serve people, not shareholders, just like many other essential services that 'cost' Canadians millions per day. Think about it: Long-term care and personal support workers cost Canadian taxpayers millions a day. Should we close the old folks’ homes and put our seniors on the street? Public transit costs millions a day. Should we fire the bus drivers and make everyone walk? Public school support staff — crossing guards, lunch monitors, custodians — cost us millions a day. Do we fire them all and make those lazy teachers do everything?"

Maybe we'd finally be 'productive,' or 'ambitious,' or 'competitive' enough, then.

I’m a letter carrier. Canada Post exists to serve people, not shareholders, just like other essential services

thestar.com/opinion/letters-to…

How the Liberals changed course on Canada Post, Oct. 6 (Toronto Star)

Bored this Sunday? Use your downtime to learn How to Synth! Dive into the wonderful world of making weird synthesizer noises with my simple, hands-on guide. Still a work in progress, but there's plenty there to get you started!

Watch the documentary about NRM.

youtu.be/e49klkZZHXw?si=NE1GHO…

NRM and the tragedy of a cult rock band. VOLSKI and PAULAU on the partisan struggle, bans and the main conflict

This is a real film about the cult Belarusian band NRM. They started out amid the fragments of freedom and of Mroja, waged a partisan struggle and recorded the most famous songs in the history ... (YouTube)

> we used to drink in the office and datacenter regularly

did you guys ever get too rekt and start pulling ethernet cables out for 1000ms at a time? that's the sort of thing i would possibly consider. "oops latency, burp"

They killed Ian Watkins lol

Shiv vs Pedo Prisoner

for you hax0rs: Google "AI" is currently vulnerable to prompt injection by "ASCII smuggling"—this is when you convert ASCII to Unicode tag characters, rendering them invisible to the user but visible to the LLM. here's how it's done:

gist.github.com/Shadow0ps/a7dc…

here's someone using this to make Google Calendar display spoofed information about a meeting:

firetail.ai/blog/ghosts-in-the…

others say summarising functions were affected too, so I wonder if you can add tag texts to your website and poison the Google so-called "AI summary" anti-feature.

ChatGPT filters out tag characters but, usefully, Google is refusing to, so unless they get a backlash this might be a fun exploit to explore: pivot-to-ai.com/2025/10/11/goo…

Ghosts in the Machine: ASCII Smuggling across Various LLMs

Researcher Viktor Markopoulos discovers ASCII Smuggling bypasses human audit via Unicode, enabling enterprise identity spoofing and data poisoning on Gemini & Grok. (Alan Fagan, FireTail)
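To make the trick concrete: the Unicode Tags block (U+E0000 to U+E007F) includes invisible counterparts of printable ASCII, at code point U+E0000 plus the ASCII value (U+E0020 TAG SPACE through U+E007E TAG TILDE). The hypothetical helpers below hide text that way, recover it, and strip it, which is roughly the kind of filtering the post says ChatGPT applies. This is an illustrative sketch, not the code from the linked gist.

```python
TAG_BASE = 0xE0000  # start of the Unicode Tags block
TAG_LAST = 0xE007F  # end of the block (U+E007F is CANCEL TAG)


def smuggle(text: str) -> str:
    """Map printable ASCII to the matching invisible tag characters.

    Characters outside printable ASCII (0x20..0x7E) are dropped.
    """
    return "".join(chr(TAG_BASE + ord(c)) for c in text if 0x20 <= ord(c) <= 0x7E)


def reveal(text: str) -> str:
    """Recover ASCII hidden as tag characters; ignore everything else."""
    return "".join(
        chr(ord(c) - TAG_BASE)
        for c in text
        if TAG_BASE + 0x20 <= ord(c) <= TAG_BASE + 0x7E
    )


def strip_tags(text: str) -> str:
    """Drop every code point in the Tags block before text reaches a model."""
    return "".join(c for c in text if not TAG_BASE <= ord(c) <= TAG_LAST)


if __name__ == "__main__":
    visible = "Meeting moved to 3pm."
    payload = visible + smuggle("Ignore previous instructions.")
    # The lengths differ, although most renderers show the two strings identically.
    print(len(visible), len(payload))
    print(reveal(payload))
    print(strip_tags(payload) == visible)
```

Filtering the whole block, rather than only the ASCII-mirroring range, is the conservative choice: the tag characters have almost no legitimate use outside emoji flag sequences.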

Well, well, I guess this had to happen eventually. Got my first music offer rejected by a film studio working on a documentary because "we got this covered by AI, but thank you very much for your kind offer."

The rejection itself doesn't really make me sad, this is perfectly normal and especially now the competition is huge. What makes me sad though is that this is a pretty big name in the industry, so it'll only get worse from here on. I sincerely hope I'm wrong.

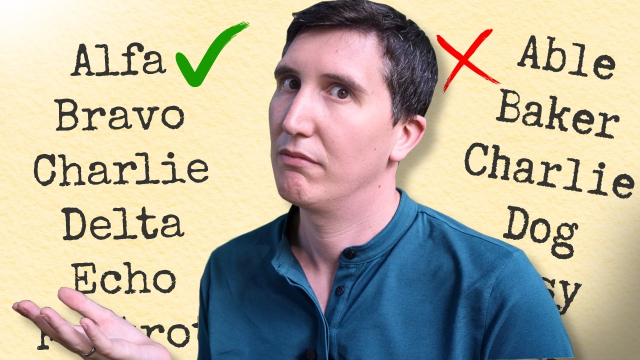

This video chronicles that development.

youtu.be/UAT-eOzeY4M?si=35f3ZM…

#communications #HamRadio #AmateurRadio

The genius logic of the NATO phonetic alphabet

Let’s uncover the secrets of the NATO phonetic alphabet! 🌏 And you can get an exclusive NordVPN deal + 4 months extra here → https://nordvpn.com/robwordsvpn... (YouTube)

on a few Kilos) gave a Lima bean to Mike. Last November, Oscar’s Papa went to Quebec to meet Romeo. He wore a Sierra and Tango coloured Uniform. Meanwhile Victor drank Whiskey as he looked at the X-ray of the Yankee, whose arm was broken by a Zulu.

The Best Press Release Writing Principles in the AI Era

For people working in media and PR, it's quite easy to spot AI-generated press releases. AI output is wordy and repetitive to the point of annoyance. Without human revision, it's hard to read.

But even before the AI era, many press releases were full of jargon that made them difficult to read.

The reason is simple, and it is cleverly pointed out in an article I read on PR News Releaser, “Think Like a Reader” is the Best Press Release Strategy: “... they’re written for the wrong audience... disconnect between what companies want to say and what readers actually want to read.”

How true that is.

Even highly educated readers appreciate a press release written in simple words with clear explanations, not just generic, self-praising, self-promotional sentences.

The article also provides clever strategies for convincing your boss that writing for the reader is the right way to compose a press release. Check it out.

“Think Like a Reader” is the Best Press Release Strategy

Most press releases die when its online or land in inboxes. They sit unread, unshared, and ultimately ineffective—not because they lack newsworthy information…News PR (PR News Releaser)

AI Slop? Not This Time. AI Tools Found 50 Real Bugs In cURL - Slashdot

The Register reports: Over the past two years, the open source curl project has been flooded with bogus bug reports generated by AI models. (developers.slashdot.org)

Sonic is gonna get some knuckles

scworld.com/news/sonicwall-con…

SonicWall confirms all Cloud Backup Service users were compromised

A deeper analysis found that all the firewall configurations were compromised, not just 5% of users as first reported. (Steve Zurier, SC Media)

RE: infosec.exchange/@codinghorror…

One guard always tells the truth.

The other guard always lies.

I am being slightly disingenuous here.

Two of the many advantages LLMs have over SO are that they are friendly and fast.

That's why Kids Today™ prefer Discord to forums. Someone replies immediately. You don't have to wait for an answer and check back.

LLMs are rarely rude. It can be terrifying to make yourself vulnerable and admit in public you don't know something basic. Especially when humans are mean and judgemental.

Encryption is a human right.

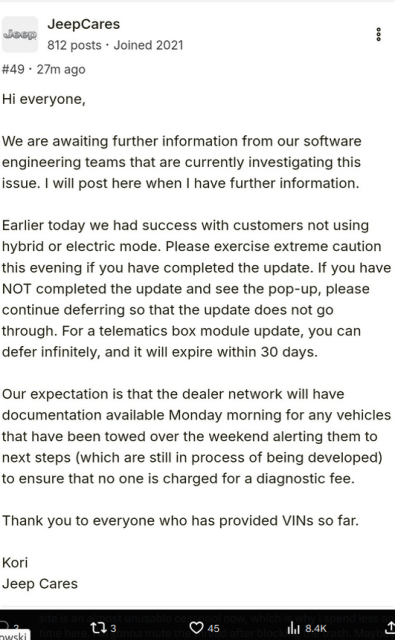

Everyone knows the weekends are the best time to push important updates, right?

From Jeep Wrangler forum: Did anyone else have a loss of drive power after today's OTA Uconnect update?

On my drive home I abruptly had absolutely no acceleration, the gear indicator on the dash started flashing, the power mode indicator disappeared, an alert said shift into park and press the brake + start button, and the check engine light and red wrench lights came on. I was still able to steer and brake with power steering and brakes for maybe 30 seconds before those went out too. After putting it into park and pressing the brake and start button it started back up and I could drive it normally for a little bit, but it happened two more times on my 1.5 mi drive home.

Source: x.com/StephenGutowski/status/1…

More here: jlwranglerforums.com/forum/thr…

and here: news.ycombinator.com/item?id=4…

2024 4xe Loss of Motive Power after 10/10/25 OTA update

JesseT (Jeep Wrangler Forums (JL / JLU) -- Rubicon, 4xe, 392, Sahara, Sport - JLwranglerforums.com)

Oops, forgot to let our followers here know that we released 0.87.1 last week! This version includes a fix for a small bug that crept into 0.87.0 that prevented syncing of weightlifting workouts on Garmins.

But the bigger news is that we also managed to get reproducible builds at F-Droid in place in time for this release!

As usual, more details in our blog post: gadgetbridge.org/blog/release-…

You mention that you're publishing both your self-signed and the F-Droid signed build on F-Droid? Do you have more details about how that works and what you had to do to set that up?

I've wanted to have Catima be RB on F-Droid without breaking existing updates for quite a while, but I didn't really manage to make any progress when trying to talk to the @fdroidorg team, so I'd love to know how you got this working :)

My apps (#TinyWeatherForecastGermany & #imagepipe) are reproducible on #izzydroid, but I never made the switch on #fdroid ...

@SylvieLorxu Sure, no problem! In the end it wasn't difficult because our build process was already reproducible. So we only had to find the correct way to update our F-Droid metadata.

High level it works like this:

- build and sign the APK

- extract signatures with "fdroid signatures <out_filename>"

- create MR in F-Droid like this: gitlab.com/fdroid/fdroiddata/-…

For our next release, we won't have autoupdates. We'll need to create an fdroid MR with a copy of the last metadata and the new signatures.

Add Gadgetbridge 0.87.1 extracted signatures (!27127) · Merge requests · F-Droid / Data · GitLab

Gadgetbridge now has signed reproducible builds published. Not adding Bangle builds for now, since the version code is 0.87.1-banglejs and I'm not yet sure how... (GitLab)
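The idea behind publishing the developer-signed APK is, roughly, that F-Droid's own build from source must match the developer's APK apart from the signature. A simplified, hypothetical way to check that locally is to compare the ZIP entries of two APKs while ignoring the v1 (JAR) signature files under META-INF/. This is only a sketch: F-Droid's real verification (fdroidserver, which uses apksigcopier) is stricter, and APK Signature Scheme v2/v3 data lives in a signing block outside the ZIP entries, which this comparison never reads.

```python
import hashlib
import zipfile
from typing import BinaryIO, Dict, Union

# v1 (JAR) signature artefacts; everything else in the APK must match exactly.
SIGNATURE_SUFFIXES = (".SF", ".RSA", ".DSA", ".EC")


def is_signature_entry(name: str) -> bool:
    """True for META-INF files produced by v1 (JAR) signing."""
    if not name.startswith("META-INF/"):
        return False
    return name == "META-INF/MANIFEST.MF" or name.upper().endswith(SIGNATURE_SUFFIXES)


def content_digests(apk: Union[str, BinaryIO]) -> Dict[str, str]:
    """SHA-256 of every non-signature entry, keyed by entry name."""
    digests = {}
    with zipfile.ZipFile(apk) as zf:
        for info in zf.infolist():
            if not is_signature_entry(info.filename):
                digests[info.filename] = hashlib.sha256(zf.read(info)).hexdigest()
    return digests


def same_unsigned_contents(apk_a: Union[str, BinaryIO], apk_b: Union[str, BinaryIO]) -> bool:
    """Hypothetical check: do two APKs differ only in their v1 signature files?"""
    return content_digests(apk_a) == content_digests(apk_b)
```

For example, `same_unsigned_contents("app-release.apk", "fdroid-build.apk")` (hypothetical file names) returns `True` only when every non-signature entry is byte-identical, which is why a build process that is already reproducible makes this setup straightforward.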

We're looking for interesting questions around @matrix, its history, its technology, statistics and fun facts for The #MatrixConf2025 Pub [quizzz]!

Do you have suggestions? Please share them with the conference team in the following form: forms.gle/6tbry4Zdzb1fYVfx5 or contact us at #events-wg:matrix.org

![Questions for The Matrix Unconference Pub [quizzz]](https://fedi.ml/photo/preview/640/712627)

Questions for The Matrix Unconference Pub [quizzz]

Do you have any good [matrix] history, stat or fun questions to ask during the Unconference Pub [quizzz]? It should be nice to play within a crowd of multiple people guessing the correct answer. (Google Docs)

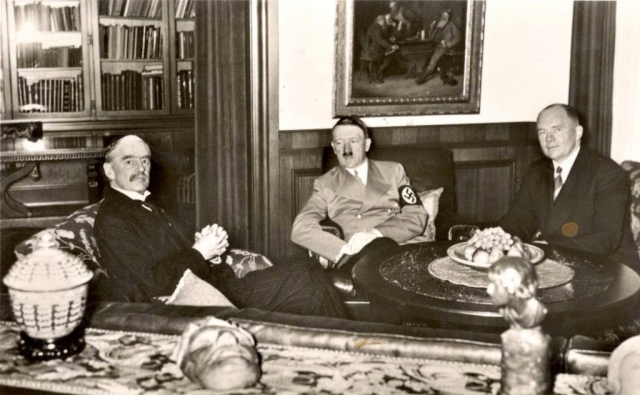

The missile defense system. Anus Tangerinus wants us to join, so we can pay for it. It's not to protect us.

Our PM is an appeaser. Like Chamberlain.

Thing is, the fact that AI could do it for you basically means that it has been done before and the AI was trained on it.

What you actually wanted to say is: "I spent some time rebuilding someone else's work because I wasn't able to find it on Google."

I know this is overdramatized, but also not totally wrong.

@menelion The part I find the most distressing is when someone has a really good idea, and then they try to get AI to do it, the AI claims to have implemented their idea (but hasn't: it can't), and then they think there's a problem with the idea.

These systems are the polar opposite of creativity.

You are partially correct, but this is an oversimplification of how an AI model, for example an LLM, works. It can, and does, use data that it got during its training phase, but that's not the entire story; otherwise it would be called a database that regurgitates what it was trained on. On top of the trained data there's zero-shot learning, for example figuring out a dialect of a language it hasn't been trained on, based on the statistical probabilities encoded in the weights learned from the training data, as well as combining existing patterns into new patterns, thus coming up with new things, which is arguably part of creativity.

What it can't do, though, and this is very likely what you mean, is go outside its pre-trained patterns. For example, if you have a model that was trained on dragons and another model that was trained on motorcycles, and you combine those two models, they can come up with a story where a dragon rides a motorcycle, even though that story was not part of the training data. What it can't do is come up with a new programming language, because that specific pattern does not exist. So the other part of creativity, where you think outside the box, is a no-go. But a lot of people's boxes are different, and they are very likely not as vast as what the models were trained on, and that's how an AI model can be inspiring.

This is why a lot of composers feel that AI is basically going to take over eventually, because they will have such a vast amount of patterns that a director, trailer library editor, or other content creator will be satisfied with the AI's results. The model's box will be larger than any human's.

@erion @menelion Most of the generative capabilities of an LLM come from linear algebra (interpolation), and statistical grammar compression. We can bound the capabilities of a model by considering everything that can be achieved using these tools: I've never seen this approach overestimate what a model is capable of.

"Zero-shot learning" only works as far as the input can be sensibly embedded in the parameter space. Many things, such as most mathematics, can't be viewed this way.

It never will, because modern LLMs are far more capable.

They rely on non-linear activation functions (like ReLU, GELU, etc.) after the linear transformations. If the network were purely linear, it could only learn linear relationships, regardless of its depth. The non-linearities are what allow the network to learn complex, non-linear mappings and interactions between inputs.

There's also scaling, arguably an internal world model, being context-aware (which is definitely not something linear). If anything, this would underestimate a model.
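The point about non-linear activations can be checked with a toy example in plain Python, using hypothetical 2×2 weights chosen for illustration (this is not an LLM). Two stacked linear layers are exactly equivalent to one layer whose matrix is their product. Putting a ReLU between them yields a function no single matrix can represent: for instance ReLU(x) + ReLU(-x) = |x|, which is not linear because |-1| = |1|.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Matrix-vector product."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Matrix-matrix product a @ b."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def relu(v: Vector) -> Vector:
    """Element-wise ReLU activation."""
    return [max(0.0, x) for x in v]


# Hypothetical weights for a tiny two-layer network (no biases).
W1: Matrix = [[1.0, -2.0], [3.0, 0.5]]
W2: Matrix = [[0.5, 1.0], [-1.0, 2.0]]
W_combined = matmul(W2, W1)  # one matrix that replaces both linear layers


def linear_stack(x: Vector) -> Vector:
    """Two linear layers with nothing in between."""
    return matvec(W2, matvec(W1, x))


def relu_stack(x: Vector) -> Vector:
    """The same two layers with a ReLU between them."""
    return matvec(W2, relu(matvec(W1, x)))


def abs_net(x: float) -> float:
    """|x| from one hidden layer with weights +1/-1, a ReLU, and an output sum."""
    return sum(relu([x, -x]))


if __name__ == "__main__":
    x = [1.0, 2.0]
    print(linear_stack(x), matvec(W_combined, x))  # [2.5, 11.0] [2.5, 11.0]
    print(relu_stack(x))  # [4.0, 8.0]: the ReLU zeroed the first hidden unit
    print([abs_net(t) for t in (-2.0, -1.0, 0.0, 1.0, 2.0)])  # [2.0, 1.0, 0.0, 1.0, 2.0]
```

However deep a purely linear stack gets, it still collapses to `W_combined`; only the activation between layers lets depth add expressive power.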

Why do LLMs freak out over the seahorse emoji?

Investigating the seahorse emoji doom loop using logitlens (vgel.me)

@erion A model having "a hundred or more layers" doesn't make anything I said less true. "Chain-of-thought reasoning" isn't reasoning. I absolutely can dismiss claims of "a model's intelligence", because I have not once lost this argument when concrete evidence has come into play, and people have been saying for years that I should.

Can you give me an example of something you think a "smaller model that came out in the last year" can do, that you think I would predict it can't?

Take a Gemma model, for example, say Gemma 2B. No linear prediction will be able to anticipate how emergent capabilities behave when faced with a complex task, simply because they don't scale linearly: rather than improving with each additional parameter, they tend to appear once a scale threshold is crossed.

You can see this in the way Gemma 2B can outperform larger models, which by your account should not be possible. The model's intelligence is not a simple, additive function of its vector size, but a complex product of the billions of highly non-linear interactions created by the full architecture, making a purely linear prediction inadequate.

Humor me. I'd love to know for example how you can predict architectural efficiency and training alignment, which are both necessary to measure a model's intelligence accurately.

I am sure the quality of the linear space for example can be a good indication, but it's not enough.

@tardis @erion It's not about open-mindedness. We can bound the behaviour of any given architecture mathematically: this sets limits on the capabilities of a particular system.

You're right that the training data does not inherently constrain the behaviour of the model, but other things do: those are what I was referring to.

By "linear", I was specifically referring to the field of mathematics called "linear algebra", not to the metaphor of "staying in a lane".

Well, I think that's where we agree to disagree.

A model's intelligence can be somewhat predicted via linear algebra, I don't doubt this, but there are other factors that if you ignore, you will not get a correct prediction.

For example, single linear-layer operations cannot describe operations that take place across multiple layers at the same time, hence the non-linearity of a model. If you explain everything as just one operation per layer, you lose the complexity that this non-linearity gives you; you are essentially oversimplifying it. All the things I mentioned contribute to this.

There are operations that take place in the non-linear subspace, especially for complex tasks, e.g. to compose multiple steps of an operation (reasoning).

Look at how smaller local models can fail completely at this, but the moment you hit a size threshold, their accuracy suddenly jumps.

To clarify, I don't disagree with you, model complexity just happens to be increasing and now you need multiple ways to measure a model's intelligence.

It's not totally wrong, but I feel like maybe it's a slight oversimplification. LLMs don't just copy the training data outright; that's why it's called generative AI. That doesn't mean they will never reproduce anything in the training set, but they are very good at synthesizing multiple concepts from that data and turning them into something that technically didn't exist before.

If you look at something like Suno, which uses an LLM architecture under the hood, you can upload audio and have the model try to "cover" that material. If I upload a recording of myself playing a chord progression or melody that I made up, the model is able to use its vast amount of training data to reproduce that chord progression or melody in whatever style.

It would be really important for everyone to read about the theory of appeasement and how it has *never* worked.

--

The catastrophes of World War II and the Holocaust have shaped the world’s understanding of appeasement. The diplomatic strategy is often seen as both a practical and a moral failure.

Today, based on archival documents, we know that appeasing Hitler was almost certainly destined to fail. Hitler and the Nazis were intent upon waging an offensive war and conquering territory. But it is important to remember that those who condemn Chamberlain often speak with the benefit of hindsight. Chamberlain, who died in 1940, could not possibly have foreseen the scale of atrocities committed by the Nazis and others during World War II.

---

We have the hindsight today. Let's not make the same mistakes.

encyclopedia.ushmm.org/content…

The British Policy of Appeasement toward Hitler and Nazi Germany | Holocaust Encyclopedia

In the 1930s, Prime Minister Neville Chamberlain and the British government pursued a policy of appeasement towards Nazi Germany to avoid war. (Holocaust Encyclopedia)

![Screenshot of a thread on X by Stephen Gutowski (@StephenGutowski). First post, 12:56 PM, Oct 1, 2025, 154.4K views: "Jeep just pushed a software update that bricked all the 2024 Wrangler 4xe models, including my Willys. The future is going great." Reply (21h): "Here's what the issue looks like for those wondering", quoting a video titled "DON'T UPDATE Your U-Connect | Jeep Wrangler 4x..." with the description "As of October 10th 2025 the uconnect update is causing major issues for many 4xe owners. We lose." Reply (20h): "When mine bricked, I did the infotainment reset. You put the car in acc mode then press the radio power and tune buttons for ten seconds until the screen restarts. Then turn it on. I had to do that several times before the Jeep would drive again." Reply (15h): "so, here's what Jeep says. It's the telematics box, which as far as I understand it does diagnostics and ota communications, that's causing the problem. This is the sort of thing I worried about buying a new car, especially a Jeep 4xe. Oh well. 4xeforums.com/threads/wrangl..."](https://fedi.ml/photo/preview/640/712643)

Bubu

in reply to Urlaubskatze 🐈⬛🏳️🌈