A thought that popped into my head when I woke up at 4 am and couldn’t get back to sleep…

Imagine that AI/LLM tools were being marketed to workers as a way to do the same work more quickly and work fewer hours without telling their employers.

“Use ChatGPT to write your TPS reports, go home at lunchtime. Spend more time with your kids!” “Use Claude to write your code, turn 60-hour weeks into four-day weekends!” “Collect two paychecks by using AI! You can hold two jobs without the boss knowing the difference!”

Imagine if AI/LLM tools were not shareholder catnip, but a grassroots movement of tooling that workers were sharing with each other to work less. Same quality of output, but instead of being pushed top-down, they’d be adopted to empower people to work less and “cheat” employers.

Imagine if unions were arguing for the right of workers to use LLMs as labor-saving devices, instead of trying to protect members from the damage they cause.

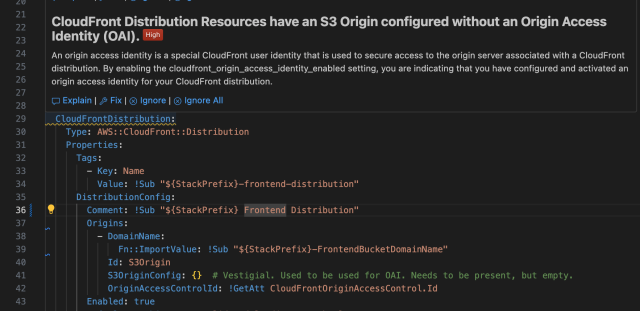

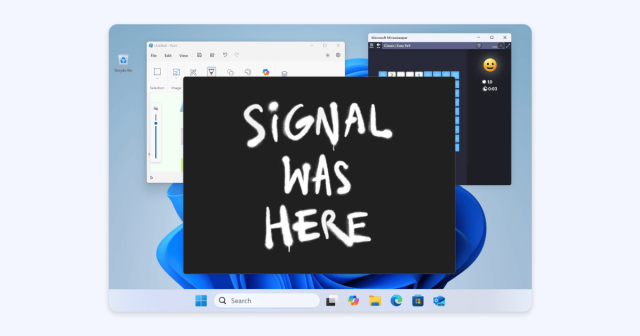

CEOs would be screaming bloody murder. There’d be an overnight industry in AI-detection tools and immediate bans on AI in the workplace. Instead of Microsoft 365 Copilot, Satya would be out promoting Microsoft SlopGuard: add-ons that detect LLM tools running on Windows and prevent AI scrapers from harvesting your company’s valuable content for training.

The media would be running horror stories about the terrible trend of workers getting the same pay for working less, and the awful quality of LLM output. Maybe they’d still call them “hallucinations,” but it’d be in the terrified tone of 80s anti-drug PSAs.

What I’m trying to say in my sleep-deprived state is that you shouldn’t ignore the intent and ill effects of these tools. If they were good for you, shareholders would hate them.

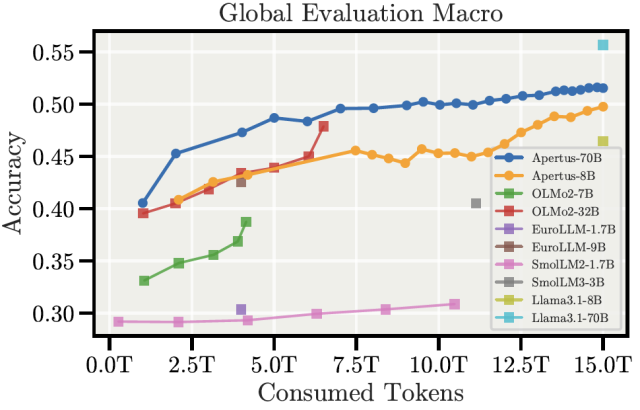

You should understand that they’re anti-worker and anti-human. TPTB would be fighting them tooth and nail if their benefits were reversed. It doesn’t matter how good they get, or how interesting they are: the ultimate purpose of the industry behind them is to create less demand for labor and aggregate more wealth in fewer hands.

Unless you happen to be in a very, very small club of ultra-wealthy tech bros, they’re not for you; they’re against you. #AI #LLMs #claude #chatgpt