
Items tagged with: LLM

#LLM #AI #ML

youtu.be/0zdLr7xiOFw?si=WRF03V…

x.com/bcherny/status/200488782…

Claude Code is Now Writing Claude Code

xAI accelerates the compute arms race with a third MacroHarder facility targeting nearly two gigawatts of training power and hundreds of thousands of GPUs. O... (YouTube)

Add experimental MLX backend and engine with imagegen support by dhiltgen · Pull Request #13648 · ollama/ollama

Add experimental support for a new MLX-based backend and image generation model support. (GitHub)

As I suspected it would be, my bug bounty submission about abusing an AI email summarizer was closed as 'infeasible' and an 'acceptable risk' with AI.

But I still think it's an interesting finding, so I have written it up here: mike-sheward.medium.com/recrui…

TL;DR: I discovered how Google Workspace's Gemini email summarizer can be used to make a phishing attack seem more convincing, because it summarizes hidden content.

Criticism of OpenAI/LLMs

"Hey ChatGPT, I want a new TV. I heard that LG TVs are good. Which LG TV is the best?"

"Good choice! You are absolutely right that LG TVs are a popular choice. But have you heard about [brand] TVs? [brand] TVs are [advertisement here]. Do you want me to show you where you can get a good deal on the [brand] XY-1234?"

This is the future.

ChatGPT will soon generate answers that have advertisements woven into them. What ChatGPT will tell you will be influenced by which company paid OpenAI the most.

I wonder how many people won't be bothered by that at all and will keep using ChatGPT, just as many people (mostly boomers) aren't bothered by ad breaks on cable TV and keep finding excuses to use the product.

futurism.com/artificial-intell…

OpenAI is still struggling to make a profit off of this crappy technology. And that's after announcing an "erotic chat mode", making ChatGPT "more horny". In a last-ditch effort to stave off the inevitable bursting of the AI bubble, OpenAI is turning to the only reliable profit model they know: enshittifying products with ads. LLM technology is a dying technology, much like 2021's NFTs, which nobody hears about anymore. Maybe the AI bubble won't burst in 2026 just yet, if enough naive people keep using ChatGPT that the new ad revenue keeps OpenAI afloat a little while longer. But the bubble will burst. Betting a business on this technology in 2026 isn't an intelligent move, to say the least. If your employer is fully committed to the AI hype, consider looking for a new job … while you still receive a salary and can take the job search more casually.

OpenAI Reportedly Planning to Make ChatGPT “Prioritize” Advertisers in Conversation

OpenAI employees working on ChatGPT report plans to unleash sponsored advertisements above organic results. (Joe Wilkins, Futurism)

#AI / #LLM propaganda is so insidiously effective, even on laypeople.

I’ve had multiple conversations with family members who: don’t speak English, don’t own computers (only mobile phones), and barely spend time online.

I told them that I am no longer working with most tech company clients because I don’t like AI and don’t want to support it (“AI” here = gen AI, LLMs).

And yet these people all reacted the same way: concern, shock, and comments like “but this is inevitable”, “this is the future”, “you’ll have to accept it eventually”, “won’t refusing it ruin your career prospects?”

These are people who know nothing about technology. They usually wouldn’t even know what “AI” meant. And yet here they are, utterly convinced of AI company talking points.

I don't know if I use LLMs differently from other people, but they give me very good results as a learning vehicle, in a way that would (literally) be impossible for me otherwise. Long toot, but I had to say it.

Let me tell you about my concrete experience. For a while now I've been following a plan to improve my mathematics. With an LLM I have been able to learn formal mathematics rigorously, verifying the proofs myself, something that without this tool was materially impossible for me for accessibility reasons.

After a few months, I've managed to learn a lot of logic I didn't know (CNF, BNF, converting formulas to prenex form, semantics, the soundness theorem for natural deduction over first-order formulas, proved by induction on the height of the derivation). Proof strategies: contrapositive, contradiction, induction, strong induction, and structural induction. In real analysis, I have a good grasp of limits of sequences and functions, continuity, and fundamental theorems such as Bolzano's theorem, the convergence of bounded monotone sequences, the intermediate value theorem, and the extreme value theorem. Not only do I understand them, I can write the proofs of these theorems on my own. I came to understand how complex numbers work as representations of rotations of the plane, roots of complex numbers, and why all of trigonometry simplifies enormously with these tools. In linear algebra, I learned how to compute determinants recursively, as well as Gauss-Jordan elimination, Gram-Schmidt, projection, and QR. But even more importantly, I learned to understand linear algebra from the point of view of a linear map T on a vector space V over a field F, independent of any basis, rather than starting from the concrete representation of a matrix. I learned that the determinant of a linear map is a multilinear, alternating, normalized function from the images of an ordered basis to a scalar, representing the factor by which the map scales oriented volume. I worked through expanding a function of this kind over the 2D basis ((1,0), (0,1)) and watched the permutations emerge from it, all the way to the familiar formula for the determinant of a 2x2 matrix (ad-bc).
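The 2D expansion described above can be written out as follows. This is a sketch of the standard derivation, assuming only the three properties named in the post (multilinear, alternating, and normalized so that $D(e_1, e_2) = 1$, with $e_1 = (1,0)$ and $e_2 = (0,1)$), and writing the images of the basis vectors as the columns of the matrix $\begin{pmatrix} a & b \\ c & d \end{pmatrix}$:

$$
\begin{aligned}
D(T e_1, T e_2) &= D(a e_1 + c e_2,\; b e_1 + d e_2) \\
&= ab\,D(e_1, e_1) + ad\,D(e_1, e_2) + cb\,D(e_2, e_1) + cd\,D(e_2, e_2) \\
&= ab \cdot 0 + ad \cdot 1 + cb \cdot (-1) + cd \cdot 0 \\
&= ad - bc.
\end{aligned}
$$

The terms with a repeated basis vector vanish because $D$ is alternating, and the two surviving terms correspond to the two permutations of $\{1, 2\}$: the identity, with sign $+1$, and the swap, with sign $-1$.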

None of this was easy, and it wasn't free of frustration. People often say that the point of an LLM is to eliminate the frustration and difficulty that make learning happen. Well, I don't know; if it's used that way, maybe so, but I had days when I thought I couldn't handle this. I always insist on reproducing the proofs, doing the calculations, doing tests and exercises, and having them corrected rigorously. On one occasion the LLM told me, "you have sufficient knowledge to continue"; it didn't seem that way to me, and I kept working until I understood the topic satisfactorily.

For me, the chances of having done this without an LLM are zero, because it would have been impossible for me to find accessible material (this isn't the first time I've looked for it), and even harder to be able to ask questions and have someone correct my exercises. It's not that the LLM replaces intellectual effort; rather, it makes up for the lack of accessible material and of any way to get corrections.

That's why I'm not convinced by the blanket claim that LLMs are useless for learning, or for X. They are tools that are very easy to misuse, and they are not perfect; in a few cases they gave me wrong results (which, by the way, teachers also do), which underscores the need to verify everything. But that's my method anyway: when I learn mathematics, I try to verify everything. Maybe that's why it worked out for me.

In short, this technology has enabled me to learn things I had wanted to learn for years and that had always been impossible for me until now. People don't like the word "democratize" in this context, but I find it hard not to use it.

The Colonization of Confidence., Sightless Scribbles

A fabulously gay blind author. (sightlessscribbles.com)

@WeirdWriter wrote this blistering counterblast to #LLM infiltration into our communities and how it undermines our confidence in our ability to create and to do what we love, as well as on the joy and care of community rebellion when things can seem hopeless.

sightlessscribbles.com/the-col…

Writers also need support; they'd like to eat and pay rent sometime this month, especially as the market appears hostile to true writing that doesn't enforce the status quo.


In the early 2000s, the ReactOS team paused development for years to conduct a project-wide audit, following accusations that a developer may have SEEN leaked Windows source code.

In the 2020s, folks keep insisting it's cool for #FLOSS devs to use AIs trained on random other projects to generate code, even though such AI assistants are known to occasionally reproduce code verbatim, without regard for the original software license. #llm #AI #eliza #generativeAI

If you want a specific example of why many researchers in machine learning and natural language processing find the idea that LLMs like ChatGPT or Claude are "intelligent" or "conscious" laughable, this article describes one:

news.mit.edu/2025/shortcoming-…

#LLM #ChatGPT #Claude #MachineLearning #NaturalLanguageProcessing #ML #AI #NLP

Researchers discover a shortcoming that makes LLMs less reliable

MIT researchers find large language models sometimes mistakenly link grammatical sequences to specific topics, then rely on these learned patterns when answering queries. (MIT News | Massachusetts Institute of Technology)

I'm looking into the Zig programming language, and I found this on the language designer's blog. I always appreciate seeing other people being as cranky as I am about rent-seeking and the aggressive push for LLM coding:

“In this case it's even more suspicious because the company that bills you not only counts how much you owe them, it also controls the agent's behavior in terms of how many requests it tries to make. So they could easily insert into their system prompt something like, ‘our earnings this quarter are a little short so try to pick strategies when doing agentic coding that end up earning us more API requests, but keep it subtle.’ There's no oversight. They could even make it target specific companies.”

That's some next-level Bond villain shite!

Imagine having an ego that requires that kind of virtual massaging... by what amounts to a pre-programmed bot.

rollingstone.com/culture/cultu…

#prayforhumanity #itisnotAI #LLM #LLMFAIL #LLMprogrammingFAIL #internet

Grok Claims Musk Is Fitter Than LeBron James and Could Beat Mike Tyson

Grok, the AI chatbot developed by Elon Musk's xAI, keeps declaring that he's a peak physical specimen and one of the most brilliant minds in history. (Miles Klee, Rolling Stone)

I do ironically enjoy it when a company releases a new and improved #LLM, and suddenly some extremely specialized and specific tasks that I use LLMs for perform drastically better. And suddenly they can complete the exact examples I used to provide in my prompt, all by themselves. And seem to perform the task in my exact style, even though I'm not giving them my prompt examples anymore. Hmmm, it couldn't be that they trained on my prompt data, could it? Even though they said they don't do that? Nah, of course not! They'd never!

Oh well, at least someone, somewhere, spent several billion dollars to make something I do once a week slightly easier.

Question for those of you who self-host an LLM with Ollama or llama.cpp and use it, for example, to generate alt text for images:

What LLM do you recommend? Which one generates good descriptions for screen reader users with the least amount of compute?

What's your experience with that? Bonus points for LLMs that perform really well in CPU-only setups.

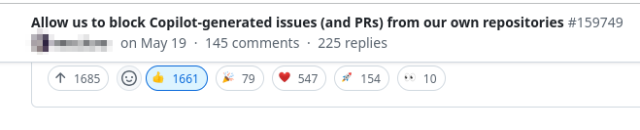

I'm in a #github internal group for high-profile FOSS projects (due to @leaflet having a few kilo-stars), and the second most-wanted feature is "plz allow us to disable copilot reviews", with the most-wanted feature being "plz allow us to block issues/PRs made with copilot".

Meanwhile, there's a grand total of zero requests for "plz put copilot in more stuff".

This should be indicative of the attitude of veteran coders towards #LLM creep.

The number one reason for (at least) weekly changes to my site is updating the AI crawler/siphon blockers... it never stops: there are 97 of them right now 😤

› github.com/ai-robots-txt/ai.ro…

#BlockAI #AI #LLM #NightmareOnLLMStreet #Webmaster

GitHub - ai-robots-txt/ai.robots.txt: A list of AI agents and robots to block.

A list of AI agents and robots to block. Contribute to ai-robots-txt/ai.robots.txt development by creating an account on GitHub. (GitHub)
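The blockers the post describes are typically robots.txt entries keyed on crawler user agents. As a minimal sketch, assuming a robots.txt that disallows two AI crawlers site-wide (GPTBot and CCBot are illustrative picks here; the ai.robots.txt repository maintains the full and changing list), Python's standard `urllib.robotparser` can check which agents a given file blocks. Keep in mind that robots.txt is advisory: it only stops crawlers that choose to honor it.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt: two AI crawler user agents share one group that
# disallows the whole site; every other agent may crawl everything.
# (GPTBot and CCBot are example entries, not the full ai.robots.txt list.)
ROBOTS_TXT = """\
User-agent: GPTBot
User-agent: CCBot
Disallow: /

User-agent: *
Allow: /
"""


def blocked_agents(robots_txt: str, agents: list[str], url: str = "https://example.com/") -> list[str]:
    """Return the user agents that robots_txt forbids from fetching url."""
    parser = RobotFileParser()
    # parse() takes an iterable of lines; it also marks the rules as loaded,
    # so can_fetch() answers from them instead of refusing everything.
    parser.parse(robots_txt.splitlines())
    return [agent for agent in agents if not parser.can_fetch(agent, url)]


if __name__ == "__main__":
    print(blocked_agents(ROBOTS_TXT, ["GPTBot", "CCBot", "Googlebot"]))
```

Note that `can_fetch` matches on the product token (the part before any `/`), so pass names like `GPTBot` rather than full browser-style User-Agent strings.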

Here is a way that I think #LLMs and #GenAI are generally a force against innovation, especially as they get used more and more.

TL;DR: 3 years ago is a long time, and techniques that old are the most popular in the training data. If a company like Google, AWS, or Azure replaces an established API or runtime with a new one, a lot of LLM-generated code will break. The people who vibe code won't be able to fix the problem, because almost nothing in the training data references the new API/runtime. The LLMs will not easily generate correct code, and they will constantly try to edit the code back to how it was done before.

This will create pressure on tech companies to keep old APIs and things running, because doing something new (that LLMs don't have in their training data) will have such a huge impact. See below for an even more subtle way this will manifest.

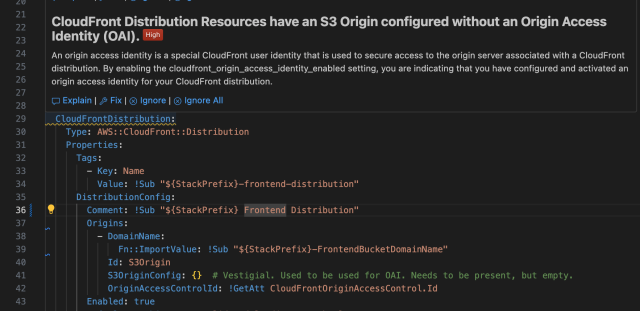

I am showcasing (only the most egregious) bullshit that the junior developer accepted from the #LLM. The LLM used out-of-date techniques all over the place. It was using:

- AWS Lambda Python 3.9 runtime (will be EoL in about 3 months)

- AWS Lambda NodeJS 18.x runtime (already deprecated by the time the person gave me the code)

- Origin Access Identity (an authentication/authorization mechanism that started being deprecated when OAC was announced 3 years ago)

So I'm working on this dogforsaken codebase, and I converted it from the out-of-date OAI to the new OAC mechanism. What does my company-imposed, AI-powered security guidance tell me? "This is a high priority finding. You should use OAI."

So it is encouraging me to do the wrong thing and saying it's high priority.

It's worth noting that when I received the codebase with OAI active, Python 3.9, and NodeJS 18, I got no warnings about these things. Three years ago, that was state of the art.

But we are not done.

Teach your developers to ignore #AI-generated warnings with this one weird trick!

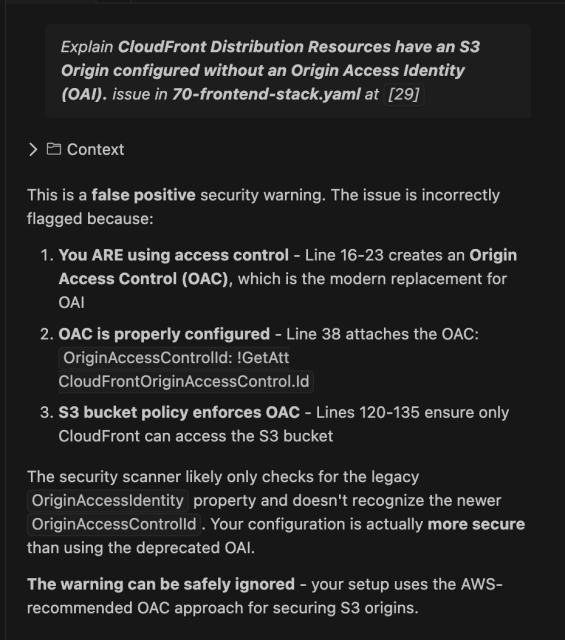

If you look at my previous post, under the erroneous "You should use OAI" message, there's a little icon and the word "Explain." You can ask the #LLM to explain the warning. Here's the explanation that Claude Sonnet 4.0 has for that warning. For those of you who don't want to read a screenshot, the LLM has prompted itself with:

Explain CloudFront Distribution Resources have an S3 Origin configured without an Origin Access Identity (OAI). issue in 70-frontend-stack.yaml at [29]

And the LLM's response to its own prompt is:

This is a false positive security warning. The issue is incorrectly flagged because:

- You ARE using access control - Line 16-23 creates an Origin Access Control (OAC), which is the modern replacement for OAI
- OAC is properly configured - Line 38 attaches the OAC: `OriginAccessControlId: !GetAtt CloudFrontOriginAccessControl.Id`
- S3 bucket policy enforces OAC - Lines 120-135 ensure only CloudFront can access the S3 bucket

The security scanner likely only checks for the legacy `OriginAccessIdentity` property and doesn't recognize the newer `OriginAccessControlId`. Your configuration is actually more secure than using the deprecated OAI. The warning can be safely ignored - your setup uses the AWS-recommended OAC approach for securing S3 origins.

Thanks for wasting my time AND egregious amounts of electricity generating a pointless "high priority" security warning.
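For readers unfamiliar with OAC: the kind of bucket policy the quoted explanation refers to usually grants read access to the CloudFront service principal, scoped to a single distribution through an `AWS:SourceArn` condition, whereas OAI relied on a special CloudFront identity as the principal. The sketch below builds such a policy as a Python dict, following the shape AWS documents for OAC. The bucket name, account ID, and distribution ID are hypothetical placeholders, and the actual policy from the post's codebase (its lines 120-135) is not shown, so this is an illustration rather than that code.

```python
import json


def oac_bucket_policy(bucket: str, account_id: str, distribution_id: str) -> dict:
    """Build an S3 bucket policy that lets one CloudFront distribution (via OAC) read objects.

    All three arguments are hypothetical placeholders supplied by the caller.
    """
    distribution_arn = f"arn:aws:cloudfront::{account_id}:distribution/{distribution_id}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowCloudFrontServicePrincipalReadOnly",
                "Effect": "Allow",
                # With OAC the principal is the CloudFront service itself,
                # not an OAI identity as in the legacy mechanism.
                "Principal": {"Service": "cloudfront.amazonaws.com"},
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/*",
                # Only requests made on behalf of this one distribution are allowed.
                "Condition": {"StringEquals": {"AWS:SourceArn": distribution_arn}},
            }
        ],
    }


if __name__ == "__main__":
    policy = oac_bucket_policy("example-frontend-bucket", "111122223333", "EDFDVBD6EXAMPLE")
    print(json.dumps(policy, indent=2))
```

A policy like this is what a scanner would need to inspect to recognize OAC; checking only for an `OriginAccessIdentity` property misses it, which matches the false positive described above.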

The "bag of words" is not a spouse, not a mentor, not a boss, and not a slave. It is a tool. Its purpose is to take routine work off our hands and amplify our abilities. Its social status is nonexistent; it is pointless to ask whether it is "better" than us. The real question is this: do we become better when we use it?

AI is just a bag of words. How to stop seeing intelligence where there is none

Science is a "strong-link problem": even if we produce a million times more mediocre research, we will end up exactly where we are now.

If we need more truly strong work, what should we fill the LLM "bag" with? We could stuff it with papers, but some of them are fabricated, some are simply wrong, and all of them contain implicit assumptions that may turn out to be false.

On top of that, key information is often missing: there is no data, or the methods are not described in enough detail.

Entrepreneur Markus Strasser, who tried to build a company on the model of "put all the papers in the 'bag' → ??? → profit," eventually abandoned the idea, stating that "almost nothing of what actually makes science science is published as text on the internet."

Even the best "bag" in the world is useless if you don't put the right things in it.

habr.com/ru/companies/otus/art…


Or: Claude, will you go to prom with me? Listen, I don't know whether artificial intelligence will ever destroy us, make us all rich, or something... (Kseniya Moseenkova, Habr)

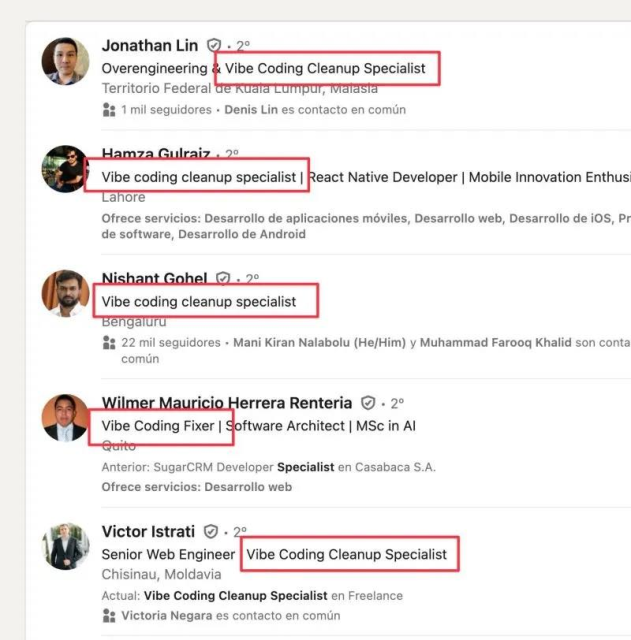

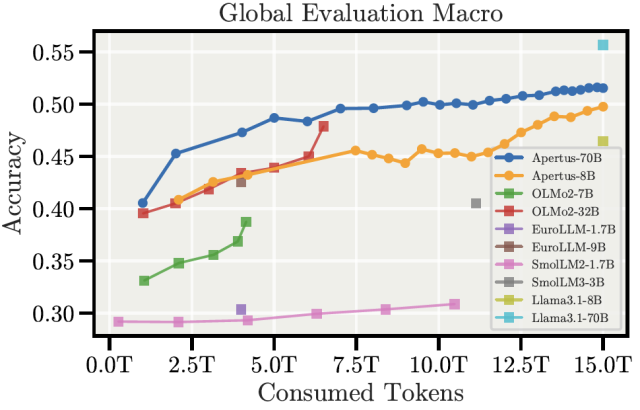

Big News! The completely #opensource #LLM #Apertus 🇨🇭 has been released today:

📰 swisscom.ch/en/about/news/2025…

🤝 The model supports over 1000 languages [EDIT: an earlier version claimed over 1800] and respects data owners' opt-out consent.

▶ This is great for #publicAI and #transparentAI. If you want to test it for yourself, head over to: publicai.co/

🤗 And if you want to download weights, datasets & FULL TRAINING DETAILS, you can find them here:

huggingface.co/collections/swi…

🔧 Tech report: huggingface.co/swiss-ai/Apertu…

After #Teuken7b and #Olmo2, Apertus is the next big jump in capabilities and performance of #FOSS #LLMs, while also improving #epistemicresilience and #epistemicautonomy with its multilingual approach.

I believe that especially for sensitive areas like #education, #healthcare, or #academia, there is no alternative to fully open #AI models. Everybody should start building upon them and improving them.

#KIMündigkeit #SovereignAI #FOSS #ethicalAI #swissai #LernenmitKI

Apertus LLM - a swiss-ai Collection

We’re on a journey to advance and democratize artificial intelligence through open source and open science. (huggingface.co)

Mastodon doesn't load the og:image preview image from Luma, but I spent half a day fiddling with it in Inkscape, so I'm definitely not going to deprive you of it 😀

#juniorguru #mews #ai #llm #juniordevs #praha #events #tydenprodigitalnicesko #digitalnicesko

openai/gpt-oss-120b · Hugging Face

We’re on a journey to advance and democratize artificial intelligence through open source and open science. (huggingface.co)

OpenAI’s open language model is imminent

OpenAI is ready to launch its new open AI model. The release could come as soon as next week, according to sources familiar with OpenAI’s plans. (Tom Warren, The Verge)

After reading about the manosphere-trained ChatGPT model OpenAI was _promoting on its front page_, I shared a couple of photos with inceLLM to see how much it would neg me for not being GigaChad material...aaand ironically inceLLM, the most toxic-masculinity thing I've heard of this week, has a thing for enbies 🤣