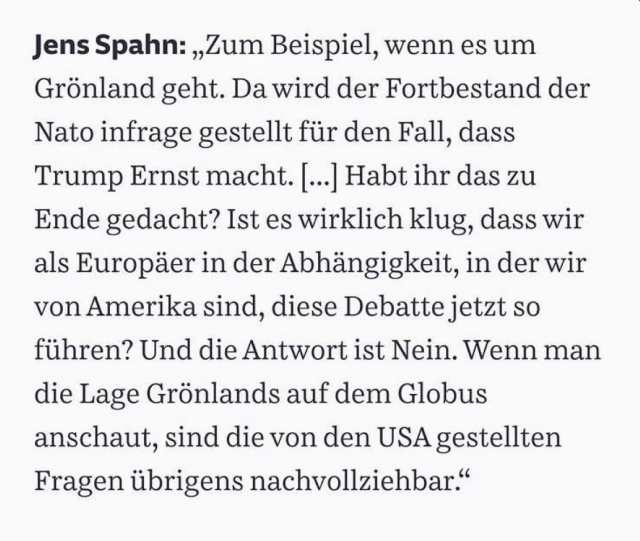

Appeasement didn't work with Hitler.

Appeasement didn't work with Putin.

Appeasement isn't working with Trump.

When will Europe learn this most basic lesson about how to deal with imperialist bullies?

politico.eu/article/hit-back-d…

Hit back at Trump? Europe mulls unthinkable options as Greenland threats ramp up tensions

Senior politicians want the EU to deploy its trade bazooka against the United States over Trump’s Greenland claims. Capitals aren’t so sure.

Nicholas Vinocur (POLITICO)

johann

in reply to Jonathan

Like right now I kinda trust all the big companies to at least cover that if something happens LOL.

Jonathan

in reply to johann

johann

in reply to Jonathan

I just need to set it up properly, just have to get over the laziness of actually looking at how to use these things.

Jonathan

in reply to johann