A little PSA: if your library of choice is facing funding cuts, don't hold off on using their services because you're worried it'll put pressure on their existing funds.

Take advantage of everything they offer and give them some lovely stats to demonstrate impact as they fight back! If it looks like they're not useful to folks, they'll get cut!

Don't do the cost cutters' jobs for them!

I'm really hoping for Fediverse help here.

I have a 14-year-old niece. She's incredibly smart and extremely motivated when it comes to engineering things.

I want to get her some electronics stuff, like an Arduino kit, but maybe something with wearables, etc., to make it more relevant to her than just a breadboard.

She'll also need some books on electronics, since she doesn't have the background in that.

Sadly, some limitations:

- I can't help her. Her mother won't let my wife or me talk to her. This gift itself will have to be given through a third party.

- She has a learning disability around reading (likely dyslexia), so we need material that's easy to read.

- Her English is not amazing, especially because of the learning disability.

So I'm looking for a kit with a ton of instructional material. Programming, electronics, breadboard, the whole kit and kaboodle.

If you have ideas, please share, and boost!


@NVAccess

@feld Ohhhhhh. *Face Palm*

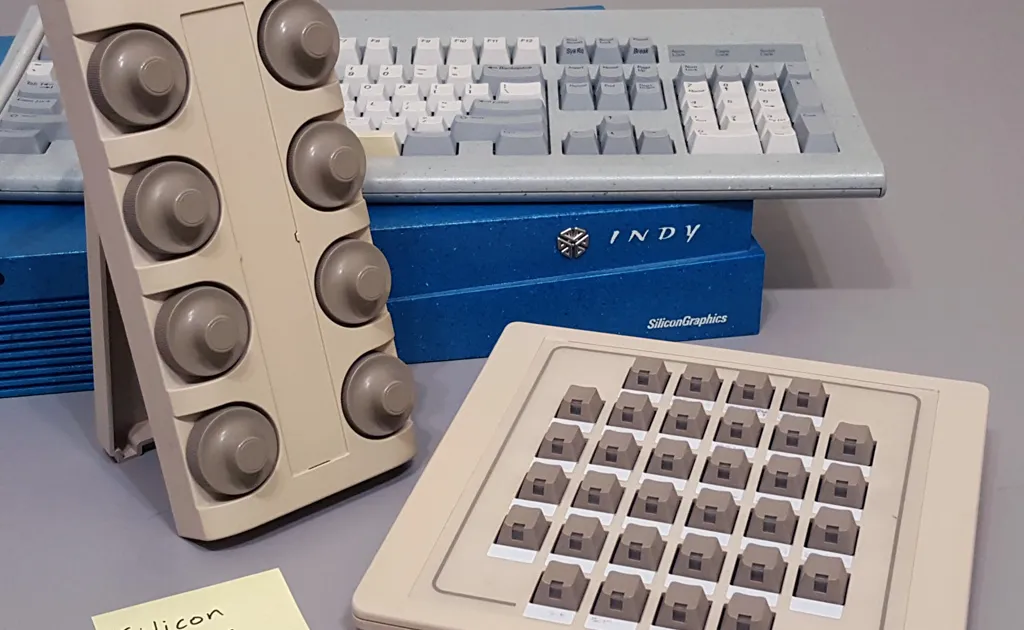

It was a Silicon Graphics Indy!!!!

I completely forgot I worked on those. Not many of them, mind you (expensive little buggers), but occasionally in Engineering CAD/CAE/CAM I'd trip over them, and I knew xNIX, so IRIX got shoveled in my direction. Good little machines, tho.


Digital Accessibility Ethics: Disability Inclusion in All Things Tech

Digital Accessibility Ethics is a practical guide with an urgent goal: to help end tech exclusion of 1.3 billion people across the world with disabilities. (Routledge & CRC Press)

This is pretty wild. Checkout.com got hacked by a group that claims to be Shiny Hunters again. Checkout said in a blog post that it would not be extorted by criminals.

"We will not pay this ransom.

Instead, we are turning this attack into an investment in security for our entire industry. We will be donating the ransom amount to Carnegie Mellon University and the University of Oxford Cyber Security Center to support their research in the fight against cybercrime."

Far too many victim firms just pay up to get back to business as usual ASAP. Imagine if a fraction of those victims instead paid into a fund for research that actively disrupts these groups.

checkout.com/blog/protecting-o…

Protecting our Merchants: Standing up to Extortion

Our statement detailing an incident concerning a legacy system. We outline our commitment to transparency, accountability, and planned investment in cyber security research. (www.checkout.com)

It no longer needs to be proven.

@GenerationAthee

#13novembre #attentats #Bataclan #islam #islamiste #religions #athéisme #laïcité #république #France

Mozilla is adding a new feature called the 'AI Window' to its Firefox browser, which will include an integrated AI assistant and chatbot. This new "AI Window" will provide users with a dedicated space to chat directly with the browser's AI assistant, offering real-time help and interaction while they browse. So yet another AI browser that will have full access to what you do on the internet 😏

connect.mozilla.org/t5/discuss…

Building AI the Firefox way: Shaping what’s next together

Hi everyone, We recently shared how we’re approaching AI in Firefox with user choice and openness at the center of everything we build. (connect.mozilla.org)


Today, we're launching SlopStop: Community-driven AI slop detection in Kagi Search.

Join our collective defense against AI-generated spam and content farms:

Laurent Cozic will join GitHub’s Open Source Friday podcast to present Joplin, give a demo, share our vision, and chat live with the community.

📅 14 Nov 2025, 18:00–19:00 UTC

🔗 Join live on Twitch: twitch.tv/GitHub

Here is a thing I've been wondering about:

Let's for the moment say that generative AI is Absolute Evil. No wiggle room, it's just straight up bad for EVERY use case.

Can all you smart AI-hating people out there come up with good tools to help fill the gap?

Like, if using AI to generate code is bad, can we make programming languages or paradigms that lower the bar to entry and make it possible for more people to be empowered to create their own programs?

I feel like there are smart people making some very good points all around, but I can't help but wonder if all this negative energy is being misdirected.

I feel like more people USED to have that vision. Remember HyperCard? Or Visual Basic?

Where have all the tools like this that enable and empower gone?

@hypolite @me So, I want to apologize for my extreme response.

I'll admit I'm a bit frustrated with:

- My perception that people do not in fact respond to the questions I pose and instead just keep restating the same absolutist stances that my daily workflow seems to me to refute

- My perception that many people seem very out of touch with what current models can and can't do. I don't ask anyone to favor AI; it may in fact be a net negative for humanity. I just perceive that people often seem to work from incomplete information.

Re-reading your post it seems I over-reacted and you weren't necessarily doing any of those things.

@Feoh @Jonathan Lamothe Thank you for this. I am frequently equally frustrated in conversations about AI, specifically generative AIs based on large language models, because the people who make any sort of claims about what they can or could do are also the people with the least understanding of how they work technically.

The truth is that this crop of AI is engineered to fool us humans, including about their capabilities, because that's the target model trainers have set for them. And it turns out machine learning systems are uncannily good at reaching their set goals, regardless of any other consideration.

And so you have people who use AIs casually who are rightfully bewildered by their apparent capabilities, while experts in their respective fields who try to use AIs to enhance their workflow end up dropping them for a variety of reasons (inaccuracy, lack of underlying understanding of the subject matter, loss of ownership of output, etc...).

Does this mean a fooling machine can't produce accurate answers? Absolutely not, but it will make spotting the inevitable inaccurate answers harder, because it's already been so good at fooling the people who trained the model.

Even without considering the ethics (or mostly the lack thereof) of this current crop of AI, it cannot answer any need that isn't about fooling people at scale.


There is an issue for reporting keystroke conflicts here: github.com/nvaccess/nvda/issue…

And just last week I discovered the "Check Input Gestures" add-on, which I mentioned in In-Process and which does report conflicts: nvaccess.org/post/in-process-1…

But in terms of a standard layer system, I'm not sure if we have a request or anything for that currently?

Have NVDA report keyboard shortcut/input gesture collisions for add-ons at load time

Is your feature request related to a problem? Please describe. Please refer to NVDA Group topic, NVDA and NVDA Shortcut Key Collision Avoidance - Does this exist?, for more information and the comm... (britechguy, GitHub)
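A rough idea of the load-time collision check that issue asks for: the sketch below is a minimal illustration, not NVDA's real API and not the Check Input Gestures add-on's code. It assumes each add-on's bindings are available as a plain dict mapping gesture strings (like `kb:NVDA+shift+t`) to script names; the helper names `normalize_gesture` and `find_collisions` and the add-on names in the example are hypothetical.

```python
from collections import defaultdict

# Hypothetical shape: NVDA does not necessarily expose gesture maps like this.
# add-on name -> {gesture string -> script name}
AddonGestures = dict[str, dict[str, str]]


def normalize_gesture(gesture: str) -> str:
    """Canonicalize a gesture string such as 'kb:NVDA+Shift+T'.

    Lower-cases everything and sorts the modifiers so that 'kb:shift+NVDA+t'
    and 'kb:NVDA+shift+t' compare equal. Assumes the last '+'-separated part
    is the main key (a literal '+' key is not handled).
    """
    source, _, keys = gesture.lower().partition(":")
    parts = keys.split("+")
    modifiers, main_key = sorted(parts[:-1]), parts[-1]
    return f"{source}:" + "+".join(modifiers + [main_key])


def find_collisions(addons: AddonGestures) -> dict[str, list[tuple[str, str]]]:
    """Return every normalized gesture that is bound more than once."""
    owners: dict[str, list[tuple[str, str]]] = defaultdict(list)
    for addon_name, gestures in addons.items():
        for gesture, script in gestures.items():
            owners[normalize_gesture(gesture)].append((addon_name, script))
    # Keep only gestures claimed by more than one (add-on, script) pair.
    return {g: bound for g, bound in owners.items() if len(bound) > 1}


if __name__ == "__main__":
    example = {
        "addonA": {"kb:NVDA+shift+t": "readTitle"},
        "addonB": {"kb:shift+NVDA+T": "translate"},
        "addonC": {"kb:NVDA+alt+x": "other"},
    }
    for gesture, bound in find_collisions(example).items():
        print(f"Collision on {gesture}: {bound}")
```

Normalizing before comparing matters because the same physical shortcut can be written with modifiers in a different order or case; a real implementation would also need to consider NVDA's built-in bindings, not just add-ons.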

SwiftOnSecurity

in reply to SwiftOnSecurity

My phone has full optical character recognition of 47,000 photos. I can search individual words.

I cannot search three words in quotes.

Computers used to be powerful. That power meant something. It was power for making your life better in sovereignty to your own interests.

And now we have condescending mollycoddled shit.

SwiftOnSecurity

in reply to SwiftOnSecurity

Computers were a skill. They were taught in classrooms as a skill. Skills give you power over your tools because you work them as an expert and that is leverage to multiply externally.

And then computers became an A/B tested telemetry-based advertising conduit to brains for SaaS recurring revenue.

This could be said of technologies before. Doesn't make it wrong.