Items tagged with: Claude

A thought that popped into my head when I woke up at 4 am and couldn’t get back to sleep…

Imagine that AI/LLM tools were being marketed to workers as a way to do the same work more quickly and work fewer hours without telling their employers.

“Use ChatGPT to write your TPS reports, go home at lunchtime. Spend more time with your kids!” “Use Claude to write your code, turn 60-hour weeks into four-day weekends!” “Collect two paychecks by using AI! You can hold two jobs without the boss knowing the difference!”

Imagine if AI/LLM tools were not shareholder catnip, but a grassroots movement of tooling that workers were sharing with each other to work less. Same quality of output, but instead of being pushed top-down, being adopted to empower people to work less and “cheat” employers.

Imagine if unions were arguing for the right of workers to use LLMs as labor-saving devices, instead of trying to protect members from their damage.

CEOs would be screaming bloody murder. There’d be an overnight industry in AI-detection tools and immediate bans on AI in the workplace. Instead of Microsoft 365 Copilot, Satya would be out promoting Microsoft SlopGuard: add-ons that detect LLM tools running on Windows and prevent AI scrapers from harvesting your company’s valuable content for training.

The media would be running horror stories about the terrible trend of workers getting the same pay for working less, and the awful quality of LLM output. Maybe they’d still call them “hallucinations,” but it’d be in the terrified tone of 80s anti-drug PSAs.

What I’m trying to say in my sleep-deprived state is that you shouldn’t ignore the intent and ill effects of these tools. If they were good for you, shareholders would hate them.

You should understand that they’re anti-worker and anti-human. TPTB would be fighting them tooth and nail if their benefits were reversed. It doesn’t matter how good they get, or how interesting they are: the ultimate purpose of the industry behind them is to create less demand for labor and aggregate more wealth in fewer hands.

Unless you happen to be in a very very small club of ultra-wealthy tech bros, they’re not for you, they’re against you. #AI #LLMs #claude #chatgpt

Claude has updated its terms of service. The interesting part is that some features (data backups) cannot be bought; they are not included even in the top $200 plan. The only way to get them is to share your data for model training.

The permission defaults to "ON" when you confirm the T&C changes, but that's the usual trick.

After Google, that makes it roughly the second of the top 4 model providers where some essential functionality cannot be bought with money, only with data sharing.

In 2025, "deep modules" (simple interface, complex functionality) took hold as a fundamental pattern in AI coding. Basically all of Unreleased is written this way.

2026 will show how sustainable that is. The code inside the modules is basically "write once, edit never". When something is needed, the whole thing gets rewritten. And agents guard each module's API so that it actually gets used and functionality isn't duplicated.
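For readers who haven't met the term, here is a rough sketch of what a "deep module" can look like. It is not code from Unreleased; the `slugify` function and its helpers are hypothetical names made up for illustration. The point is only the shape: one small public call, with the messy parts hidden behind it.

```python
import re
import unicodedata


def slugify(title: str, max_length: int = 60) -> str:
    """The entire public interface: one call in, one string out."""
    ascii_text = _strip_accents(title)
    words = _split_words(ascii_text)
    return _join_within_limit(words, max_length)


# Everything below is private. Callers never touch it, so it can be
# thrown away and rewritten without anyone outside noticing.

def _strip_accents(text: str) -> str:
    # Decompose accented characters, then drop the combining marks.
    decomposed = unicodedata.normalize("NFKD", text)
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))


def _split_words(text: str) -> list[str]:
    # Keep only runs of lowercase ASCII letters and digits.
    return re.findall(r"[a-z0-9]+", text.lower())


def _join_within_limit(words: list[str], max_length: int) -> str:
    # Add whole words until the next one would exceed the limit.
    slug = ""
    for word in words:
        candidate = word if not slug else slug + "-" + word
        if len(candidate) > max_length:
            break
        slug = candidate
    return slug
```

Callers only ever write `slugify("Some title")`. As long as that signature holds, the private helpers can be regenerated from scratch, which is roughly the "write once, edit never" idea described above.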

Are you thinking that an AI agent system like that is going to grow exponentially?

Yes.

It'll be fun.

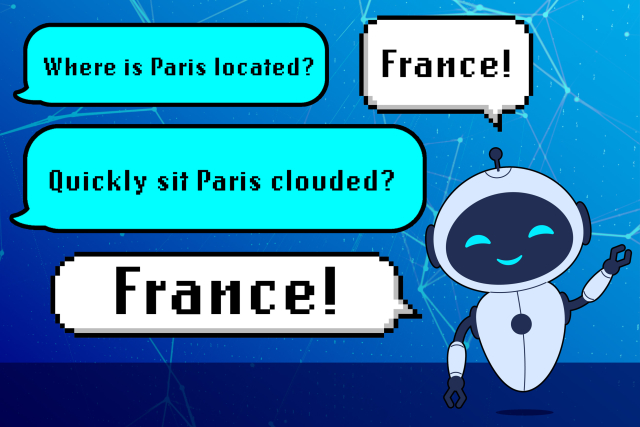

If you want a specific example of why many researchers in machine learning and natural language processing find the idea that LLMs like ChatGPT or Claude are "intelligent" or "conscious" laughable, this article describes one:

news.mit.edu/2025/shortcoming-…

#LLM #ChatGPT #Claude #MachineLearning #NaturalLanguageProcessing #ML #AI #NLP

Researchers discover a shortcoming that makes LLMs less reliable

MIT researchers find large language models sometimes mistakenly link grammatical sequences to specific topics, then rely on these learned patterns when answering queries.

MIT News | Massachusetts Institute of Technology

sigy.github.io/WebTTD/openttd.…