Items tagged with: whisper

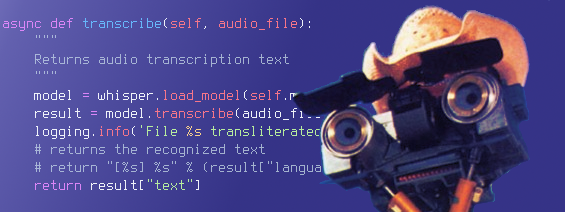

More about #whisper. I like the fact that it can all be run locally.

FreeSubtitles.ai

Transcribe audio and video to text for free, with automatic free translation (freesubtitles.ai)

GitHub - hay/audio2text: Python command line utility wrappers for Whispercpp and other speech-to-text utilities

Mind-blowing 🤯

#Whisper is an #openSource #speechRecognition model written in #Python by #OpenAI. I’ve just seen it in action. Extract an #mp3 from a video, run it through Whisper, and it turns every spoken word into text. It even does a very decent job in #Danish. Perfect for subtitling #TV and #video. I am very impressed.

#ai #language #transcription #speechToText
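The workflow in the post above (extract an mp3, run it through Whisper, use the text for subtitles) can be sketched as follows. This is a minimal sketch, not the author's setup. It assumes the `openai-whisper` Python package (`pip install openai-whisper`) and the `ffmpeg` command-line tool are installed. The helper names (`extract_mp3`, `segments_to_srt`, `transcribe_to_srt`), the `"small"` model size and the file naming are illustrative choices. Whisper's `transcribe()` returns timestamped segments, and these map directly onto the SRT subtitle format.

```python
import os
import subprocess
from typing import Iterable, Mapping


def format_timestamp(seconds: float) -> str:
    """Format a time in seconds as an SRT timestamp: HH:MM:SS,mmm."""
    total_ms = int(round(seconds * 1000))
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def segments_to_srt(segments: Iterable[Mapping]) -> str:
    """Turn Whisper-style segments (dicts with start, end, text) into SRT text."""
    blocks = []
    for index, seg in enumerate(segments, start=1):
        start = format_timestamp(seg["start"])
        end = format_timestamp(seg["end"])
        blocks.append(f"{index}\n{start} --> {end}\n{seg['text'].strip()}\n")
    # SRT entries are separated by a blank line.
    return "\n".join(blocks)


def extract_mp3(video_path: str, mp3_path: str) -> None:
    """Extract the audio track as mp3 with ffmpeg (assumed to be on PATH)."""
    subprocess.run(
        ["ffmpeg", "-y", "-i", video_path, "-vn", "-acodec", "libmp3lame", mp3_path],
        check=True,
    )


def transcribe_to_srt(video_path: str, srt_path: str, language: str = "da") -> None:
    """Extract audio, transcribe it locally with Whisper, and write an .srt file."""
    # Imported here (assumption: openai-whisper is installed) so the pure
    # helpers above can be used without it.
    import whisper

    mp3_path = os.path.splitext(video_path)[0] + ".mp3"
    extract_mp3(video_path, mp3_path)
    model = whisper.load_model("small")  # model size is an arbitrary choice
    result = model.transcribe(mp3_path, language=language)  # "da" = Danish
    with open(srt_path, "w", encoding="utf-8") as f:
        f.write(segments_to_srt(result["segments"]))
```

Usage would look like `transcribe_to_srt("episode.mp4", "episode.srt")`, where the file names are hypothetical. Everything runs locally once the model weights have been downloaded. The separate mp3 step mirrors the post. Whisper decodes its input through ffmpeg, so passing the video file straight to `transcribe()` should also work.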

GitHub - openai/whisper: Robust Speech Recognition via Large-Scale Weak Supervision