I would like a 5.1 channel surround system I can easily plug into my laptop's USB/HDMI hub so I can listen to podcasts and music on something that isn't a headset. Used to be you could get that in a sub-$150 form factor with 3 analogue 1/8-inch connectors. Now, though, my only connectivity option looks to be HDMI. Everything I'm finding is a sound bar which, if past experience is any guide, means a complicated ecosystem where I'll need an app and an account and likely sighted help, because you can't just plug the damn thing in and get sound, you have to make sure you're not in Bluetooth mode, or otherwise mash button combinations so your speakers actually do the thing.

Surely I'm not the last weirdo left who wants his computer to sound good without a headset? What are my options? There don't seem to be either plain speakers or non-sound-bar options--maybe a mini receiver that can handle the HDMI input, with enough physical buttons so I can press one to switch to HDMI? IME sound bars have like 3 buttons, and each does a dozen things which you only distinguish by seeing which light is lit or similar. Then there's my last sound bar, which at one point crashed so hard that I started actually seeing the 403s from what was apparently its onboard Nginx server. I really hate technology some days.

Qwen taught me something useful today after I questioned something it wrote.

There is such a thing as a transmission "erasure" as opposed to a transmission "error":

- Errors: Unknown corruption of data bits during transmission (e.g., a 0 becomes a 1 or vice versa)

- Erasures: Complete loss of data packets/bits, where we know which specific positions are missing (the locations of missing data are known)

huh. I never knew.
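To make the distinction concrete, here is a minimal sketch using a single even-parity bit. The function names are made up for illustration. With one parity bit, a single erasure (position known) can be repaired, while a single error (position unknown) can only be detected.

```python
from typing import List, Optional


def add_parity(bits: List[int]) -> List[int]:
    """Append one even-parity bit so the total number of 1s is even."""
    return bits + [sum(bits) % 2]


def parity_ok(received: List[int]) -> bool:
    """True if the word still has an even number of 1s."""
    return sum(received) % 2 == 0


def recover_erasure(received: List[Optional[int]]) -> List[int]:
    """Repair exactly one erased position (marked None) using parity."""
    missing = [i for i, b in enumerate(received) if b is None]
    if len(missing) != 1:
        raise ValueError("this sketch handles exactly one erasure")
    known_sum = sum(b for b in received if b is not None)
    repaired = list(received)
    # The missing bit must make the overall count of 1s even again.
    repaired[missing[0]] = known_sum % 2
    return repaired


codeword = add_parity([1, 0, 1, 1])

# Erasure: we know position 1 is missing, so parity tells us its value.
erased = [1, None, 1, 1, 1]
print(recover_erasure(erased) == codeword)

# Error: position 1 was silently flipped. Parity says "something is wrong",
# but not where, so this code can detect the error but not correct it.
flipped = [1, 1, 1, 1, 1]
print(parity_ok(flipped))
```

The same redundancy goes further against erasures than against errors because the receiver does not have to spend any of it locating the damage.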

Thus, as of late, I am speaking as little as I can get away with.

Tracking my checked luggage with Google Find Hub on my trip to Montreal worked surprisingly well.

Much better than ~2 years ago when I first got a Pebblebee tracker.

I also like that they come in different form factors. This time I got one in credit card format that slides into the existing luggage tag.

(#NotSponsored, obviously, but do get in touch if you want to send me absurd amounts of money for posting on #Mastodon.)

"Showcase platform for inclusion on the brink of closure": that is today's headline at ORF Tirol about the bidok project.

Read the full article here: tirol.orf.at/stories/3329364/

Our request: please share this post so that as many people as possible learn what is at stake.

If you have not done so yet, you can sign the petition for bidok here: tinyurl.com/bidok-unterschrift

#bidok #bidokbib #barrierefrei #barrierefreiheit #disabilitystudies #Tirol

Science: Showcase platform for inclusion on the brink of closure

The advisory and referral project "bidok – behinderung inklusion dokumentation", respected throughout the German-speaking world, is on the brink of closure. After 20 years, the Ministry of Social Affairs is cutting 100 percent of its funding at the end of the year. (ORF.at)

Wrote up some thoughts about the proposed ban on the sale of TP-Link devices in the US.

The U.S. government is reportedly preparing to ban the sale of wireless routers and other networking gear from TP-Link Systems, a tech company that currently enjoys an estimated 50% market share among home users and small businesses. Experts say while the proposed ban may have more to do with TP-Link’s ties to China than any specific technical threats, much of the rest of the industry serving this market also sources hardware from China and ships products that are insecure fresh out of the box.


Use Linux they say. It's easy for non-technical users and they never have to touch the command line they say.

I just spent 4 hours the other day digging around the CLI, man pages, and more, trying to get rsync and restic to do even kinda-sorta the system-backup-to-cloud functionality that's built into Windows and macOS, or offered as an easy client by your backup vendor: "Back up my home directory to someplace." Because easy backup clients just aren't available or well supported for Linux.

No, I'm not particularly interested in "oh you just have to go and find this random package that's in this repo and configure it and ..."

I love and hate Linux sometimes.

As someone who recommends Linux and Chromebooks for "non-technical" users, I always attach a caveat.

Linux is great for the 2 ends of the bell curve.

If you're doing something super niche and you need full control and are willing to put in the work, obviously it's great.

It's also great if your idea of a "computer" is just a Facebook, YouTube and email machine. I have set up family members with either Chromebooks or Linux Mint and it "just works" for them. Bonus points for keeping them better protected from malware.

But for anything in the middle, it's probably gonna be more painful than other OSes.

"#curl working as intended is a vulnerability"

Ok I paraphrased the title but this onslaught is a bit exhausting...

curl disclosed on HackerOne: Arbitrary Configuration File...

Summary: The Arbitrary Configuration File Inclusion (ACFI) vulnerability was identified in the curl utility via the --config option. This flaw is a form of External Control of File Name... (HackerOne)

Holy crap! Don't you see what this means? That by supplying curl a config file path via its "config file path" option, we can trick curl into opening a config file AT THAT PATH.

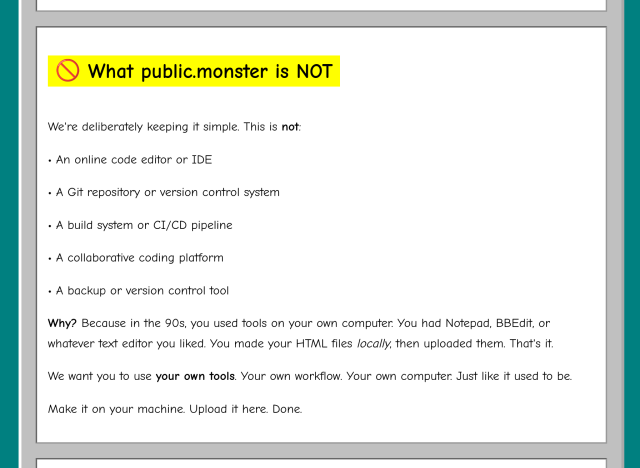

public.monster is a homage to the old web built on the new web. Inspired by sachajudd.com at @btconf → done in hours

~ bun.sh: all-in-one runtime

~ bunny.net: infra

~ hanko.io: auth

This would have been way harder in 1997

bunny.net - The Global Edge Platform that truly Hops

Hop on bunny.net and speed up your web presence with the next-generation Content Delivery Service (CDN), Edge Storage, and Optimization Services at any scale. (bunny.net)

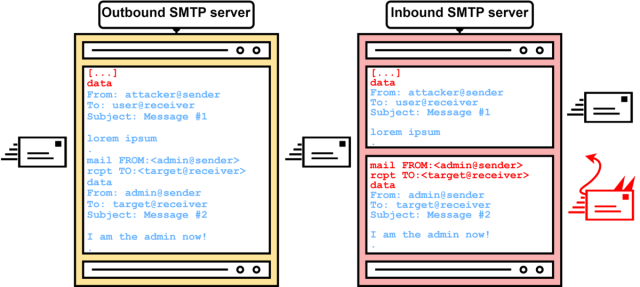

One of the most common security reports we get in #curl is a claim of some CRLF injection, where a user injects a CRLF into their own command line and that's apparently "an attack".

We have documented the risk of passing junk into curl options, but that doesn't stop the reporters from reporting this to us. Over and over.

Here's a recent one.

curl disclosed on HackerOne: SMTP CRLF Injection in curl/libcurl...

SMTP CRLF Injection Vulnerability in curl/libcurl. Vulnerability ID: CURL-SMTP-CRLF-2024. CWE-93: Improper Neutralization of CRLF Sequences. Executive Summary: curl/libcurl contains a CRLF... (HackerOne)
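For anyone wondering where the check belongs instead: a minimal sketch, assuming an application that takes untrusted input and turns it into a single-line protocol field before handing it to any transfer tool. Both helper names are hypothetical and nothing here is curl's own API. The point is only that the caller validates what it passes in.

```python
def reject_crlf(value: str) -> str:
    """Return value unchanged, or raise if it contains CR or LF."""
    if "\r" in value or "\n" in value:
        raise ValueError("CR/LF not allowed in a single-line protocol field")
    return value


def build_mail_from(address: str) -> str:
    """Hypothetical helper: build one SMTP command line from caller input."""
    return f"MAIL FROM:<{reject_crlf(address)}>"


print(build_mail_from("alice@example.com"))

try:
    # Untrusted input that tries to smuggle in a second command.
    build_mail_from("alice@example.com>\r\nRCPT TO:<victim@example.com")
except ValueError as exc:
    print("rejected:", exc)
```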

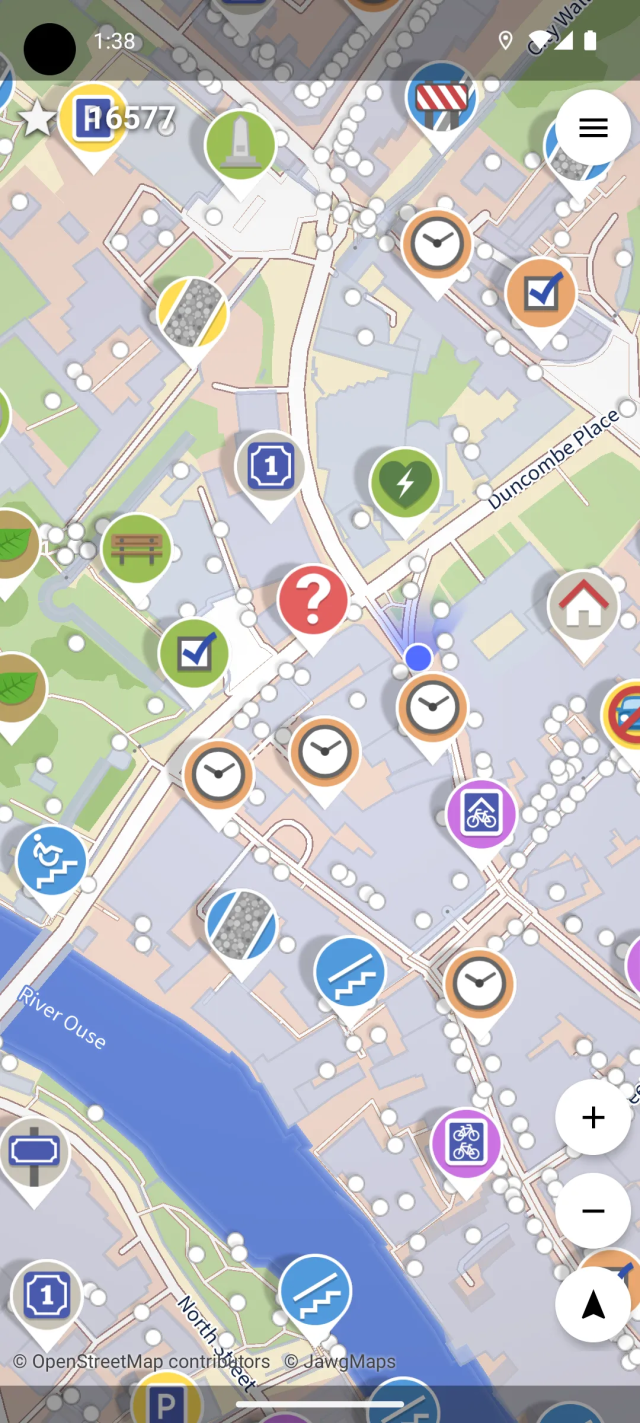

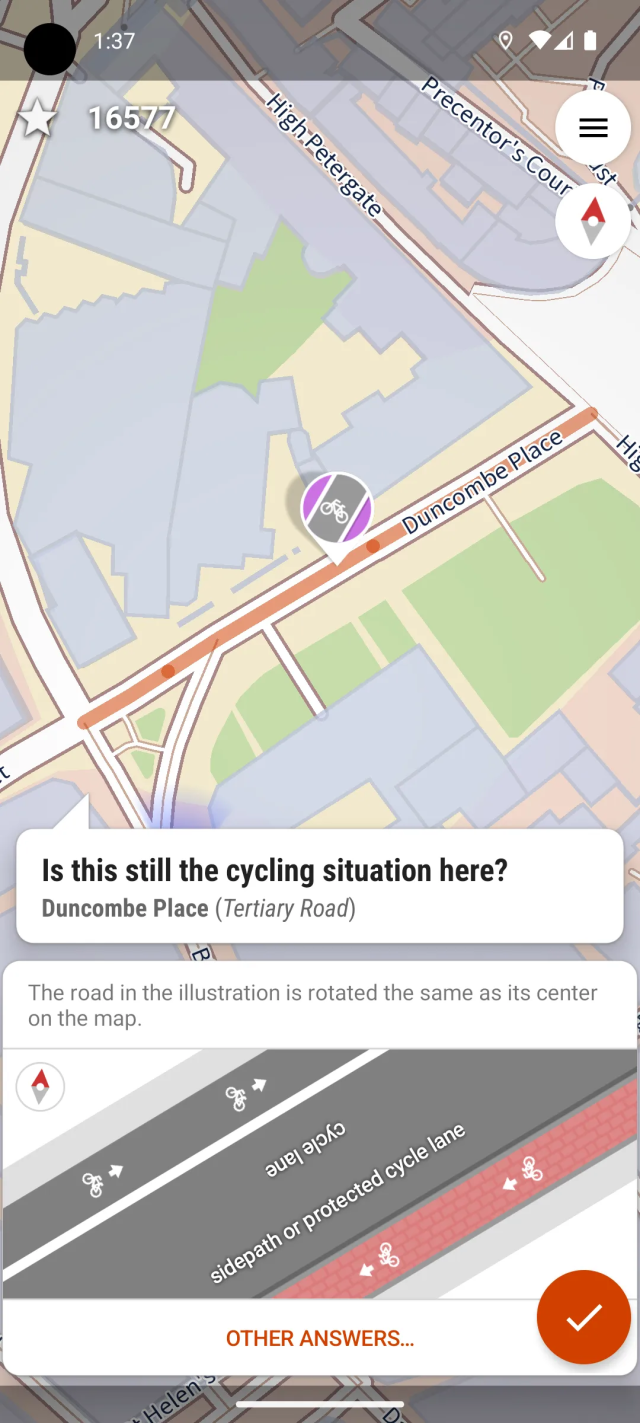

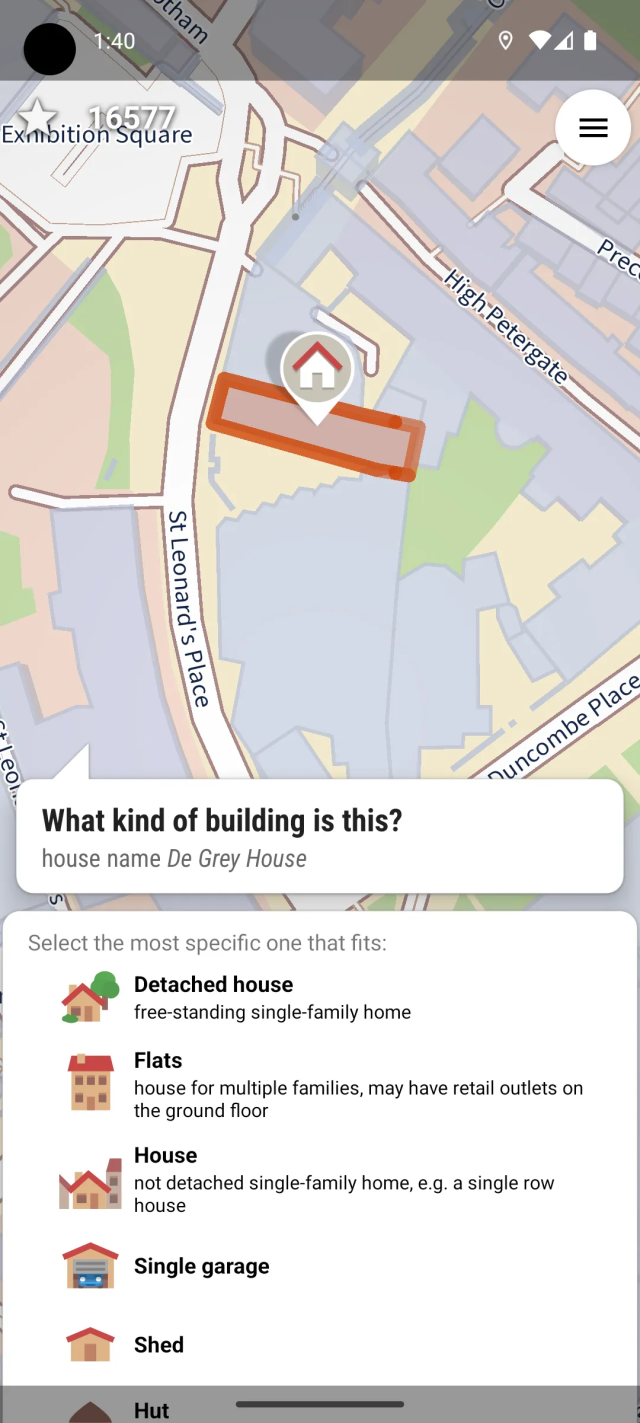

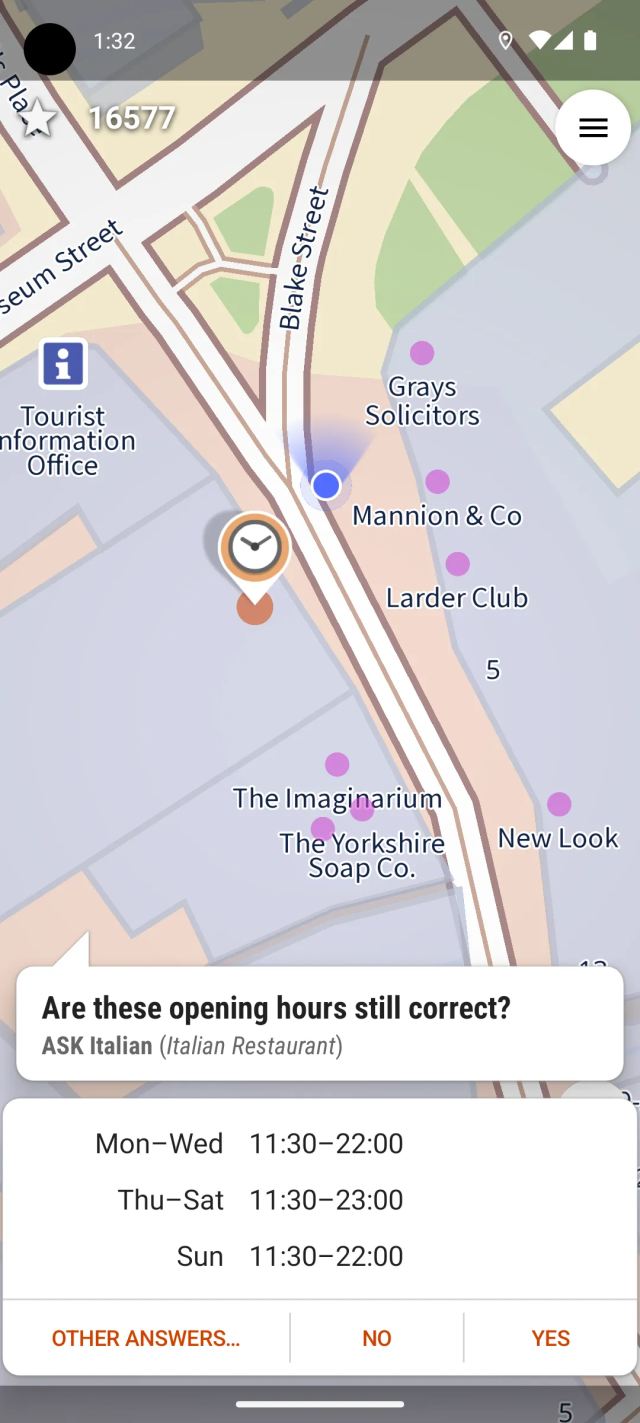

StreetComplete is a really fun and accessible way to contribute to OpenStreetMap from an Android device - walk around in your local neighbourhood (or anywhere really) and solve 'quests' by answering questions about the things around you!

You don't need to learn anything about mapping conventions, or infrastructure, or about the more complex mapping tools that exist for OpenStreetMap. The app will explain everything to you that you need to know, when you need to know it, and ask easily understandable questions with reference pictures for the answers.

The only setup needed is to make an OSM account and log into it from the app, so that it can upload your answers - and you can also do that at any later time, after trying out the app without an account for a while first. You can just install it and go outside right away!

The app doesn't need any cellular internet connection; it can work offline and synchronize your answers once you reach a place with e.g. Wi-Fi. It's also quite performant, and should run well even on lower-end phones. There is also a 'multiplayer' option that lets you split up in teams and each tackle different quests in the area.

Part of the reason I’m so against LLM coding assistants is that they seem able to do nothing but suck all of the joy and fulfilment out of work.

Like, for any task that requires skill, there’s some pleasure in using that skill and succeeding at it. Why would I want to automate it?

It’s like the thing of “automating my hobbies so I can spend more time doing the laundry”.

And obviously, yes, I do realise that my job doesn’t simply exist for the sake of me having fun. I don’t actually expect that to be a persuasive argument from a business perspective. But what makes the whole thing completely inexplicable to me is that this automation doesn’t even do a good job or speed things up at all.

All the code I’ve seen from LLMs has been total garbage. At best, it’s eventually come out with something as good as a human could do, except no faster, and through a process that’s far more annoying and unpleasant than simply doing the work manually.

There’s literally nothing in it for anybody (except the LLM companies, who get a subscription fee for doing something you could have easily done yourself, and who, when you complain that the results are bad, invent nonsense like “you have to have multiple LLMs all checking each other’s output” to wring more money out of you).

- Accessible Output (50%, 1 vote)

- Tolk (0%, 0 votes)

- SRAL (50%, 1 vote)

- Other (Specify In a Reply) (0%, 0 votes)

@jscholes Yes. And spoon-feeding text to a screen reader should not be what developers primarily think of when they think of accessibility. The actual GUI should be made accessible through platform APIs. I know you know this, of course; I'm just stating it for the benefit of anyone watching who is outside the cottage industry of apps developed specifically for and usually by blind people.

GitHub - AccessKit/the-intercept: Proof of concept for integrating screen reader accessibility into Unity; fork of Inkle's game The Intercept



@fastfinge There are different use cases with various constraints.

I used the word "primary" on purpose in my first post. Right now, screen reader libraries are the first and often only thing reached for by developers of these abstraction libraries.

I would like to see a better abstraction library that keeps the ease of use while supporting multiple techniques. It could opt for the most reliable and user-friendly pattern by default, based on information gleaned from its operating environment and some gentle hints from the developer.

E.g. you don't supply a window handle? There's no window for a live region so it falls back to SR libs. @matt @tunmi13
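A rough sketch of that selection logic, purely illustrative: the names `Technique` and `choose_technique` are made up here and do not come from any existing library, and a real implementation would look at far more of its environment than one handle.

```python
from enum import Enum
from typing import Optional


class Technique(Enum):
    LIVE_REGION = "live_region"              # announce via a platform accessibility API
    SCREEN_READER_LIB = "screen_reader_lib"  # talk to the screen reader directly


def choose_technique(
    window_handle: Optional[int],
    hint: Optional[Technique] = None,
) -> Technique:
    """Pick an output technique from the environment plus a developer hint."""
    if window_handle is None:
        # No window means nowhere to host a live region, so fall back.
        return Technique.SCREEN_READER_LIB
    if hint is not None:
        # A gentle hint from the developer wins when it is feasible.
        return hint
    # Default to the option the user can control from their screen reader.
    return Technique.LIVE_REGION


print(choose_technique(None))
print(choose_technique(0x1234))
print(choose_technique(0x1234, hint=Technique.SCREEN_READER_LIB))
```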

@matt User control is one reason live regions are a better idea than screen reader libs at least, because I can turn them off.

If an app has decided to shove stuff down the NVDA Controller Client DLL, there's nothing I can do about it. Other than maybe deleting the DLL or restricting access to it, at which point it's anybody's guess whether the app in question will go silent, crash, or switch to SAPI.

Of course, this raises the question of why screen readers don't have a permissions system. @fastfinge @tunmi13


nbcnews.com/politics/supreme-c…

Supreme Court rejects long-shot effort to overturn same-sex marriage ruling

WASHINGTON — The Supreme Court on Monday turned away a long-shot attempt to overturn the landmark 2015 ruling that legalized same-sex marriage nationwide. Lawrence Hurley (NBC News)

Every day discovering something brand new!

I really need a ruler and pencils to spear time at job... hold my beer.

edit: hold my ruler 😂

edit1: bartender asks me why I'm laughing.. u know Lexaurin uhm Mastodon and so on 😂

edit2: I didn't know how many people are taking them btw

edit3: it wasn't one time this season to customers asking me for...

Ouch. I was really happy to discover the LibreOffice Impress Remote app for iOS, but the last update was in 2014 and it doesn't run on current iOS :(

Not reflected in the current docs it seems, ping @libreoffice

Any iOS developers with spare time wanting to get it up to speed? :)

Long-term archiving with ODF: a future-proof strategy - The Document Foundation Blog

Digital documents in proprietary formats often become inaccessible within a few years due to undocumented changes to the XML schema that are intentionally employed for lock-in purposes. Italo Vignoli (The Document Foundation)
