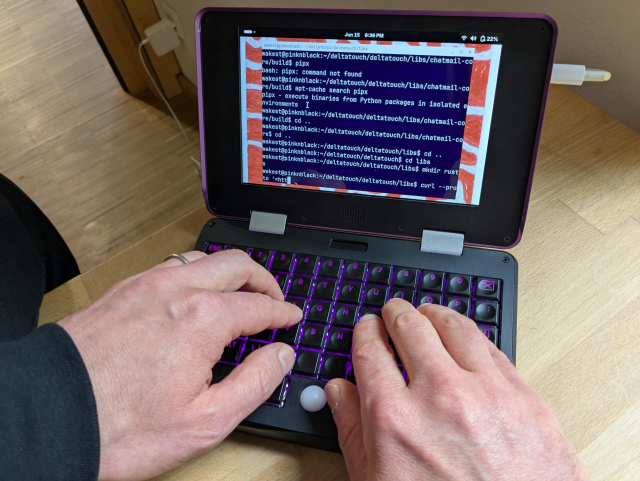

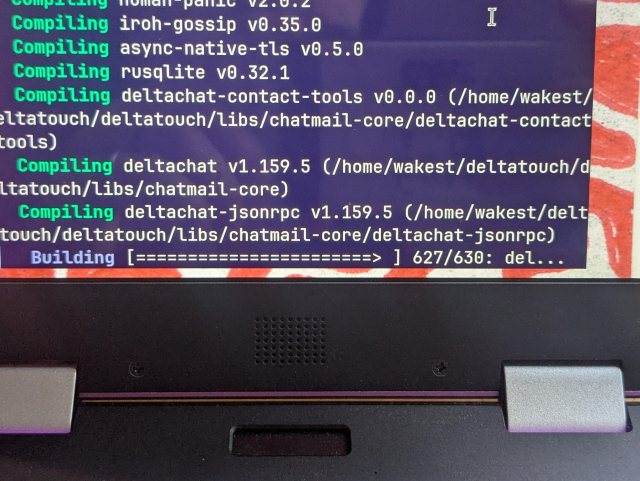

Ghostty will soon be visible to accessibility tooling on macOS. This is increasingly important in the world of AI since this means that tools like ChatGPT, Claude, etc. can now read Ghostty's screen contents too (with permission). This feature is also relatively rare!

Only the built-in Terminal, iTerm2, and Warp also support this. Kitty, Alacritty, and others are invisible to a11y on macOS.

Beyond the screen contents, Ghostty's structure (such as its splits) is also visible, navigable, and resizable, as can be seen in the attached video. Ghostty and iTerm2 are the only terminals that expose structure too (splits, tabs).

This is a v1 implementation that is primarily read-only. I plan on iterating and improving a11y interactions in future versions.

miki

in reply to Mitchell Hashimoto

Mitchell Hashimoto

in reply to miki

@miki I’m not totally sure. Right now our behavior mostly mimics iTerm. It’s admittedly not optimized for humans right now and more for AI (I note that in the PR), but the foundational work is all the same. It’s just all the last mile to get to the next spot.

My thinking, though, was to break the terminal down into more accessibility groups, e.g. per command and then per N lines of output, and to notify the AX framework when new data exists.
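As a rough illustration of that grouping idea (not Ghostty's implementation), here is a minimal Python sketch. It assumes command boundaries are already known, for example from shell integration. `GroupedOutput`, `begin_command`, `append_output`, and the `notify` callback are hypothetical names, with `notify` standing in for whatever call would tell the AX framework that new data exists.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class CommandGroup:
    """One accessibility group per command, holding chunks of output lines."""
    command: str
    chunks: List[List[str]] = field(default_factory=list)


class GroupedOutput:
    """Hypothetical model: groups per command, then per N lines of output."""

    def __init__(self, lines_per_chunk: int, notify: Callable[[int, int], None]):
        if lines_per_chunk < 1:
            raise ValueError("lines_per_chunk must be at least 1")
        self.lines_per_chunk = lines_per_chunk
        # notify(group_index, chunk_index) is a stand-in for an AX notification.
        self.notify = notify
        self.groups: List[CommandGroup] = []

    def begin_command(self, command: str) -> None:
        # Start a new top-level group for this command.
        self.groups.append(CommandGroup(command))

    def append_output(self, line: str) -> None:
        # Output seen before any command goes into an unnamed group.
        if not self.groups:
            self.begin_command("")
        group = self.groups[-1]
        # Open a new chunk when there is none yet or the last one is full.
        if not group.chunks or len(group.chunks[-1]) >= self.lines_per_chunk:
            group.chunks.append([])
        group.chunks[-1].append(line)
        # Report which group and chunk received new data.
        self.notify(len(self.groups) - 1, len(group.chunks) - 1)
```

With `lines_per_chunk=2`, three lines of output after one command would land in two chunks, and `notify` would fire once per appended line. A real implementation would probably batch those notifications rather than send one per line.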

miki

in reply to Mitchell Hashimoto

Autoreading on Mac is tough (see that writeup I made on GitHub at one point) because VoiceOver has no built-in queuing mechanism, and you really need one in a terminal. This is not a problem on any other platform; most screen readers let you decide whether new speech should interrupt all existing utterances or queue up.

This means you basically need to handle speech yourself (macOS and all other platforms have APIs for that, and they're decent), but that opens another can of worms, as you then need a way to manage speech preferences somehow.
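To make the "interrupt or queue" choice concrete, here is a minimal Python sketch of a self-managed speech queue. It is only an illustration under stated assumptions: `SpeechQueue` is a hypothetical name, and `speak` and `stop` are placeholder callables standing in for a platform speech API, not real macOS calls.

```python
from collections import deque
from typing import Callable, Deque, Optional


class SpeechQueue:
    """Hypothetical queue that lets new speech either interrupt or wait."""

    def __init__(self, speak: Callable[[str], None], stop: Callable[[], None]):
        self.speak = speak    # placeholder: starts speaking one utterance
        self.stop = stop      # placeholder: cuts off the current utterance
        self.pending: Deque[str] = deque()
        self.current: Optional[str] = None

    def say(self, text: str, interrupt: bool = False) -> None:
        if interrupt:
            # Drop everything waiting and cut off whatever is being spoken.
            self.pending.clear()
            if self.current is not None:
                self.stop()
                self.current = None
        self.pending.append(text)
        self._pump()

    def finished(self) -> None:
        # Call this when the backend reports the current utterance ended.
        self.current = None
        self._pump()

    def _pump(self) -> None:
        # Start the next utterance only when nothing is being spoken.
        if self.current is None and self.pending:
            self.current = self.pending.popleft()
            self.speak(self.current)
```

Terminal output would typically go through `say(line)` so nothing gets lost, while something like a keystroke echo could use `say(text, interrupt=True)`. The speech preferences problem (voice, rate, and so on) is untouched by this sketch.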