As much as I loathe LLM "AI" built from hoards of stolen data, machine learning "AI" has become terrifically useful.

This past week I had 10 audio recorders set out in the forest and nearby grassland, all recording non-stop from Monday afternoon to Friday morning, during our recent university field ecology trip.

Today I downloaded all the files to a hard drive (156 GB of data) and then set my little M1 MacBook Air to work, using the offline BirdNet desktop app to identify all of the birds in the recordings.

It took most of the day, and now I have a 42,284 row spreadsheet of birds detected.

It really feels like magic.

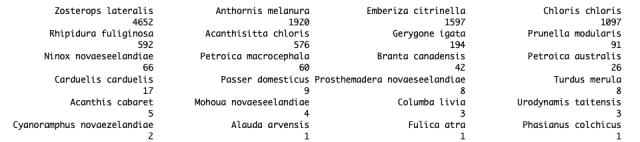

Here's a quick sorted list of all the bird detections whose species IDs have a confidence score >0.9.
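For anyone wanting to reproduce that kind of list from their own export, here's a minimal Python sketch using only the standard library. It assumes the spreadsheet has columns named `Common name` and `Confidence` (an assumption about the BirdNet export, so check your own header row), and the sample rows are made up for illustration, not data from this trip.

```python
import csv
import io
from collections import Counter

# Made-up sample rows for illustration only. The column names "Common name"
# and "Confidence" are an ASSUMPTION about the export format; match them to
# the header of your own spreadsheet.
SAMPLE_CSV = """Common name,Confidence,Recorder
Tui,0.95,R01
Bellbird,0.88,R01
Tui,0.97,R02
Fantail,0.92,R03
Bellbird,0.93,R04
Tui,0.41,R05
"""


def confident_species_counts(csv_text, threshold=0.9):
    """Count detections per species, keeping only rows above the threshold."""
    reader = csv.DictReader(io.StringIO(csv_text))
    counts = Counter(
        row["Common name"]
        for row in reader
        if float(row["Confidence"]) > threshold
    )
    # most_common() sorts species by detection count, highest first
    return counts.most_common()


if __name__ == "__main__":
    for species, n in confident_species_counts(SAMPLE_CSV):
        print(f"{species}: {n}")
```

To run it on a real file, read the file's text with `open(path, newline="").read()` and pass that in place of `SAMPLE_CSV`.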

Together with the students in the course, we'll later compare how the birds have changed since we started doing this in 2020, and how the birds in the grassland differ from those in the forest.
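One simple way to make that kind of comparison is to compare the species lists directly. The sketch below uses Jaccard similarity (shared species divided by all species seen), which is my own choice of metric for illustration; the post doesn't say which method the course will use. The species lists are hypothetical, not results from the recorders.

```python
def compare_species_sets(site_a, site_b):
    """Return Jaccard similarity plus the species found at only one site."""
    a, b = set(site_a), set(site_b)
    union = a | b
    # Two empty lists are treated as identical (similarity 1.0)
    jaccard = len(a & b) / len(union) if union else 1.0
    return {
        "jaccard": jaccard,
        "only_a": sorted(a - b),
        "only_b": sorted(b - a),
    }


if __name__ == "__main__":
    # Hypothetical species lists, NOT real results from the field trip
    forest = ["Tui", "Bellbird", "Fantail", "Grey warbler"]
    grassland = ["Skylark", "Fantail", "Yellowhammer", "Tui"]
    print(compare_species_sets(forest, grassland))
```

The same function works for the year-to-year question: pass the 2020 species list and this year's list instead of the two habitats. A presence/absence metric like this ignores how often each species was detected, which is a deliberate simplification.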

#birds #BirdNet #ecology #nz #MachineLearning


miki

in reply to Jon Sullivan

LLMs (combined with ASR, i.e. automatic speech recognition, models) are basically this, but for text.

LLMs let you turn large and messy corpora of text, audio and images into a neat CSV, which you can then analyze in any data science tool of choice. You can't just Excel your way through "how likely are right-wing newspapers to mention the race of a rapist, depending on what that race is." Not without a team of grad students doing the gruntwork, at least. LLMs automate away all of that gruntwork, letting you answer research questions much faster.

You can't use plain ChatGPT for this; you need specialized tools.

Sure, LLMs hallucinate, just like human annotators do. This is why you (as a human) need to go through a random sample of your corpus and estimate your hallucination rate. That's still much faster than annotating the entire corpus.
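A minimal Python sketch of that spot-check: draw a reproducible random sample, have a human mark which labels are wrong, then report the observed error rate with a 95% Wilson score interval so you can see how much the estimate could move with a small sample. The corpus, sample size and error count below are hypothetical placeholders, and the Wilson interval is just one reasonable choice of confidence interval.

```python
import math
import random


def sample_for_review(rows, k, seed=0):
    """Draw a reproducible random sample of k rows for manual checking."""
    rng = random.Random(seed)
    return rng.sample(rows, k)


def error_rate_with_ci(n_errors, n_checked, z=1.96):
    """Observed error rate plus an approximate 95% Wilson score interval."""
    if n_checked <= 0:
        raise ValueError("need at least one checked item")
    p = n_errors / n_checked
    denom = 1 + z**2 / n_checked
    centre = (p + z**2 / (2 * n_checked)) / denom
    half = z * math.sqrt(p * (1 - p) / n_checked + z**2 / (4 * n_checked**2)) / denom
    # Clamp to [0, 1] to absorb floating-point rounding at the edges
    return p, max(0.0, centre - half), min(1.0, centre + half)


if __name__ == "__main__":
    corpus = [f"doc-{i}" for i in range(10_000)]  # hypothetical stand-in corpus
    to_check = sample_for_review(corpus, 200)
    # Hypothetical: a human reviewer found 9 wrong labels among the 200 checked
    rate, low, high = error_rate_with_ci(9, len(to_check))
    print(f"error rate {rate:.1%} (95% CI {low:.1%} to {high:.1%})")
```

The same check applies to the BirdNet output: listen to a random sample of detections above your confidence threshold and count the misidentifications.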

Jon Sullivan

in reply to miki