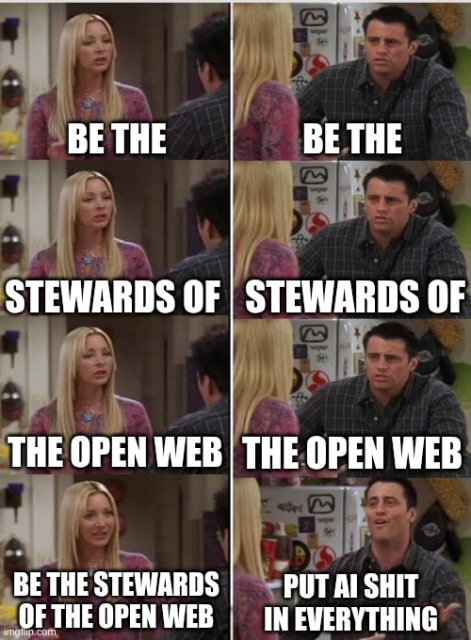

When scientists try to gauge the intelligence of, e.g., birds, they look at behaviours such as tool use, tool making, use of language, and self-awareness (the mirror test). And all of this should happen unprompted.

It seems to me that it would make sense to apply the same criteria to artificial intelligence.

Clearly, LLMs and generative AI "agents" fail the mirror test. There is no "self" there for them to be aware of. They also don't make or use tools of their own accord. In fact, they do nothing of their own accord.

Do they use language? They parse language and generate matching language, but "use" means to communicate with intent, and there is no intent there.


Kelly Sapergia

in reply to Alan Young

Become A Broadcaster - The Global Voice Internet Radio

Alan Young

in reply to Kelly Sapergia