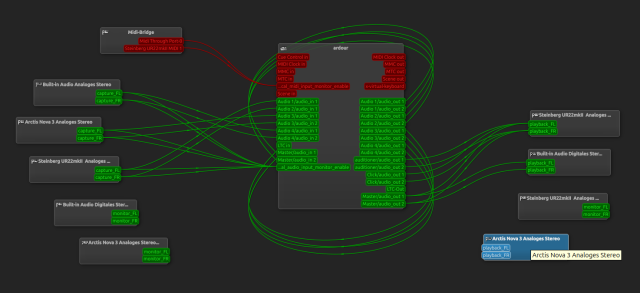

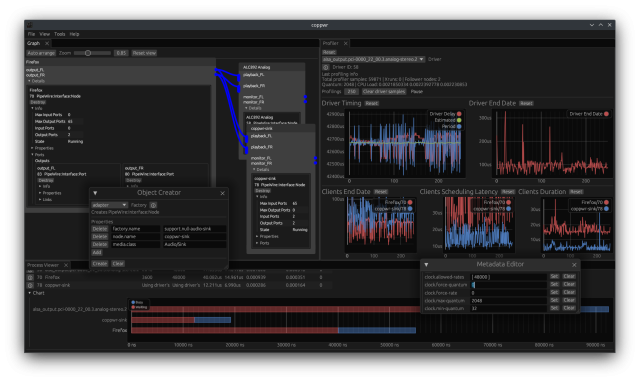

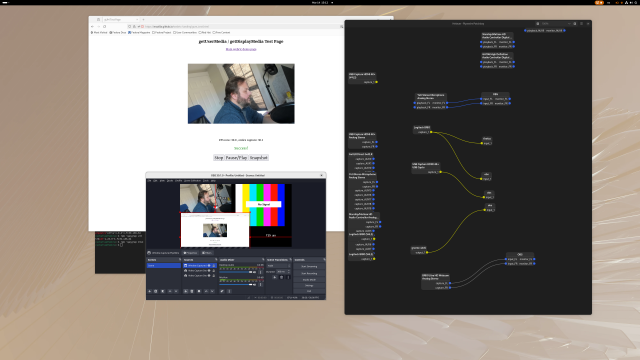

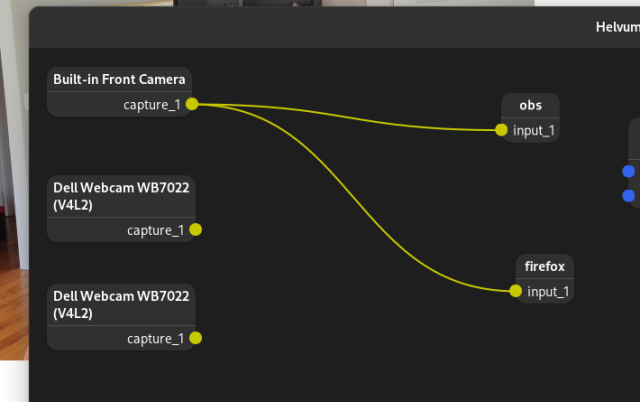

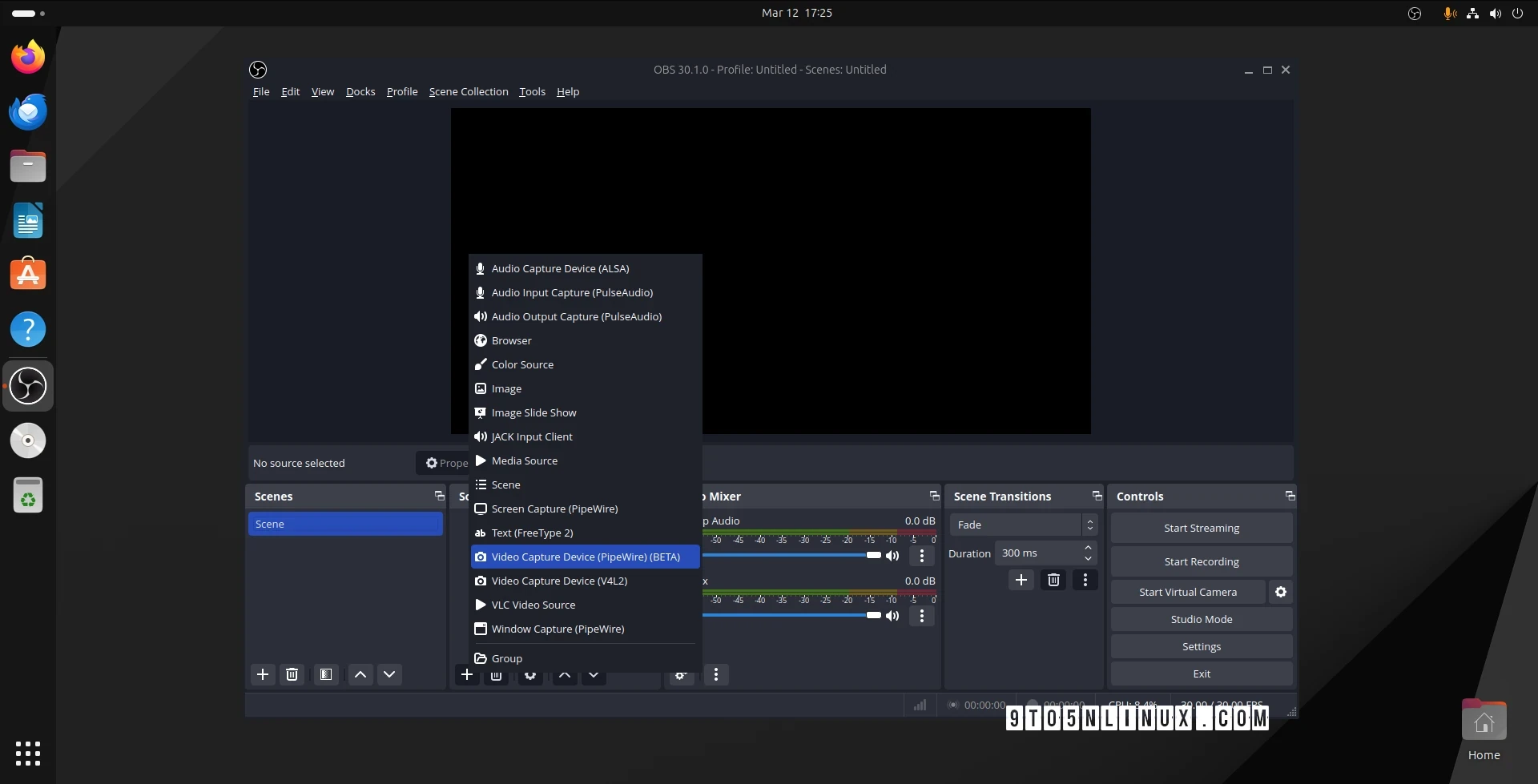

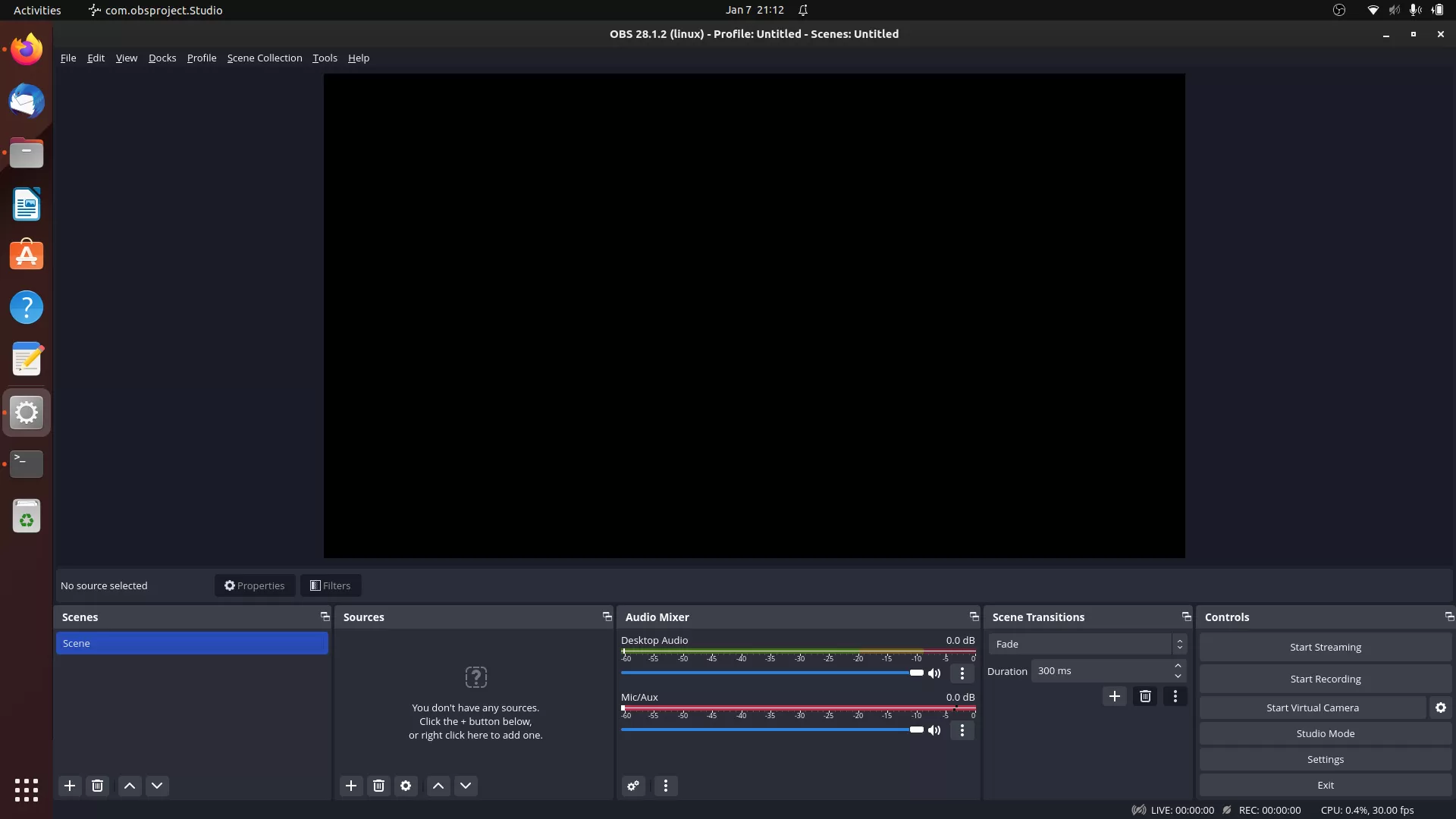

Dear #linuxaudio friends, what are your thoughts on my dream of a new #pipewire / #wireplumber app? 🤔

amadeuspaulussen.com/blog/2026…

A (new?) PipeWire & WirePlumber app… – Amadeus Paulussen

PipeWire and WirePlumber deserve better. 😝

amadeuspaulussen.com