I didn’t get a chance to ask the question during the panel, so I’ll ask it out loud here :)

I tend to think about behaviour on social media with a two-level mental model: the interaction level and the collective level.

If you want to moderate interaction-level content, then that’s things like “is this image problematic” or “is this person harassing someone else” and so on. That seems to be what the DSA mostly addresses? Which is great! We need more protection there.

But the collective level is something I’m curious about: what happens when attacks are designed to be “harmless” or “not violating” at the interaction level, yet deeply harmful at the collective level? How does one defend against a synthetic threat carefully designed to target a vulnerable collective in a way that no interaction-level defense is really capable of handling?

Do we have regulations around this yet? Is it a problem we’re trying to address at the governance level? (I know the tooling is nearly nonexistent, but it is slowly starting to emerge. It would be encouraging to see similar momentum in governance.)

#FOSDEM #FOSDEM2026 fosstodon.org/@Gina/1159951072…

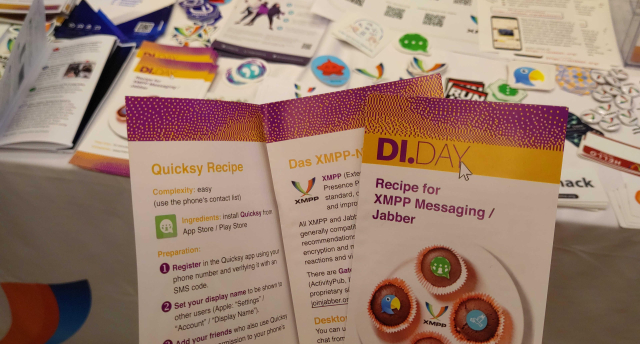

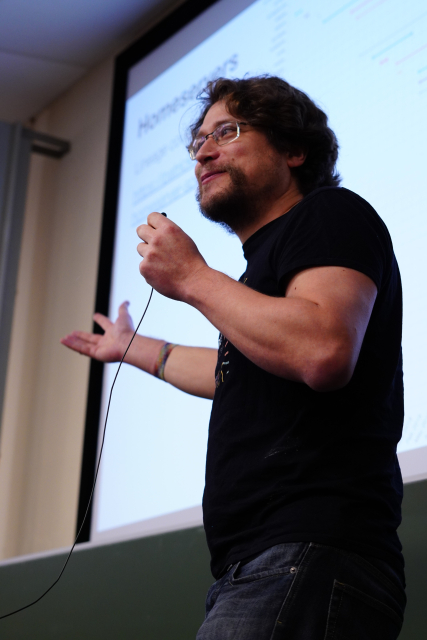

Together with @Mastodon@mastodon.social director @mellifluousbox@mastodon.social , Open Networks advocate @samvie@chaos.social and EU MP @alexandrageese@bonn.social , @jmaris@eupolicy.

Gina (Fosstodon)