Items tagged with: nvda

Our In-Process blog is back for 2026! And we've got a bumper issue to start with:

We highlight the "Switching from Jaws to NVDA" guide, and we have tips for running NVDA on a Mac and creating a new NVDA Shortcut.

We hear from a user on their achievements in 2025, and we want to hear yours! And finally, a quick tip from @JenMsft here on Mastodon on Using Clip with the command line!

All available now at: nvaccess.org/post/in-process-2…

#NVDA #NVDAsr #ScreenReader #Accessibility #Blog #News

Ping @simon since I know you're running a bunch.

#NVDA #NVDASR #Blind #NVDARemote

Release Firefox and performance fixes · fastfinge/unspoken-ng

Everything in this one is thanks to akj. If you use Firefox, you should upgrade right away to prevent errors. Full changes: Fix COM threading violations when navigating Firefox content by extracti... (GitHub)

UPDATE. Tested without Doug's scripts and with #NVDA, same results. Random messages, especially missed calls, are read as "Press left and right arrows...".

#Accessibility #Blind

My guide for #discord with #nvda isn't finished yet, but I've laid out the new structure for where I want it to go. It's got more headings and lists to make jumping around with a screen reader easier. Sections that need more work are tagged in the unrendered markdown.

The guide focuses on the structure of the Discord desktop and web interface from the perspective of someone who knows screen reader basics and can move around a website but struggles with very complex web interfaces.

There's some useful info in there already. It'll take me a while to write up all the features, but if you know how something works and want to help fill it in or make a correction, feel free to make a PR on the rework branch!

github.com/PepperTheVixen/Disc…

GitHub - PepperTheVixen/Discord-With-NVDA at rework

This is a guide for using Discord on Windows with NVDA. (GitHub)

*Update. RIM may work with screen readers other than JAWS.*

(Note: You will need to skip down several headings to find the beginning of the article)

I can't comment on this from a business perspective. But I do know that I have never been able to connect remotely to any of my computers, either from Windows to Windows or from Android or iOS to Windows, with any commercially made program for the purpose. The only one that works for me is NVDA Remote, which works on all three platforms, with Windows and the NVDA screen reader being a requirement. The limitation, however, is that I can't hear the sound on the controlled computer, nor can I transfer files between it and the controller. Fortunately, I don't really need these features and am happy just being able to control my machines at all. For those who do need them, RIM allows this, but only for users of JAWS (which costs several hundred dollars, whereas NVDA is free), and the last time I checked, it was also very expensive for an individual user who doesn't require it for work purposes. I'm also not sure if it is cross-platform, so it may only work with Windows. If anyone knows of a free, accessible solution that works with NVDA, please let me know.

Remote Incident Manager (RIM)

at-newswire.com/remote-inciden…

#accessibility #Android #blind #computers #IOS #JAWS #NVDA #RemoteAccess #Talkback #technology #Voiceover #Windows

Remote Incident Manager (RIM) From Pneuma Solutions - AT-Newswire

Here, Aaron Di Blasi describes a familiar failure mode in enterprise remote support: organizations deploy “best-in-class” remote tools that work fine for most staff, but quietly shut out blind and low-vision technicians and users because the experien… Aaron Di Blasi (AT-Newswire)

This seems to have been a one-off, a fluke if you will, but have a look at the #NVDA error I got when trying to do insert q then telling it to restart NVDA.

Error dialog Couldn't terminate existing NVDA process, abandoning start: Exception: [WinError 5] Access is denied.

Hey y’all, hope you’re doing well. Quick question: I’m trying to use Tweezcake on my Windows computer. I can open it and hear sound effects, but NVDA doesn’t seem to detect the actual window at all. Has anyone run into this or have any suggestions?

Thanks so much.

#Accessibility #Blind #JAWS #NVDA #Windows

GitHub - gozaltech/espeak-ng-sapi: Sapi5 interface for espeak-ng text-to-speech synthesizer

Release v6: bugfix in automatic language switching · fastfinge/eloquence_64

This is just a bugfix release to ensure Auto Language switching works for languages with dialects. Previously, NVDA could not switch to using any language with a dialect during auto language switch... (GitHub)
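
For readers wondering what "languages with dialects" can mean for automatic language switching, here is a minimal sketch of one common approach: normalize the requested tag, try an exact match, then fall back to the base language or a sibling dialect. This is not the add-on's actual code. The function names and the fallback order are assumptions made purely for illustration.

```python
from typing import Iterable, Optional


def normalize(tag: str) -> str:
    """Lower-case a language tag and use '_' as separator ('en-GB' -> 'en_gb')."""
    return tag.strip().lower().replace("-", "_")


def pick_voice_language(requested: str, supported: Iterable[str]) -> Optional[str]:
    """Return the best supported language for `requested`, or None.

    Hypothetical preference order: exact match, then the bare base
    language, then the first supported dialect sharing that base.
    """
    # Map normalized tags back to the spelling the synthesizer reported.
    by_norm = {normalize(s): s for s in supported}
    wanted = normalize(requested)
    if wanted in by_norm:
        return by_norm[wanted]
    base = wanted.split("_", 1)[0]
    if base in by_norm:
        return by_norm[base]
    for norm, original in by_norm.items():
        if norm.split("_", 1)[0] == base:
            return original
    return None
```

With this sketch, a request for `en-GB` against a synthesizer that only offers `en_US` and `fr_FR` falls back to `en_US` rather than failing to switch at all.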

I picked up a Keychron K10 Max from Amazon and got it yesterday, and I don't think I ever want to go back to a membrane keyboard again.

For context: before this, I was using a Logitech Ergo K860. It's a split, membrane keyboard that a lot of people like for ergonomics, and it did help in some ways — but for me, it was also limiting. My hands don't stay neatly parked in one position, and the enforced split often worked against how I naturally move. It also wasn't rechargeable, and the large built-in wrist rest (which I know some people love) mostly became a dirt-collecting obstacle that I had to work around.

Another big factor for me is that I often work from bed. That means my keyboard isn't sitting on a perfectly stable desk. It's on a tray, my lap, or bedding that shifts as I move.

The Logitech Ergo K860 is very light, which sounds nice on paper, but in practice it meant the keyboard was easy to knock around, slide out of position, or tilt unexpectedly. Combined with the split layout, that meant I was constantly re-orienting myself instead of just typing.

The Keychron, by contrast, is noticeably heavier — and that turns out to be a feature. It stays put. It doesn’t drift when my hands move. It feels planted in a way that reduces both physical effort and mental overhead. I don't have to think about where the keyboard is; I can just use it.

For a bed-based workflow, that stability matters more than I realized.

With chronic pain, hand fatigue, and accessibility needs, keyboards are not a neutral tool. They shape how long I can work, how accurately I can type, and how much energy I spend compensating instead of thinking.

This new keyboard feels solid, responsive, and predictable in a way I didn't realize I was missing. The keys register cleanly without requiring force, and the feedback is clear without being harsh. I'm not fighting the keyboard anymore. It's just doing what I ask.

What surprised me even more is how much better the software side feels from an accessibility perspective. Keychron's Launcher and its use of QMK are far more usable for me than Logitech Options Plus ever was. Being able to work with something that’s web-based, text-oriented, and closer to open standards makes a huge difference as a screen reader user. I can reason about what the keyboard is doing instead of wrestling with a visually dense, mouse-centric interface.

That matters a lot. When your primary interface to the computer is the keyboard, both the hardware and the configuration tools need to cooperate with you.

I know mechanical keyboards aren't new, but this is my first one, and I finally understand why people say they'll never go back. For me, this isn't about aesthetics or trends. It's about having a tool that respects my body and my access needs and lets me focus on the work itself.

I'm really grateful I was able to get this, and I'm genuinely excited to keep dialing it in. Sometimes the right piece of hardware, paired with software that doesn’t fight you, doesn’t just improve comfort. It quietly expands what feels possible.

#Accessibility #DisabledTech #AssistiveTechnology

#ScreenReader #NVDA

#MechanicalKeyboards #Keychron

@accessibility @disability @spoonies @mastoblind

GitHub - fastfinge/kittentts-nvda: proof of concept kittentts synthDriver for NVDA

Release v1: Initial Release · fastfinge/supertonic-nvda

The first release. It's still kind of janky, but this serves as a proof of concept. (GitHub)

GitHub - fastfinge/supertonic-nvda: supertonic for nvda

Announcing iceoryx2 v0.8.0

Announcing the release of iceoryx2 version 0.8.0. Christian Eltzschig (ekxide)

While NV Access enjoy some well earned rest, we may be delayed in replying for the next few weeks. Please do refer to the tips and links in our last In-Process: nvaccess.org/post/in-process-1…

A very Merry Christmas if you celebrate & a safe & happy New Year to all!

#NVDA #NVDAsr #Christmas #Christmas2025 #NewYear #HappyNewYear

I'm so glad NVDA, Narrator, Orca, VoiceOver, TDSR, and Fenrir exist. I'm so, so glad JAWS is not the only desktop screen reader, and that FS did not pursue JAWS for Mac.

I'm so glad that NVDA not only supports addons, but shows them off with the addon store! I'm so glad that NVDA is so inescapably popular that even big corporations support them, like Google Docs and Microsoft Office and countless others that say in their documentation that NVDA is supported.

Found what looks like an #NVDA bug, or possibly a #notepad++ bug. An & followed by a space will read a fake command key suggestion when changing focus to the notepad++ window.

NVDA: 2025.3.2.

Notepad++: 8.8.8.

Minimum reproduction:

Open notepad++; if a file is already open, use ctrl-n for a new one.

Type the following string in the file: "& ".

Alt-tab in and out. On the focus, NVDA will announce "alt+space".

I just wanted to read before bed.

#a11y #bug
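
One plausible explanation for the "alt+space" announcement in the report above is the Win32 labelling convention in which a single `&` marks the following character as an access key, while `&&` is a literal ampersand. The sketch below shows how text containing "& " would yield "alt+space" if it were parsed that way. It is an assumption about the mechanism, not NVDA's or Notepad++'s actual code, and both function names are hypothetical.

```python
from typing import Optional


def access_key_from_label(label: str) -> Optional[str]:
    """Return the character marked as an access key in a Win32-style label.

    A single '&' marks the next character as the access key;
    '&&' is an escaped literal ampersand and marks nothing.
    """
    i = 0
    while i < len(label) - 1:
        if label[i] == "&":
            if label[i + 1] == "&":
                i += 2  # escaped ampersand: skip both characters
                continue
            return label[i + 1]
        i += 1
    return None


def describe_shortcut(label: str) -> Optional[str]:
    """Format the access key the way a screen reader might announce it."""
    key = access_key_from_label(label)
    if key is None:
        return None
    name = "space" if key == " " else key.lower()
    return f"alt+{name}"


print(describe_shortcut("& "))     # the string from the reproduction steps
print(describe_shortcut("&File"))  # a conventional menu label
```

If this is what is happening, the fix would be to avoid treating editor or window text as a label with access-key markup, which is the kind of detail worth including in a bug report to either project.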

Welcome to our last In-Process blog post for 2025! nvaccess.org/post/in-process-1…

In this edition:

- Holiday Season Trading Hours

- NVDA with Digitech Reece

- World Blindness Summit Presentation

- Finding Things

Do check it out, have a wonderful break if you are having time off or a holiday, spend time with loved ones, and we look forward to catching up with everyone in 2026!

#NVDA #NVDAsr #ScreenReader #Accessibility #Christmas #NewYear

I normally use my computer with a regular qwerty keyboard. But since it's a seven-inch Toughpad, I wanted to try it with my Orbit Writer, due to the size. I bought it to use with my iPhone, which it does very well (better than with Android). I read the manual and even saved the HID keyboard commands so that I could refer to them quickly. But I don't understand a few things.

1. It is missing the Windows key. Due to this, I can't get to the start menu as I usually do. I also can't get to the desktop in the regular way.

2. I created a desktop shortcut which I put on the start menu, but I can't type ctrl+escape at the same time, so that method of getting to the start menu is also blocked, meaning that I still can't get to the desktop.

3. I can't type NVDA+F11 or F12 for the system tray or the time and date, respectively. I was able to create new commands for both under Input Gestures. But I also tried NVDA+1 for key identification, with both caps lock and insert, and that didn't work either. Fortunately, I was able to create another gesture to get into the NVDA menu.

4. On a qwerty keyboard, I can type alt+tab to switch between windows. If I hold the alt key, I can also continue pressing tab to switch between more than two windows. But with the Orbit Writer, while the command works, it seems to only work for two windows, i.e. I can't hold alt and continue pressing tab.

Am I missing something here or is this a half-implemented system? How can they say it works with Windows when basic commands can't even be performed? If there are ways around these problems, please let me know.

#accessibility #blind #braille #NVDA #OrbitWriter #technology #Windows

* eloquence: audio ducking now works thanks to akj: github.com/fastfinge/eloquence_64/releases/tag/v5

* unspoken-ng: if you use this addon, you also need to update, or audio ducking will remain broken, because someone (glares at himself) didn't quite understand NVWavePlayer: github.com/fastfinge/unspoken-ng/releases/tag/v1.0.3

Release v1.0.3: fix audio ducking · fastfinge/unspoken-ng

This release fixes audio ducking: we now call sync and idle on NVWavePlayer. (GitHub)

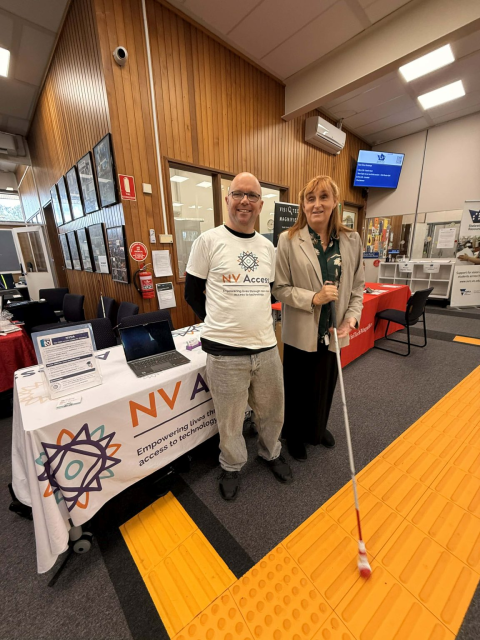

The World #Blind Union General Assembly and World #Blindness Summit in São Paulo, #Brazil in September was an amazing opportunity not only to talk about NVDA, but to give a presentation on the amazing MOVEMENT behind the world's favourite free #screenreader! We have two videos of the presentation and a full transcript for you, complete with an audience-initiated chant of "#NVDA NVDA NVDA!" at the end!

#Blind #Accessibility

Edit: While I'm still interested in any responses to this question, I did not go with Nextcloud in the end. I prefer solutions that do only one thing and do it properly and accessibly, and while Seafile isn't perfect, it's surely better for my needs. Thanks for the interactions with the post nevertheless.

Release v4: A Lot Of Good Volunteers · fastfinge/eloquence_64

As with any open source project, 64-bit eloquence wouldn't be possible without the community behind it. This release brings us the following: fixes to indexes, and further code simplification (Tha... (GitHub)

If you saw our recent post on here about Reece, the young boy from Australia who got the ABC to put audio description on "Behind the News", then you'll love this follow-up video he made especially about NVDA. It is THE feel-good piece to watch this holiday season!

nvaccess.org/post/NVDA-with-Di…

#NVDA #NVDAsr #ScreenReader #Accessibility #MakingADifference #Empowering #GoodNews #Technology #FeelGood

Happy International Day of Persons with Disabilities!

This year's #IDPwD theme is: "Fostering #disability #inclusive societies for advancing social progress"

What better way than ensuring everyone has access to, and awareness of, #Accessibility of technology?

* #NVDA is FREE for anyone

* Right now, get 10% off certification to show your skills (& cheap training materials to get you to that point)

un.org/en/observances/day-of-p…

#DisabilityAwareness #Inclusion #IDPwD2025 #NVDA #Accessibility

International Day of Persons with Disabilities | United Nations

The International Day of Disabled Persons aims to promote the rights and well-being of persons with disabilities in all spheres of society and development. (United Nations)

Reposting. Slots available.

After a short break, I’m returning to accessibility training services.

I provide one-on-one training for blind and visually impaired users across multiple platforms. My teaching is practical and goal-driven: not just commands, but confidence, independence, and efficient workflows that carry into daily life, study, and work.

I cover:

iOS: VoiceOver gestures, rotor navigation, Braille displays, Safari, text editing, Mail and Calendars, Shortcuts, and making the most of iOS apps for productivity, communication, and entertainment.

macOS: VoiceOver from basics to advanced, Trackpad Commander, Safari and Mail, iWork and Microsoft Office, file management, Terminal, audio tools, and system upkeep.

Windows: NVDA and JAWS from beginner to advanced. Training includes Microsoft Office, Outlook, Teams, Zoom, web browsing, customizing screen readers, handling less accessible apps, and scripting basics.

Android: TalkBack gestures, the built-in Braille keyboard and Braille display support, text editing, app accessibility, privacy and security settings, and everyday phone and tablet use.

Linux: Orca and Speakup, console navigation, package management, distro setup, customizing desktops, and accessibility under Wayland.

Concrete goals I can help you achieve:

Set up a new phone, tablet, or computer

Send and manage email independently

Browse the web safely and efficiently

Work with documents, spreadsheets, and presentations

Manage files and cloud storage

Use social media accessibly

Work with Braille displays and keyboards

Install and configure accessible software across platforms

Troubleshoot accessibility issues and build reliable workflows

Make the most of AI in a useful, productive way

Grow from beginner skills to advanced, efficient daily use

I bring years of lived experience as a blind user of these systems. I teach not only what manuals say, but the real-world shortcuts, workarounds, and problem-solving skills that make technology practical and enjoyable.

Remote training is available worldwide.

Pricing: fair and flexible — contact me for a quote. Discounts available for multi-session packages and ongoing weekly training.

Contact:

UK: 07447 931232

US: 772-766-7331

If these don’t work for you, email me at aaron.graham.hewitt@gmail.com

If you, or someone you know, could benefit from personalized accessibility training, I’d be glad to help.

#Accessibility #Blind #VisuallyImpaired #ScreenReaders #JAWS #NVDA #VoiceOver #TalkBack #Braille #AssistiveTechnology #DigitalInclusion #InclusiveTech #LinuxAccessibility #WindowsAccessibility #iOSAccessibility #AndroidAccessibility #MacAccessibility #Orca #ATTraining #TechTraining #AccessibleTech

This week's In-Process is a bumper one - but don't miss it because as well as all the news on NVDA 2025.3.2, more on configuration profiles and the NEW Web interface for the add-on store, we've also got a BLACK FRIDAY SALE!

All that and EVEN MORE at: nvaccess.org/post/in-process-2…

#NVDA #NVDAsr #ScreenReader #BlackFriday #BlackFriday2025 #Sale #Accessibility