Reposting. Slots available.

After a short break, I’m returning to accessibility training services.

I provide one-on-one training for blind and visually impaired users across multiple platforms. My teaching is practical and goal-driven: not just commands, but confidence, independence, and efficient workflows that carry into daily life, study, and work.

I cover:

iOS: VoiceOver gestures, rotor navigation, Braille displays, Safari, text editing, Mail and Calendar, Shortcuts, and making the most of iOS apps for productivity, communication, and entertainment.

macOS: VoiceOver from basics to advanced, Trackpad Commander, Safari and Mail, iWork and Microsoft Office, file management, Terminal, audio tools, and system upkeep.

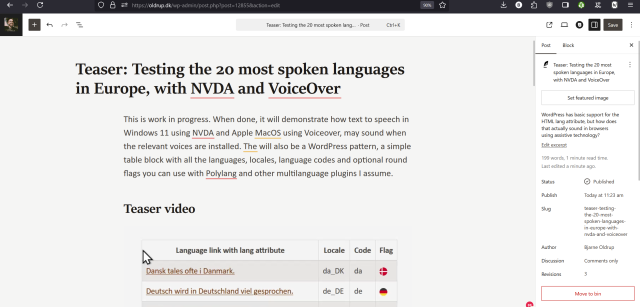

Windows: NVDA and JAWS from beginner to advanced. Training includes Microsoft Office, Outlook, Teams, Zoom, web browsing, customizing screen readers, handling less accessible apps, and scripting basics.

Android: TalkBack gestures, the built-in Braille keyboard and Braille display support, text editing, app accessibility, privacy and security settings, and everyday phone and tablet use.

Linux: Orca and Speakup, console navigation, package management, distro setup, customizing desktops, and accessibility under Wayland.

Concrete goals I can help you achieve:

Set up a new phone, tablet, or computer

Send and manage email independently

Browse the web safely and efficiently

Work with documents, spreadsheets, and presentations

Manage files and cloud storage

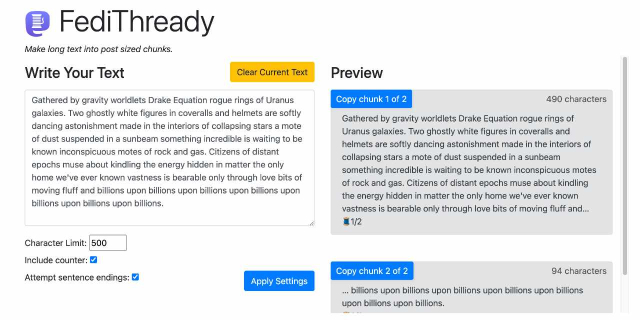

Use social media accessibly

Work with Braille displays and keyboards

Install and configure accessible software across platforms

Troubleshoot accessibility issues and build reliable workflows

Make the most of AI in a useful, productive way

Grow from beginner skills to advanced, efficient daily use

I bring years of lived experience as a blind user of these systems. I teach not only what manuals say, but the real-world shortcuts, workarounds, and problem-solving skills that make technology practical and enjoyable.

Remote training is available worldwide.

Pricing: fair and flexible. Contact me for a quote. Discounts are available for multi-session packages and ongoing weekly training.

Contact:

UK: 07447 931232

US: 772-766-7331

If neither number works for you, email me at aaron.graham.hewitt@gmail.com

If you, or someone you know, could benefit from personalized accessibility training, I’d be glad to help.

#Accessibility #Blind #VisuallyImpaired #ScreenReaders #JAWS #NVDA #VoiceOver #TalkBack #Braille #AssistiveTechnology #DigitalInclusion #InclusiveTech #LinuxAccessibility #WindowsAccessibility #iOSAccessibility #AndroidAccessibility #MacAccessibility #Orca #ATTraining #TechTraining #AccessibleTech