Items tagged with: Accessibility

P.S. What I boosted: @somegregariousdude posted that he will delete his #Matrix account and all links to it. Our shared experience is that there is no accessible client out there. So whenever you suggest "Ditch Slack for Matrix", think twice.

I tried making a Neocities account today. I was stopped dead in my tracks by an unlabeled field with protected input in the signup form. I thought it was the password field, but there's a labeled password field with protected input a little further down, so I have no idea what that first one is. Just below that is an hCaptcha challenge that forces me to give my email address to hCaptcha, because hCaptcha can't be bothered to implement actual accessibility.

For your reference, here's the first beautiful sentence of this article:

"ffGE ARrj XRejm XAj bZgui cB R EXZgl, Rmi mjji jrjg-DmygjREDmI XgRDmDmI iRXR XZ DlkgZrj."

I don't know the technology behind this BS, but screen readers see it as scrambled text, as if it were encrypted or something like that. I guess it's some font juggling (ChatGPT suggested it's glyph scrambling, where Unicode values are mapped to random letters; I'll trust her on this because I really don't care about the tech behind it), but if you have even a tiny grain of empathy, never, ever, ever do this, for goodness' sake.
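Out of curiosity: the quoted sentence decodes by hand, and it is consistent with a simple one-to-one letter substitution. The HTML stores one set of characters, and (presumably) a custom font draws a different letter for each of them. A screen reader speaks the stored characters, not the drawn shapes, which is why it announces gibberish. The Python sketch below assumes exactly that scheme; its table covers only the 22 letters recovered from this one sentence, it is not the site's real mapping, and the function names are hypothetical.

```python
# Substitution table recovered by hand from the quoted sentence only.
# Assumption: the site uses a fixed one-to-one mapping; letters that do not
# occur in that sentence are simply missing from this table.
CIPHER = "fGEARrjXembZguicBlyDIk"  # characters stored in the page
PLAIN = "LMshavetknworldbymcigp"   # letters the custom font draws for them

DECODE = str.maketrans(CIPHER, PLAIN)
ENCODE = str.maketrans(PLAIN, CIPHER)


def what_sighted_readers_see(stored: str) -> str:
    """Text as drawn on screen by the (assumed) remapped font."""
    return stored.translate(DECODE)


def what_the_page_stores(visible: str) -> str:
    """Characters actually in the DOM, which a screen reader speaks."""
    return visible.translate(ENCODE)


scrambled = ("ffGE ARrj XRejm XAj bZgui cB R EXZgl, Rmi mjji "
             "jrjg-DmygjREDmI XgRDmDmI iRXR XZ DlkgZrj.")
print(what_sighted_readers_see(scrambled))
# prints: LLMs have taken the world by a storm, and need ever-increasing training data to improve.
```

Spaces and punctuation pass through `str.translate` untouched, so sighted readers get a normal-looking sentence while assistive technology gets the stored letters.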

tilschuenemann.de/projects/sac…

#Accessibility #Blindness #Empathy #BadPractices #Web #Text

Visited Winchester Christmas Market this afternoon. It was very busy and crowded but mostly nice to just see the pretty lights and have a massively overpriced but very tasty sausage roll.

However, it was pretty disappointing to see that I couldn’t get to 90% of the stalls.

I get that it’s on historic ground and there are limits to what they can do but I feel like they could’ve done a bit more to make it accessible.

Most of the stalls were up a large step. I could see what they had from a distance but I couldn’t get to most of them to view things up close.

Vojtux - Accessible Linux distro which is almost pure Fedora

Vojtěch Polášek has put together a technical preview of a version of Fedora that should work well for blind or visually impaired users. While his goal is explicitly to see these improvements and changes become part of Fedora itself, for now you can use this implementation based on the Fedora MATE spin. :)

➡️ freelists.org/post/orca/Announ…

#Vojtux #Fedora #Accessibility #a11y #Linux #OpenSource

Not just unsurprising, but utterly predictable.

“Trump administration says sign language services ‘intrude’ on Trump’s ability to control his image”

apnews.com/article/american-si…

Though he has a point: having an ASL interpreter could make it look like he cares about, well, anything other than himself. That’s not his brand.

About fucking time.

“Judge orders White House to use American Sign Language interpreters at briefings”

npr.org/2025/11/05/nx-s1-55991…

Sadly, it does not include VP briefings. The White House is supposed to update the court on progress by the end of the week. Curious how it will jerk the court around.

Hey, I've been under distress lately due to personal circumstances outside my control. I can't find a permanent job that allows me to function, I'm not eligible for government benefits, my grant proposals were rejected, and paid internships are quite difficult to find. Essentially, I have no stable monthly income to sustain myself.

Nowadays, I work mostly on accessibility throughout GNOME as a volunteer, improving the experience of people with disabilities. I helped make the majority of GNOME Calendar accessible with a keyboard and screen reader (still an ongoing effort with !564 and !598), an effort no company ever contributed to financially. These merge requests take literally thousands of hours to research, develop, and test, which would have been enough to sustain myself for a couple of years had I been working for a salary.

I would really appreciate any kind of donation, especially recurring ones that bump my monthly income.

These donations will allow me to sustain myself while continuing to work on accessibility throughout GNOME, potentially even 'crowdfunding' development without doing it on behalf of the Foundation.

I accept donations through the following platforms:

- “TheEvilSkeleton” on Liberapay: liberapay.com/TheEvilSkeleton/… (free and open-source platform)

- “TheEvilSkeleton” on Ko-fi: ko-fi.com/theevilskeleton

- “TheEvilSkeleton” on GitHub Sponsors: github.com/sponsors/TheEvilSke…

Boosts welcome and appreciated.

#Accessibility #a11y #GNOME #GNOMECalendar #MutualAidRequest #MutualAid

GNOME Calendar: A New Era of Accessibility Achieved in 90 Days

There is no calendaring app that I love more than GNOME Calendar. The design is slick, it works extremely well, it is touchpad friendly, and best of all, the community around it is just full of wonderful developers, designers, and contributors worth … (TheEvilSkeleton)

And finally done with the alt-texts for my trip to Japan. In total we are talking about 597 images, only a very small number of which received empty alt texts (because they showed largely the same thing as the previous one from a slightly different angle).

I don’t claim that they are perfect, but they are there! If you don’t like one, send me a better one.

I’m not yet done with the site, I still want to add more regular text, and more notably some videos, but this is a huge step in any case…

I’m proud of it, but my real takeaway is that doing it like this is simply not practical for a personal site: I easily spent 20 times as much time on writing alt texts as I spent on selecting the images, and if anything that is a very low estimate. It simply doesn't scale for posting a large number of vacation pictures. And this set is very much a selection: we have many more images, not all of them good or even that different from what is there now, but still…

I was originally planning to also put pictures from my previous trip up, but the way this went I don’t think I’ll do that, at least not with fully manually generated alt texts.

I see a lot of complaints about AI-generated alt texts, but I’m honestly not sure they are worse than much of what I came up with in this case, especially once I do a second pass on them to fix any mistakes. That's the same way I use DeepL for translations: it’s not fully reliable, but it's good enough that I can focus on the few issues it has and skip all the tedium that translating everything manually brings with it. And in a lot of cases DeepL does a better job than I would have done: my English is pretty good, but I am not a native speaker, and sometimes that shows in my vocabulary, which is not as comprehensive as it is in German. Maybe the same approach would be fine for #accessibility? I’d be interested in opinions and proposals.

And I am familiar with all the benefits of alt texts, but since that page is first and foremost an image site, where not being able to see the images removes most of the reasons to use it, it also makes me wonder how many people will actually benefit from it, compared to the effort I put in…

I normally use my computer with a regular qwerty keyboard. But since it's a seven-inch Toughpad, I wanted to try it with my Orbit Writer, due to the size. I bought it to use with my iPhone, which it does very well (better than with Android). I read the manual and even saved the HID keyboard commands so that I could refer to them quickly. But I don't understand a few things.

1. It is missing the Windows key. Because of this, I can't get to the Start menu as I usually do, and I also can't get to the desktop the regular way.

2. I created a desktop shortcut, which I put on the Start menu, but I can't press ctrl+escape together, so that method of getting to the Start menu is also blocked, meaning that I still can't get to the desktop.

3. I can't type NVDA+F11 or F12 for the system tray or the time and date, respectively. I was able to create new commands for both under Input Gestures. But I also tried NVDA+1 for key identification, with both caps lock and insert, and that didn't work either. Fortunately, I was able to create another gesture to get into the NVDA menu.

4. On a qwerty keyboard, I can press alt+tab to switch between windows. If I hold the alt key, I can also keep pressing tab to switch between more than two windows. But with the Orbit Writer, while the command works, it only seems to work for two windows, i.e. I can't hold alt and keep pressing tab.

Am I missing something here or is this a half-implemented system? How can they say it works with Windows when basic commands can't even be performed? If there are ways around these problems, please let me know.

#accessibility #blind #braille #NVDA #OrbitWriter #technology #Windows

I should also note that Bluesky has really great hashtag alt text settings, but I have not come across anyone who uses them yet. It makes me wonder why that caught on over here and not there. Is there just something about Mastodon users that makes them more inclined to care about #Accessibility?

Disability advocates denounce cuts into Exo station accessibility

Montreal’s main commuter rail and bus system, Exo, is dropping long-term projects and investments amid a financial crunch – and accessibility advocates are pushing back. Erika Morris (CTVNews)

This morning when I was in the car I had an issue with my Pixel Buds. I wanted to google it, but this is where the issue appeared:

As you may know, typing on Android devices with Talkback is already quite slow, even using direct touch. But imagine that even when your finger is on the correct letter, lifting it enters a neighboring one instead, roughly every third letter. With even a little imagination, you can tell this would be very bad. And let me tell you, it is in fact as crappy as it sounds.

I sincerely hope this gets fixed as soon as possible. I first noticed it two or three days ago. If it isn't fixed, my phone simply isn't usable and I will need to use voice messages and dictation for everything.

#accessibility #a11y #google #android #pixel #googlepixel #talkback #blind

Please boost for reach:

On the subject of Linux phone accessibility, the developer of AT-SPI wrote:

There's a long-standing issue that Joanie filed against at-spi2-core for touch screen support:

gitlab.gnome.org/GNOME/at-spi2…

And I haven't taken the time to figure out how best to handle this. I'm not sure if evdev would do what we need, or we might want to work with the maintainers of mutter, KWin, etc. to create a protocol for intercepting/manipulating gestures. We would also need code that can detect gestures from touch screen presses. NVDA has code that we might be able to use as a model.

All of this is theoretically on my list of things to do. Of course, help would be welcome if anyone else was able to take it on.

end quote

For blind people to switch to Linux on phones, we need to be able to use the touch screen. If anyone can help with this, it would bring blind people one step closer to being able to create our own accessibility and our own environments, in the ways that help us most. NVDA is a great example of how that goes.
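The missing piece the quote mentions, code that turns raw touch-screen presses into gestures, can be illustrated with a toy classifier. This Python sketch is not taken from AT-SPI, evdev, or NVDA; the thresholds, the names, and the restriction to single-finger taps and swipes are all assumptions. A real implementation would also need multi-finger gestures, double taps, and a way to hand recognized gestures to Orca.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical thresholds; real values would need tuning per device and DPI.
MIN_SWIPE_DISTANCE = 50.0  # pixels
MAX_GESTURE_TIME = 0.5     # seconds


@dataclass
class TouchPoint:
    x: float  # pixels from the left edge
    y: float  # pixels from the top edge (grows downward)
    t: float  # timestamp in seconds


def classify(down: TouchPoint, up: TouchPoint) -> Optional[str]:
    """Classify one finger's touch-down/touch-up pair as a tap or a swipe.

    Returns None for slow movements, which a screen reader might treat as
    exploration rather than as a command gesture.
    """
    dx = up.x - down.x
    dy = up.y - down.y
    if up.t - down.t > MAX_GESTURE_TIME:
        return None
    if max(abs(dx), abs(dy)) < MIN_SWIPE_DISTANCE:
        return "tap"
    # The dominant axis decides the direction.
    if abs(dx) >= abs(dy):
        return "swipe_right" if dx > 0 else "swipe_left"
    return "swipe_down" if dy > 0 else "swipe_up"


print(classify(TouchPoint(10, 300, 0.00), TouchPoint(220, 310, 0.18)))  # swipe_right
```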

#accessibility #blind #foss #linux #phones #pinephone

Implement support for touch/gestures (#148) · Issues · GNOME / at-spi2-core · GitLab

Orca currently is able to register grabs for, be notified of, and consume keyboard events of interest. It should be able to do the same for touch/gestures. (GitLab)

Seriously, people, if you're going to put work into making your blog look beautiful, you HAVE GOT to see what it looks like with a large minimum font size (not zoom). At my default 20pt minimum font size, this blog gives me roughly 4 words per line.

I'm not going to read the damn article like this, no matter how good the words are. Yes, I can switch to "Reader View" but I shouldn't have to.

From the Blinux list, my thoughts below the quote:

"Hello to all.

This is partly a (re)introduction and partly some good news (for some of you, definitely not all).

This message is actually sent to two lists, as I have no clue how big the overlap between their memberships is (I suppose huge, but not complete), so sorry for the duplicate, those of you who are on both lists.

And now, with the disclaimers out of the way... I started to be interested in Linux, say ten or twelve years ago (don't remember exactly). Through a set of happy coincidences, I managed to get a job at Red Hat in the desktop team, and because accessibility was an important thing at the time of the reorg, a tools and accessibility team was actually created. It is quite a small team, and the majority of people on it do not have work time to do anything with accessibility (we're maintaining some of the GNOME apps, we do RHEL packaging, testing, etc.).

However, I am working on accessibility-related things every day, so don't be afraid to ask questions, mention your issues, and such.

Personally, in terms of distributions, I use GNOME on Arch (personal laptop) and Fedora (work laptop), so I have the most experience with these setups, but I have definitely used MATE, and tried KDE for some time as well.

And, that's it for now. "

So: a small team that barely has time to do what the team is about. This is really sad. This is what people with disabilities get from one of the biggest Linux companies there is.

#accessibility #blind #linux #foss #gnu

The World #Blind Union General Assembly and World #Blindness Summit in São Paulo, #Brazil in September was an amazing opportunity not only to talk about NVDA, but to give a presentation on the amazing MOVEMENT behind the world's favourite free #screenreader! We have two videos of the presentation and a full transcript for you, complete with an audience-initiated chant of "#NVDA NVDA NVDA!" at the end!

I recently started using Book Notification (booknotification.com).

For me, the use case is simple: add a list of #authors I like, and they send me a weekly email with upcoming releases by those authors and a link to a personalised #book release calendar page.

I read a lot of #books, so I like what they're doing. Unfortunately there is a catch. Two, actually.

Firstly, they use an #accessibility overlay (Ally by Elementor). While it isn't personally bothersome for me (particularly given the fact that my primary interest is in the content they email out), I do fundamentally object to using a service that perpetuates the use of these tools however unmaliciously.

Secondly, the first time I visited the site after signing up, I'd been signed out and the backend had apparently forgotten my password. Services not handling authentication data and user sessions well is a development pet peeve of mine.

All of this to say: if this sounds appealing to you and you're not put off by the highlighted caveat and hiccup, it does seem to work well. But I'm also interested in recommendations of other services like this that will let me keep track of new book releases by authors I enjoy. Do you know of any?

(Please no Goodreads, Amazon, Audible, or the like.)

#Blind #Accessibility

Today's Web Design Update: groups.google.com/a/d.umn.edu/…

Featuring @michaelharshbarger, @aardrian, @SteveFaulkner, @deconspray, @mgifford, @sarajw, @matuzo, @j9t, @mehm8128, @Jayhoffmann, @Meyerweb, @bkardell, @adactio, @slightlyoff, and more.

Subscribe info: d.umn.edu/itss/training/online…

#Accessibility #A11y #WebDesign

Web Design References: Webdev Newsletter

Web Design References: News and info about web design and development. The site advocates accessibility, usability, web standards and many related topics. (www.d.umn.edu)

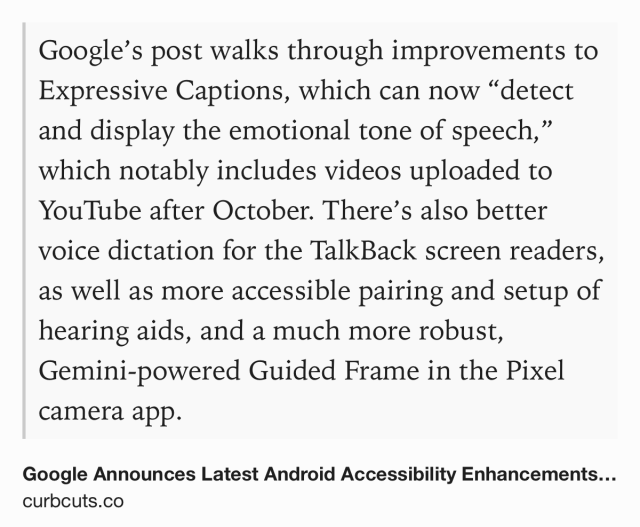

Google Announces Latest Android Accessibility Enhancements in new blog post

Google commemorated this year’s International Day of Persons with Disabilities earlier this week by publishing a blog post in which the company detailed “7 ways we’re making Android more accessible.” Steven Aquino (Curb Cuts)

The similarities between #accessibility and #cybersecurity continue to amaze me.

These are both areas of standards, recommendations, legal precedents, etc. that SHOULD, in theory, give companies the tools, as well as the incentive, to do what their clients/customers need them to do.

Is that the reality? Sadly, often, no it isn't. I just saw a renowned voice in the cybersecurity space repost a post that essentially states that if the infraction is cheaper/more lucrative than the fine, companies will choose the fine every single time. Frustrating, innit?

So what if I say the exact same thing is true for #accessibility and that the majority of GUI-based cybersecurity tools are not #accessible enough to be productive?

Here's a callout to #cybersecurity vendors. Are you going to fix this, or be a hypocrite? :) #tech

In some web platform circles, there's a push for a move away from walled garden distribution methods like the App Store and native apps in general.

Broadly, I think progressive web apps (PWAs) should be easier to distribute and install. And Apple should definitely do more to make those a viable option on iOS.

Unfortunately, purely web-based apps are currently not able to take advantage of several important #accessibility features, primary among them the ability to add actions to elements (e.g. for the VoiceOver rotor).

Well, I just retested, and it's still not possible to enable accessibility via screen readers for GTK4 apps on Windows (so, Gajim). The issue is still open, but I don't know whether anyone will manage to fix it in the coming months/years.

If you happen to be a dev and you like GTK, the issue is here:

gitlab.gnome.org/GNOME/gtk/-/i…

I can test patches if needed.

#a11y #accessibility #dev #gtk

Crash with accesskit-c on Windows (#7653) · Issues · GNOME / gtk · GitLab

Example program: Using gtk 4.18.6, accesskit-c 0.15.1 Downstream report: https://github.com/msys2/MINGW-packages/issues/24812 Clicking on the button crashes. To... (GitLab)

A severe #accessibility issue I've seen very few people talking about is the widespread adoption (in my country at least) of touch-only card payment terminals with no physical number buttons.

Not only do these devices offer no tactile affordances, but the on-screen numbers move around to limit the chances of a customer's PIN number being captured by bad actors. In turn, this makes it impossible to create any kind of physical overlay (which itself would be a hacky solution at best).
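To see why a physical overlay can't help, here is a minimal Python sketch of a randomized PIN pad. The 4x3 layout and the fixed "Cancel"/"OK" keys are assumptions for illustration, not any vendor's actual design; the only point is that the digit under a given spot changes between transactions, so a fixed tactile template can't map positions to numbers.

```python
import random


def shuffled_keypad(rng: random.Random) -> list[list[str]]:
    """Return a hypothetical 4x3 PIN pad with the ten digits in random slots.

    The "Cancel" and "OK" keys stay fixed; only digit positions move.
    """
    digits = list("0123456789")
    rng.shuffle(digits)
    return [
        digits[0:3],
        digits[3:6],
        digits[6:9],
        ["Cancel", digits[9], "OK"],
    ]


def position_of(digit: str, layout: list[list[str]]) -> tuple[int, int]:
    """(row, column) of a digit, i.e. where a finger would have to go."""
    for r, row in enumerate(layout):
        for c, key in enumerate(row):
            if key == digit:
                return (r, c)
    raise ValueError(f"{digit!r} is not on the keypad")


# A seeded generator keeps the demo repeatable; a real terminal would use a
# cryptographically secure source such as random.SystemRandom().
rng = random.Random(2024)
for _ in range(3):
    print(position_of("5", shuffled_keypad(rng)))
```

Each printed pair is where "5" ended up on that transaction's screen; without feedback from the device, a blind user has no way to know which one applies.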

When faced with such a terminal, blind people have only a few ways to proceed:

* Switch to cash (if they have it);

* refuse to pay via inaccessible means;

* ask the seller to split the transaction into several to facilitate multiple contactless payments (assuming contactless is available);

* switch to something like Apple Pay (again assuming availability); or

* hand over their PIN to a complete stranger.

Not one of these solutions is without problems.

If you're #blind, have you encountered this situation, and if so how did you deal with it? It's not uncommon for me to run into it several times per day.

Why do you think this is not being talked about or acted on by blindness organisations? Is it the case that it disproportionately affects people in countries where alternative payment technology (like paying via a smart watch) is slower to roll out and economically out of reach for residents?

@jonathan859 I take it back, this is possible (for anyone looking for this, `setTaskDescription`). This doesn't change the visible title, but it does change what Talkback reads out.

So, what would be useful to use here?

Depending on the state I thought one of:

- Replying to (name)

- Quoting (name)

- Writing post

(Talkback automatically puts "Pachli" before each of those)

Anything I've missed?
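The choice of label boils down to a small mapping from compose state to text. On Android the resulting string would be passed to `setTaskDescription`; the Python below models only the choice itself. `ComposeMode`, `task_description`, and the fallback to "Writing post" when no name is available are hypothetical, not Pachli's actual code.

```python
from enum import Enum, auto
from typing import Optional


class ComposeMode(Enum):
    NEW_POST = auto()
    REPLY = auto()
    QUOTE = auto()


def task_description(mode: ComposeMode, other_user: Optional[str] = None) -> str:
    """Label for the compose screen; Talkback adds "Pachli" in front of it."""
    if mode is ComposeMode.REPLY and other_user:
        return f"Replying to {other_user}"
    if mode is ComposeMode.QUOTE and other_user:
        return f"Quoting {other_user}"
    # New posts, or a reply/quote whose author is unknown, fall back to this.
    return "Writing post"


print(task_description(ComposeMode.REPLY, "@jonathan859"))  # Replying to @jonathan859
```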

#Blind #Accessibility

Sorry, no. *First* you propose an accessible alternative, or at least do something toward one, like sitting down with accessibility experts or obtaining grants and financing, so that I can at least see I'm not forgotten, and *then* we'll think about what to do next. #Accessibility