Items tagged with: blind

Do-It-Blind (DIB) Meeting

Learn using BigBlueButton, the trusted open-source web conferencing solution that enables seamless virtual collaboration and online learning experiences. bbb.metalab.at

As it is the start of the month I would like to invite my fellow #Blind, #DeafBlind, and #VisuallyImpaired people, along with their family, and friends, to #OurBlind. OurBlind comprises the #Discord, #Lemmy, and #Reddit communities operated by the staff of the r/Blind subreddit, as well as those who have joined since the creation of the Discord in 2022, and Lemmy in 2023. We have members from all over the world, and of all ages, hearing and vision levels, and are a welcoming and safe space for Our #LGBTQIA and #neurodiverse friends. Our general community guidelines, and the links to reach our platforms can be found on our website.

Blog post about the #Dolby #Accessibility Solution

When Good Intentions Meet Poor Design at the movie theatre: Why Nothing About Us Without Us Still Matters

Please boost for reach, for any OnePlus users or staff:

I wrote a review of my OnePlus 13 on OnePlus' community site. If you're a member there, please like it to show support for the accessibility issues I brought up. I'd really like to get these fixed, since this is a powerful phone that's got Google's TalkBack, not Samsung's moldy fork, and is great overall, besides the accessibility issues. I'd love to be able to recommend this phone as an all-around great phone for blind people.

community.oneplus.com/thread/1… [A review of the OnePlus 13, from a blind person's perspective]

#android #OnePlus #OnePlus13 #blind #accessibility #Braille

OnePlus Community

Introducing our new OnePlus Community experience, with a completely revamped structure, built from the ground-up. community.oneplus.com

Step into the ring and take control! Welcome to GRUNT - The Wrestling Game, the ultimate text-based wrestling simulation where you are the booker, the promoter, and the wrestling god of your very own universe!

Tired of wrestling games that limit your imagination? GRUNT WRESTLING hands you the keys to the entire promotion. From creating a rookie in a local gym to running a multi-division global powerhouse, every choice is yours. Witness epic five-star matches, shocking betrayals, and the crowning of new legends—all brought to life through a detailed, moment-to-moment simulation engine.

This isn't just a game; it's a sandbox for your wrestling stories. Build your dream roster, book the matches, and watch the chaos unfold!

nmercer1111.itch.io/grunt-the-wrestling-game

sightlessscribbles.com/posts/2…

via @WeirdWriter

AI Audiobooks are not accessible, Sightless Scribbles

A fabulously gay blind author. sightlessscribbles.com

***Attention! If you miss MSN/Windows Live Messenger, AIM, and/or ICQ, this is for you! If you use a screen reader and want a 100% accessible messenger client, this is also for you.***

This works with Windows XP through 11, and I'm logged into it as I write! It's called Escargot, and it revives Windows Live/MSN Messenger. This is the original software, but it has been patched so that it connects to the escargot.chat server and not the Microsoft one. It is 100% free and accessible with NVDA and I'm sure JAWS as well. They also have projects for AIM (AOL Instant Messenger) and ICQ, including for Android and iOS, and are working on a web client for MSN. (I don't know if AIM or ICQ are accessible with screen readers, as I have never tried them.) Anyway, if you're over twenty-one (my personal request), have read my profile here, and wish to add me, I am dandylover1@escargot.chat. You can find everything here.

Note: If you already have Windows Live/MSN Messenger on your system, you will still need to download their version and create an account. Your Microsoft, MSN, or Hotmail one won't work for signing in. Also, remember to click on RUN_AFTER_INSTALL.exe, in order to patch the program to the Escargot server.

#accessibility #AIM #Android #AOL #blind #chat #Escargot #EscargotChat #ICQ #IOS #Messenger #MSN #MsnMessenger #Microsoft #NVDA #Talkback #technology #Voiceover #Windows #WindowsLiveMessenger

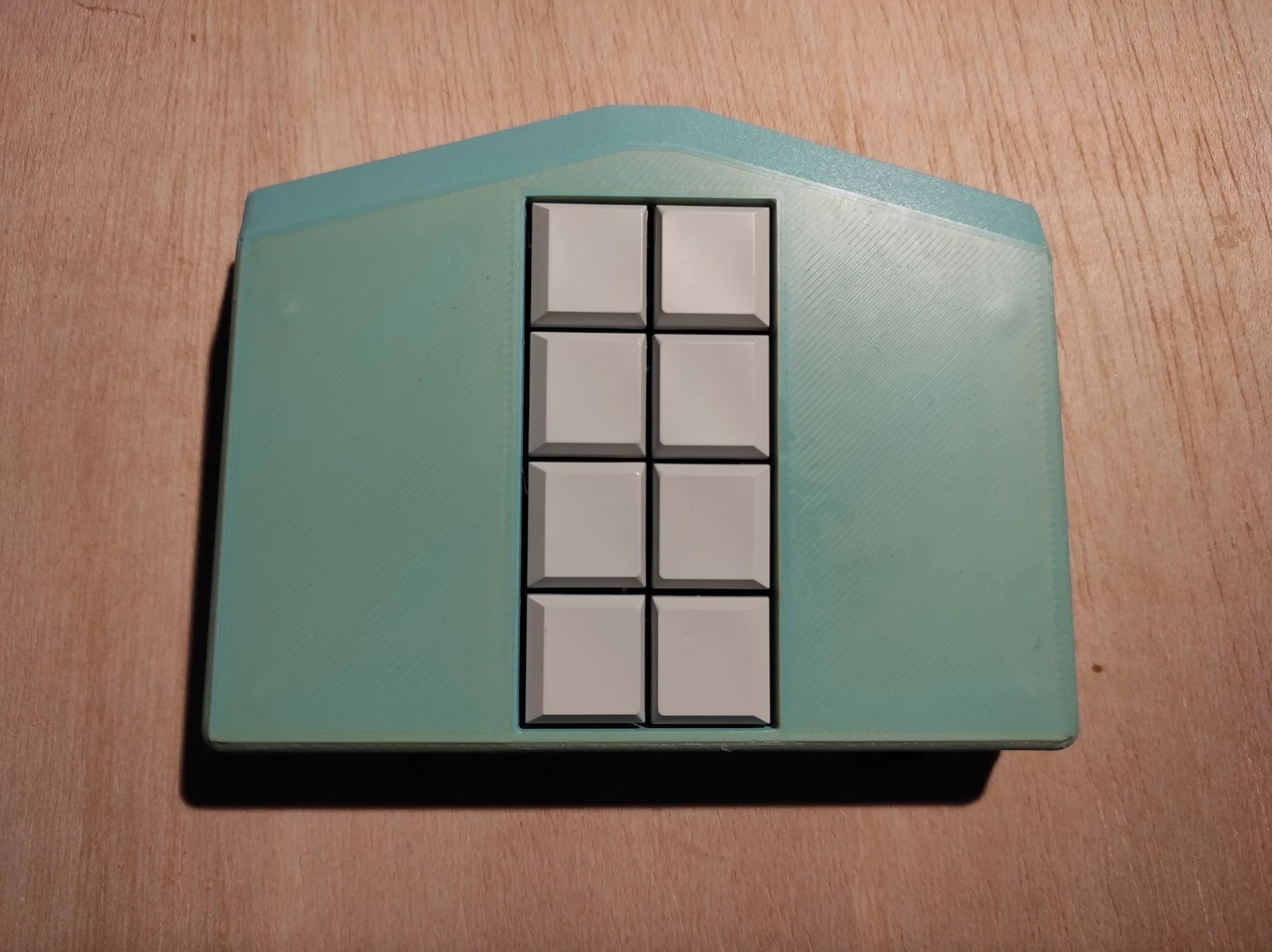

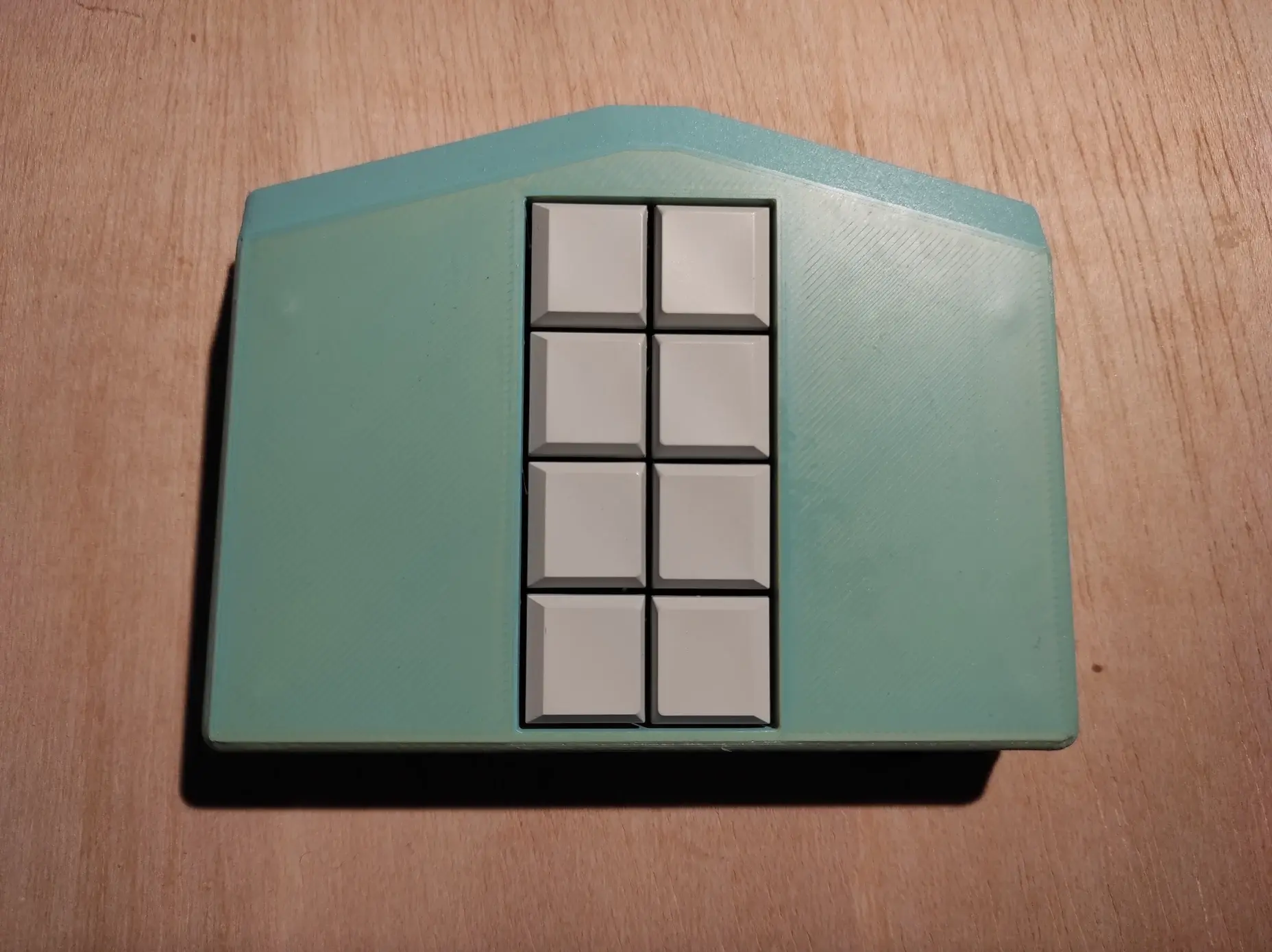

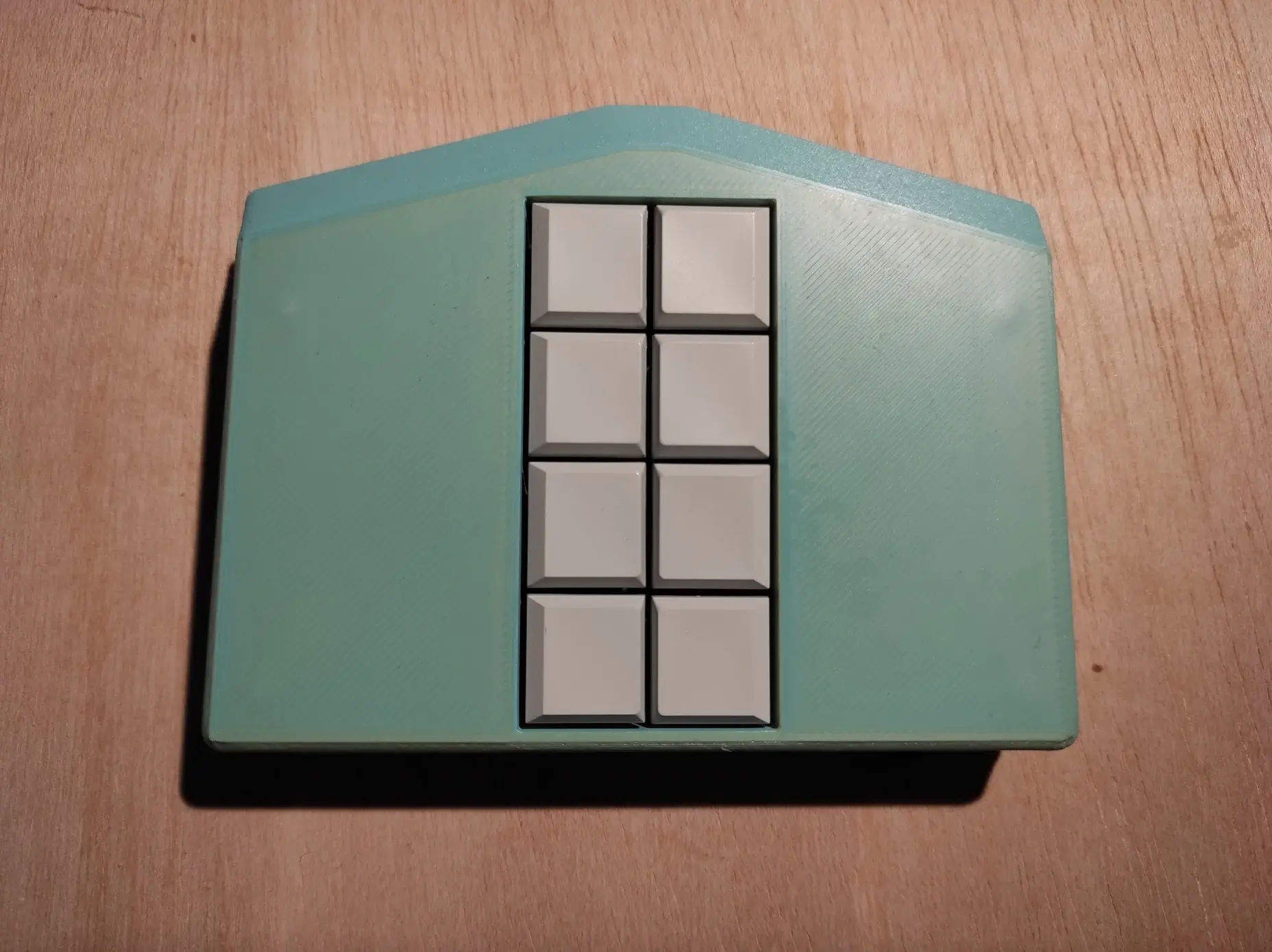

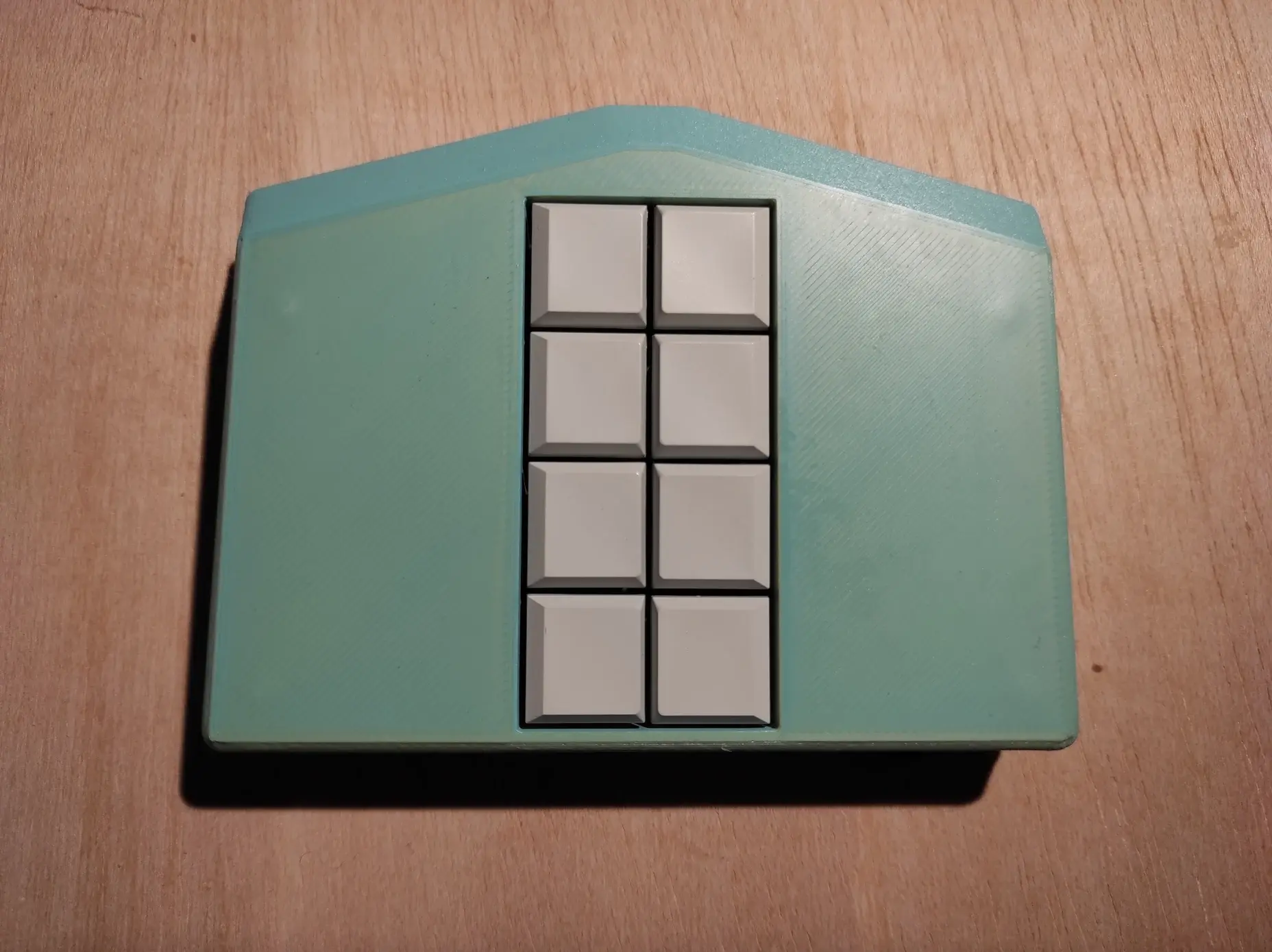

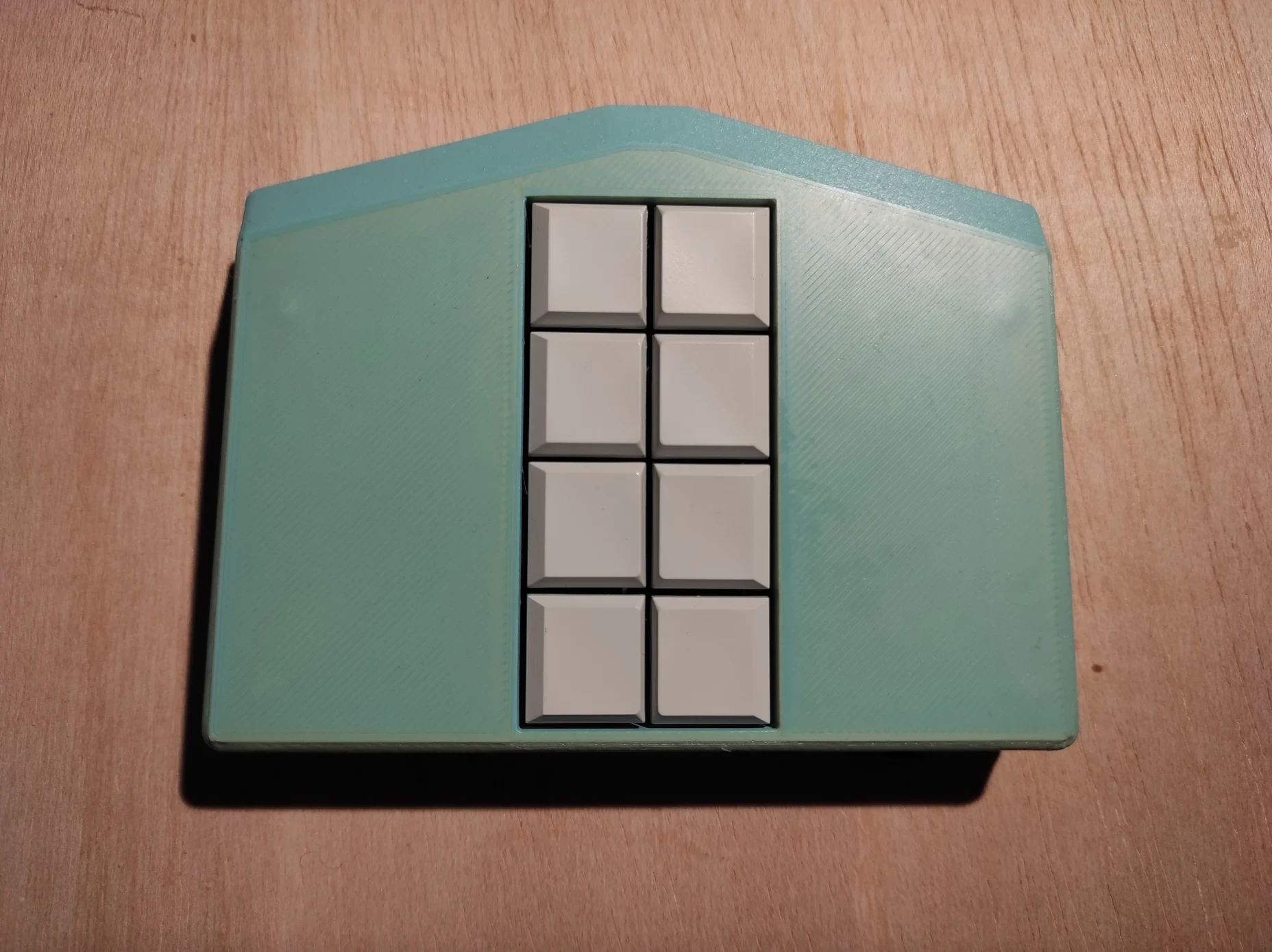

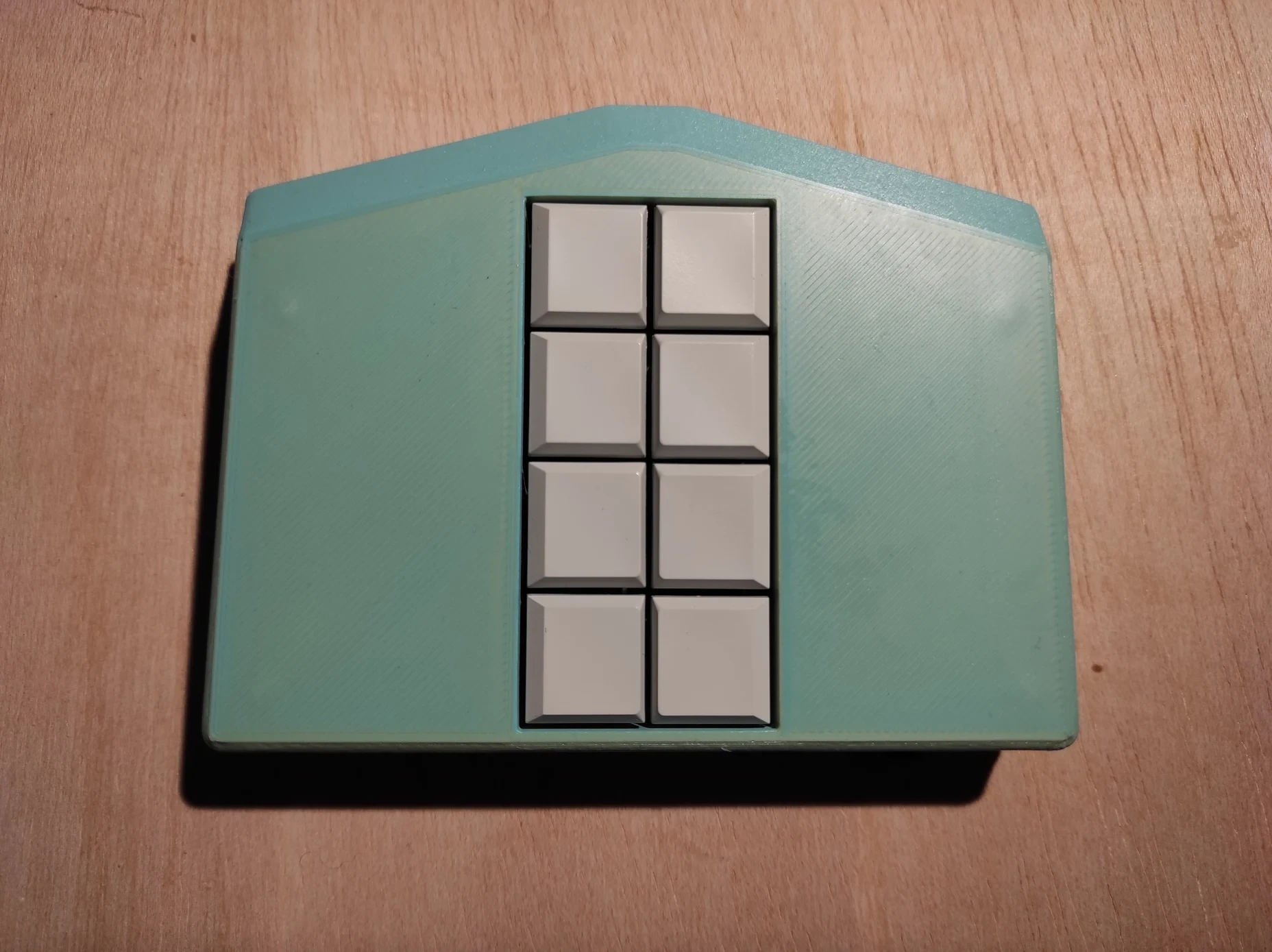

The new series presents inclusive innovations in more detail. youtu.be/GA7kCZowkks #make #blind #inclusion

Episode 1: the Metabraille keyboard, with Johannes Střelka-Petz.

EBU is delighted to launch its #a11yTechies series with an interesting chat with Johannes Střelka-Petz, from Vienna. Through close collaboration with people ... YouTube

Ok I debated making this but it cannot hurt. I hope all my fellow #Blind, #DeafBlind, and #VisuallyImpaired people, along with their family, friends, and others have had a great time at the #ACB and/or #NFB 2025 conventions. I am taking this time, and the increased activity on certain hashtags to do some shameless promotion of #OurBlind. OurBlind comprises the Discord, Lemmy, and Reddit communities operated by the staff of the r/Blind subreddit, as well as those who have joined since the creation of the Discord in 2022, and Lemmy in 2023. We have members from all over the world, and of all ages, hearing and vision levels, and are a safe space for Our LGBTQIA+ and neurodiverse friends. Our general community guidelines, and the links to reach our platforms can be found on our website.

Well y'all, I might have to go back to Windows. With Fedora 42, and Orca 48.6, I cannot use Google Docs well at all on Google Chrome, and on Firefox, the outline view which I use to navigate through many, many headings in a document I need for work, isn't usable at all. I can arrow up and down all I want, but nothing speaks.

OpenProject - Open Source Project Management Software

Open source project management software for classic, agile or hybrid project management: task management✓ Gantt charts✓ boards✓ team collaboration✓ time and cost reporting✓ FREE trial! OpenProject

Tuesday, July 1, 7:00 PM Eastern time

us02web.zoom.us/j/81033758140?…

#BTSpeak #Blind

We are pleased to be able to offer you these features, along with being able to set reminders for your appointments, in this latest free update for your BT Speak.

Read all of the details at

blazietech.com/july-2025-updat…

DG

#BTSpeak #blind

Live from HAM RADIO Friedrichshafen - 48th International Amateur Radio Exhibition - Stream 1

73 and thanks for watching. YouTube

Make this End of Financial Year special with an #EOFY donation to NV Access, or as we call it, #NEOFY!

Your gift helps keep the NVDA screen reader free for blind people around the world, helping give EVERYONE access to technology!

Donations over $2 are #tax deductible in Australia.

#Donation #Technology #Blind #GoodCause #Cause #Philanthropy

acapela-nvda.com/blog/

#NVDA #NVDASR #blind

Hi @fireborn ! Is this your blogpost?

fireborn.mataroa.blog/blog/you…

If so, I just wanted to thank you from the bottom of my heart.

I love #linux and always have. I have literally made my career with it ever since I first booted the H.J. Lu boot/root floppy set on my 486 DX2/66, but I'm partially #blind, and my vision is getting worse as I age.

And at times the amount of negativity and crap I get when I say that I generally run #WSL on Windows or a Mac? It's huge, pointless, and speaks to some ways in which parts of the Linux community are its own worst enemy.

When screen zoom broke for 2 years in #ubuntu and many of us kept signposting how important this is to us, over and over, and one of the Canonical engineers wrote in the issue saying that, due simply to the very limited number of engineering hours available, this was a low priority fix? That was a wake-up call for me.

There's no malice there. It's not that anyone in the Linux community is doing an evil laugh and thrilling to the number of disabled users who can't reliably enjoy Linux on the desktop, it's about the reality that a tiny, ragtag group of engineers working for a handful of companies are doing the vast majority of the work keeping the Linux desktop world moving, and they BARELY have the bandwidth to keep development going at all, much less cater to the myriad accessibility needs of folks like us (Not comparing the nature of our disabilities, mind you. Everyone's different!).

But people like the guy your post responds to can make us feel not smart enough, not good enough, or not motivated enough to thrive in an environment that throws up HUGE obstacles, and that's just not right.

Pardon the length, I have Strong Feelings about this stuff as you can see, and thanks again for posting!

github.com/nvaccess/nvda/issue…

#NVDASR #blind

Add a more useful mechanism for modifying the secure mode config

The current means of editing the config for NVDA on secure screens is rather clunky and has been giving many users issues for a long time. Much discussion has been had on the matter, and I'm coming... ultrasound1372 (GitHub)

It's been quiet here for a couple of weeks because a project I was hoping to begin fell through.

stuff.interfree.ca/2025/06/22/flight-simulation.html

#blind #accessibility #screenreader #accessible #flight #simulation #fsx #audiogames #games #gaming

A Walk Through Amsterdam - Marco Salsiccia

Chancey Fleet and Marco Salsiccia walking through the streets of Amsterdam as blind travelers. marconius.com

After reading this thread, I don't want a new Mac anymore. I do wish there were posts like this going over Android and Windows' accessibility frameworks, but I'll take what I can get.

applevis.com/comment/188396#co…

#apple #accessibility #VoiceOver #MacOS #mac #blind

The State of Screen readers in macOS | AppleVis

I know this subject is somewhat overused, but I have some thoughts that I can't help but want to discuss with the people in here. www.applevis.com

"Smart glasses offering a combination of sensory substitution based 'raw' vision and AI-based scene description and OCR appears to be technically and economically the most feasible and sustainable way toward meeting expectations, needs and interests of many blind people." artificialvision.com/neuralink…

And yes, I did already check this statement with a number of totally blind people, including a congenitally blind and a late-blind person.

#BCI #NeuroTech #blind #blindness

White paper: Why The vOICe will likely defeat Neuralink Blindsight

Why The vOICe vision BCI for the blind can likely defeat Neuralink Blindsight (and other brain implants for restoring vision) www.artificialvision.com

This felt too valuable not to share. Braille-labeled maps of washrooms to help people find and use facilities in the washroom. Everyone deserves to get in, do their business, wash their hands, and get out in peace and safety.

This seems valuable for all public spaces.

#a11y Experts, I've a question:

How is the state of #hidden #content support in 2025?

That seems outdated:

stevefaulkner.github.io/HTML5a…

I wish to display a list of words only for #screenreader users.

It will be the real text content for a canvas element (word cloud). I hope I can make it #accessible for #blind users.

Or is there a better way to provide the word list?

Maybe @SteveFaulkner@mastodon.social has an idea?

First, the promised audio: audiopub.site/listen/7398e304-…

If you don't know what this is all about, take a read through our website to learn more: kpguild.games

If you liked what you heard, or what you read, enough to wish you could support us, you can do that through our Patreon and gain some cool benefits as you do: patreon.com/KPGuild

Enjoy listening!

#blind #audiogame

Get more from The Kuloran Players on Patreon

Designing the greatest sounding MMO RPG this side of Klagrond! The Kuloran Players (Patreon)

It has been an incredible two years since NV Access founders Mick Curran & Jamie Teh featured on Australian Story: abc.net.au/news/2023-06-05/mic…

The impact NVDA has for blind people around the world has only grown & the need is as great now as ever!

You can watch the Audio Description enabled version of Australian Story: youtu.be/3i7gkN-1sAI

Regular version: youtu.be/jwHbXh3WzSw

#NVDA #NVDAsr #ScreenReader #Blind #Accessibility #FreeSoftware #FOSS #Impact #Australia #AustralianStory

ABC News

ABC News provides the latest news and headlines in Australia and around the world. Kristine Taylor (Australian Broadcasting Corporation)

![[UK] Get paid to share your feedback on accessibility as a screen reader or magnification user - RBlind](https://fedi.ml/photo/preview/640/679778)