I bought myself a new keyboard with Christmas money, and after just a day of using it, I'm honestly kind of stunned by how much of a difference it's making.

I picked up a Keychron K10 Max from Amazon and got it yesterday, and I don't think I ever want to go back to a membrane keyboard again.

For context: before this, I was using a Logitech Ergo K860. It's a split membrane keyboard that a lot of people like for ergonomics, and it did help in some ways, but for me it was also limiting. My hands don't stay neatly parked in one position, and the enforced split often worked against how I naturally move. It also wasn't rechargeable, and the large built-in wrist rest (which I know some people love) mostly became a dirt-collecting obstacle that I had to work around.

Another big factor for me is that I often work from bed. That means my keyboard isn't sitting on a perfectly stable desk. It's on a tray, my lap, or bedding that shifts as I move.

The Logitech Ergo K860 is very light, which sounds nice on paper, but in practice it meant the keyboard was easy to knock around, slide out of position, or tilt unexpectedly. Combined with the split layout, that meant I was constantly re-orienting myself instead of just typing.

The Keychron, by contrast, is noticeably heavier, and that turns out to be a feature. It stays put. It doesn't drift when my hands move. It feels planted in a way that reduces both physical effort and mental overhead. I don't have to think about where the keyboard is; I can just use it.

For a bed-based workflow, that stability matters more than I realized.

With chronic pain, hand fatigue, and accessibility needs, keyboards are not a neutral tool. They shape how long I can work, how accurately I can type, and how much energy I spend compensating instead of thinking.

This new keyboard feels solid, responsive, and predictable in a way I didn't realize I was missing. The keys register cleanly without requiring force, and the feedback is clear without being harsh. I'm not fighting the keyboard anymore. It's just doing what I ask.

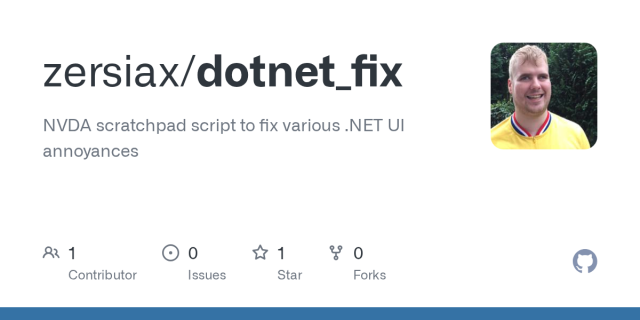

What surprised me even more is how much better the software side feels from an accessibility perspective. Keychron's Launcher and its use of QMK are far more usable for me than Logitech Options Plus ever was. Being able to work with something that's web-based, text-oriented, and closer to open standards makes a huge difference as a screen reader user. I can reason about what the keyboard is doing instead of wrestling with a visually dense, mouse-centric interface.

That matters a lot. When your primary interface to the computer is the keyboard, both the hardware and the configuration tools need to cooperate with you.

I know mechanical keyboards aren't new, but this is my first one, and I finally understand why people say they'll never go back. For me, this isn't about aesthetics or trends. It's about having a tool that respects my body and my access needs and lets me focus on the work itself.

I'm really grateful I was able to get this, and I'm genuinely excited to keep dialing it in. Sometimes the right piece of hardware, paired with software that doesn't fight you, doesn't just improve comfort. It quietly expands what feels possible.

#Accessibility #DisabledTech #AssistiveTechnology #ScreenReader #NVDA #MechanicalKeyboards #Keychron

@accessibility @disability @spoonies @mastoblind